The GPTJUDGE: Justice in a Generative AI World article will be published in October by Duke Law & Technology Review. The authors are Maura Grossman, Paul Grimm, Daniel Brown and Molly Xu. In addition to suggesting a legal framework for judges to determine if proffered evidence is Real or Fake, the article provides good background on generative AI. It does so in an entertaining way, touching on a wide variety of issues.

The evidentiary issues raised by generative AI and deepfakes, and the analysis of the federal rules, are the parts of their article that interest me the most. Their proposed legal framework for adjudication of authenticity is excellent. It deserves attention by judges and arbitrators everywhere. ‘Real or Fake’ is not just a meme; it is an important issue of the day, both in the law and in general culture. Justice depends on Truth, on true facts, reality. Justice is difficult, perhaps impossible, to attain when lies and fakes confuse the courtroom.

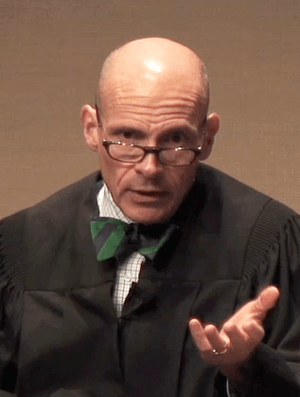

Before I go into the article, let’s play the ‘Real or Fake’ game now sweeping the Internet, with some pictures of the two lead authors, both public figures, Paul Grimm and Maura Grossman. Which of these pictures do you think are Real and which are Fake? There will be more tests like this to come. Leave a comment with your best guesses or use your AI to get in touch with me.

Introduction

The GPTJUDGE: Justice in a Generative AI World will be published in October in Vol. 23, Iss. 1 of Duke Law & Technology Review (Oct. 2023). Maura Grossman was kind enough to provide me with an author’s advance version. My good colleague Doug Austin of E-Discovery Today has already written a good summary of the entire twenty-six-page article. The GPTJudge is a very ambitious article that covers A to Z on Generative AI and law. I recommend you read Doug’s article, or better yet, read the whole GPTJudge article for yourself.

Unlike Doug’s article, mine will, as mentioned, focus on only one part of the article. This is the part of The GPTJudge, found at pages 12-18, that addresses the thorny evidentiary issues concerning LLM AI. Is the evidence offered real or fake? What rules govern these issues? And what should a judge, or in my case an arbitrator, do when these issues arise?

Although Doug Austin’s article is a real article, not a fake, it appears to me that Doug did have a wee bit of help in writing from the devil itself here, namely ChatGPT-4. He carefully reviewed and edited the Generative AI’s work, I am sure. It is a fine article, but has a familiar ring. Parts of my article will also have that familiar generative AI tone. It is real Ralph Losey writing, not a fake. I am pretty certain of that. But, truth be told, I too used GPT AI, namely ChatGPT-4, to help me write this article. My own tiny human brain needs all of the AI help it can get to accomplish the ambitious task I have set myself here of summarizing this complex corner of the Duke law review article. ChatGPT is a good writing tool, and so is the WordPress software that I also use to create these blogs. I now also use another tool to craft my blogs, a generative AI program called Midjourney. Here, for instance, Midjourney helped me create some pretty cool, but fake, images of Grimm and Grossman. Another ‘Real or Fake’ test will be coming soon, but first some more background on the lead authors, whom I know well. This is not to slight the other co-authors: Daniel G. Brown, Ph.D., a fellow professor with Maura Grossman at the David R. Cheriton School of Computer Science at the University of Waterloo, and Molly (Yiming) Xu, an undergraduate student of Professor Brown and Professor Grossman who helped with the article.

Lead Authors, the Very Real Paul Grimm and Maura Grossman

One of the lead authors of The GPTJudge, Paul Grimm, was a real District Court Judge in Baltimore until just recently. He is now a Professor at Duke Law and Director of the Bolch Judicial Institute, and, as all who know him will agree, a truly outstanding, very real person. Paul Grimm is without question one of the top judicial scholars of our time, especially when it comes to evidentiary issues. I have had the privilege of listening to him speak many times and even teaching with him a few times. He was even kind enough to write the Foreword to one of my books.

Now back to ‘Real or Fake’ starring Paul Grimm. You be the judge.

The lead author of The GPTJUDGE: Justice in a Generative AI World is a top expert in law and technology and my friend, Maura Grossman, PhD. She is now a practicing attorney with her own law and consulting firm, Maura Grossman Law, in Buffalo, NY; a Special Master for courts throughout the country; a Research Professor in the School of Computer Science at the University of Waterloo; an Adjunct Professor at Osgoode Hall Law School of York University; and an affiliate faculty member of the Vector Institute in Ontario. Phew! How does she do it all? I suspect substantial help from AI. Now it is Maura’s turn to be the subject of my ‘Real or Fake’ quiz, then we will get into the article proper.

What do you think, ‘Real or Fake’ Maura Grossman?

One more thing before I begin. Judge Grimm and Maura Grossman have recently written another article that you should also put on your must-read pile, or better yet, click the link, read it now and bookmark it: Paul W. Grimm, Maura R. Grossman, and Gordon V. Cormack, Artificial Intelligence as Evidence, 19 Nw. J. Tech. & Intell. Prop. 9, 84 (2021). The well-known information scientist, and Maura’s husband, Gordon Cormack, is also an author. So you know the technical AI details in all of these articles are top notch.

Summary of the Segment of the Law Review Article, The GPTJudge, Covering Evidentiary Issues

Judges will soon routinely face the issues raised by evidence created by GPT AI and by any evidence alleged to be fake. This will force judges to assess the authenticity and admissibility of evidence challenged as inaccurate or as a potential deepfake. The existing Federal Rules of Evidence and their state counterparts can, the authors contend, be flexibly applied to accommodate the emerging AI technology. Although not covered by the article, in my personal opinion the rules governing arbitrations are also flexible enough. The authors contend, and I generally agree, that it is infeasible to amend these rules for every new technological development, such as deepfakes, because the revision process required to amend federal rules is so time-consuming. Paul Grimm should know, as he used to be on the rules committee that revises the Federal Rules of Civil Procedure.

The admissibility of AI evidence hinges on several key areas under the Federal Rules of Evidence: relevance (401), authenticity (901 and 902), the judge’s gatekeeper role in evaluating evidence (104(a)), the jury’s role in determining the authenticity of contested evidence (104(b)), and the need to exclude prejudicial evidence, even if relevant (403).

Judges should adapt the rules to allow for their application to new technologies like AI, without rigidly adhering to them, to promote the development of evidence law. Federal Rule of Evidence 702, which pertains to scientific, technical, and specialized evidence, requires judges to ensure such evidence is based on sufficient facts, reliable methodology, and has been applied accurately to the case.

These evidence rules provide judges and lawyers with enough guidance. Arbitrators probably do not need special new rules either, especially because there are no juries in arbitration.

It is important when assessing potential GPT AI evidence, or alleged deepfake evidence, that judges pay close attention to the rule requiring the exclusion of evidence that could lead to unfair prejudice (Rule 403). This stresses the importance of ensuring that such evidence is both valid and reliable before being presented to the jury. This rule obviously has no direct application to arbitration, but still, arbitrators must take care they are not fooled by fakes.

When evaluating the authenticity of potential evidence, including deepfakes or other disputed evidence, judges should refer to Federal Rule of Evidence 702 and the Daubert factors to assess the evidence’s validity and reliability. Careful consideration of the potential for unfair prejudice that can occur with the introduction of unreliable technical evidence is of prime importance. The authors stress that admissibility should not hinge solely on whether the evidence is more likely than not to be genuine (the preponderance standard), but should also depend on the potential risks or negative outcomes if the evidence turns out to be fake, or insufficiently valid and reliable. Evidence should be excluded if its authenticity is doubtful and the risk of unfair or incorrect outcomes is high. Again, the presence or absence of a jury tempers this risk.

So how are judges supposed to make the call on ‘Real or Fake’? Judge Paul Grimm and Maura Grossman recommend that judges follow three steps to make these determinations:

- Scheduling Order: Judges should set a deadline in their scheduling order for parties intending to introduce potential GPT AI evidence to disclose this to the opposing party and the court well in advance. This allows the opposing counsel time to decide whether they want to challenge the admissibility of the evidence and seek necessary discovery.

- The Hearing: When there’s a challenge to the admissibility of the evidence as AI-generated or deepfake, judges should set an evidentiary hearing on the testimony and other evidence needed to rule on the admissibility. This hearing should be scheduled significantly ahead of the trial to allow the judge enough time to evaluate the evidentiary record and make a ruling.

- The Ruling: Following the hearing, judges should carefully consider the evidence and arguments presented during the hearing and issue a ruling. This ruling should assess whether the proponent of the evidence has adequately authenticated it. The judge should address the relevance, authenticity, and prejudice arguments. Special attention should be given to the validity and reliability of the challenged evidence, weighing its relevance against the risk of an unfair or excessively prejudicial outcome.

This is good advice for any judge facing these issues, and for arbitrators too, as well as attorneys and litigants. Everyone should follow these three simple steps to escape the fake traps that Generative AI can create.

Conclusion

I commend the entire law review article for your reading, but especially the section on evidentiary issues. This section, pp. 12-18, was, for me, particularly interesting and helpful. Also see the earlier article by Grimm, Grossman and Cormack, Artificial Intelligence as Evidence. Judges and arbitrators will all soon be facing many challenges regarding the authenticity and admissibility of evidence related to AI. ‘Real or Fake’ may be a key question of our times.

The authors insist, and I somewhat reluctantly agree, that it’s not practical to amend the existing Federal Rules of Evidence for every new technological development. Instead, as the authors also point out, and I again agree, these rules provide a flexible framework. Our rules already allow judges to evaluate factors like relevance, authenticity, and potential prejudice of AI-generated evidence. Rule 702 in particular is crucial because it requires that scientific, technical, or specialized evidence be based on ample facts, reliable methodology, and a sound application to the case at hand. The same situation applies to arbitrations, although arbitrations are typically more informal and there are no juries. Still, arbitrators should be on the lookout for fake or unreliable evidence and look for general guidance from the federal evidence rules.

Judge Grimm and Dr. Grossman propose a three-step process to guide judges when handling potential GPT AI or deepfake evidence. I like the simple three-step procedure proposed. There is more to it than described in this summary, of course. You need to read the whole article – THE GPTJUDGE: JUSTICE IN A GENERATIVE AI WORLD.

I urge other lawyers, arbitrators and judges to try out the three steps proposed when they face these issues. The just, speedy and inexpensive resolution of disputes must remain the polestar of all our dispute resolution. These suggestions, if employed in a reasoned and prudent manner, can help us to do that. Ensuring the reliability of evidence is important because Justice arises out of truth, not lies and fakes.

Copyright Ralph Losey 2023, to text and fake images only.