This article is the conclusion to my three-part review of Robophobia by Professor Andrew Woods. Robophobia, 93 U. Colo. L. Rev. 51 (Winter, 2022). See here for Part 1 and Part 2 of my review. This may seem like a long review, but remember that Professor Woods’ article has 24,614 words, not that I’m counting, including 308 footnotes. It is a groundbreaking work and deserves our attention.

Part 2 ended with a review of Part V of Andrew’s article. There he laid out his key argument, The Case Against Robophobia. Now my review moves on to Part VI of the article, his proposed solutions, and the Conclusion, his and mine.

Part VI. Fighting Robophobia

So what do we do? Here is Andrew Woods’ overview of the solution.

The costs of robophobia are considerable and they are likely to increase as machines become more capable. The greater the difference between human and robot performance, the greater the costs of preferring a human. Unfortunately, the problem of robophobia is itself a barrier to reform. It has been shown in several settings that people do not want government rules mandating robot use.[272] And policymakers in democratic political systems must navigate around—and resist the urge to pander to—people’s robophobic intuitions. So, what can be done?

Robophobia is a decision-making bias—a judgment error.[273] Fortunately, we have well-known tools for addressing judgment errors. These include framing effects, exposure, education and training, and, finally, more radical measures like designing situations so that biased decision-makers—human or machine—are kept out of the loop entirely.

If you don’t know what all of these “well-known” tools are, don’t feel too bad. I don’t either. Hey, we’re only human. And you’d rather read my all-too-human review of this article than one written by a computer, which is all too common these days. You would, right? Well, ok, I do know what exposure, education and training mean. I’ve certainly sat through more than my fair share of anti-prejudice training, often very boring training at that. Now we add robots to the list of sensitivity training topics. Still, what the hell are “framing effects”?

More on that in a second, but also, what does the professor mean by keeping biased decision-makers out of the loop? As a longtime promoter of “Hybrid” Multimodal Predictive Coding, where hybrid means humans and AI working together, my defenses go up when there is talk of making it the default to exclude humans entirely. Yes, I suppose my prejudices are now showing. But when it comes to my experience in working with robots in evidence search, I like to delegate, but have the final say. That’s what I mean by balanced. More on this later.

Professor Woods tries to explain “framing effects” so that even a non-PhD lawyer like me can understand them. It turns out that framing effects have to do with design: how you frame a question often shapes the answers. Objection, leading! This includes what lawyers commonly call “putting lipstick on a pig.” Make the robots act more human-like (but not so much that you enter the “uncanny valley”). For example, have them pause for a second or two, which is like an hour at their speeds, before they make a recommendation. Maybe even say “hmm” and stroke their chin. How about showing some good robot paintings and poems? Non-creepy anthropomorphic design strategies appear to work, and so do strategies that make “use of a robot” the default action instead of an alternate elective. This requires you to make an affirmative effort to opt out and use a human instead of a robot. We are so lazy that we might just go with the process, especially if we are repeatedly told how it will save us money and provide better results; e.g., predictive coding (TAR) is better than sliced bread!

Now to the threatening idea, to me at least, and possibly you, to just keep humans out of the loop entirely. Robot knows best. Big Brother as a robot. Does this thing even have a plug?

Let’s see what Professor Woods has to say about this “just let the machines do it” idea. I agree with much of what he says here, especially about automated driving, but I still want to know how to turn the robots off and stop the surveillance.

[T]here is considerable evidence in a number of scenarios that keeping humans in the loop eliminates the advantages of having an automated system in the first place and, in some instances, actually makes things worse. . . . The National Highway Traffic Safety Administration recognizes six levels of automotive autonomy, ranging from 0 (no automation) to 3 (conditional automation) to 5 (full automation).[302] Some people believe that a fully autonomous system is safer than a human driver, but that a semi-autonomous system—where a human driver works with the autonomous system—is actually less safe than a system that is purely human driven.[303] That is, autonomy can increase safety, but the increase in safety is not linear; introducing some forms of autonomy can introduce new risks.[304] . . .

If algorithms can, at times, make better decisions than humans, but human use of those algorithms eliminates those gains, what should be done? One answer is to ban semi-autonomous systems altogether; human-robot interaction effects are no longer a problem if humans and robots are not allowed to interact. Another possibility would be to ban humans from some decision-making processes; a purely robotic system would not have the same negative human-robot interaction effects. This might mean fewer automated systems but would only leave those with full autonomy.

If humans misjudge algorithms—by both over- and underrelying on them—can they safely coexist? Take again the example of self-driving cars. If robot-driven cars are safer than human-driven cars but human-driven cars become less safe around robot cars, what should be done? Robots can simultaneously make the problem of road safety better and worse. They might shift the distribution of road harms from one set of drivers to another. Or it might be that having some number of robot drivers in a sea of human drivers is actually less safe for all drivers than a system with no robot drivers. The problem is the interaction effect. In response, we might aim to improve robots to work better with humans or improve humans to work better with robots. Alternatively, we might simply decide there are places where human-robot combinations are too risky and instead opt for purely human or purely machine decision-making.

Conclusion

So the solution to this problem is a work in progress. What did you expect from an article that is the first to recognize robophobia? These solutions will take time for us to work out. I predict this will be the first of many articles on this topic, an iterative process, like most things robotic, including law’s favorite, predictive coding. I have been teaching and talking about this stuff for a long time. And here I am doing it again in yet another iteration. For nostalgia’s sake, check out the snazzy video I made in 2012 to introduce one of my first methods for using evidence-finding robots. I was more dramatic back then.

Even though it worries me, I understand what Andrew means about the problems of humans in the loop. I have seen far too many lawyers, usually dilettantes with predictive coding, screw everything up royally through simple mistakes because they did not know what they were doing. That is one reason that I developed and taught my method of semi-automated document review for years.

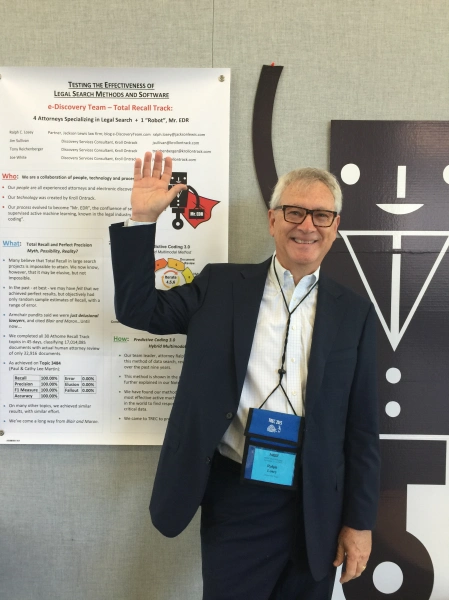

I have tried to dumb it down as much as possible to reduce human error and make it as accessible as possible, but it is still fairly complex. The latest version is called Predictive Coding 4.0, a Hybrid Multimodal IST method, where Hybrid means both man and machine, Multimodal means all types of search algorithms are used, and IST stands for Intelligently Spaced Training. IST means you keep training until first-pass relevance review is completed, a type of Continuous Active Learning, which Drs. Grossman and Cormack, and then others, called CAL. I have had a chance to test out and prove this type of robot many times, including at NIST, with Maura and Gordon. After much hands-on experience, I have overcome many of my robophobias, but not all. See: WHY I LOVE PREDICTIVE CODING: Making Document Review Fun Again with Mr. EDR and Predictive Coding 4.0.
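For readers who think in code, the keep-training-until-review-is-done idea can be sketched roughly as follows. To be clear, this is a toy illustration and not Predictive Coding 4.0 or any vendor's software: the keyword-counting "model," the function names, the batch size, and the stopping rule (quit when a batch turns up nothing relevant) are all my own assumptions, chosen only to show the loop of rank, review, retrain.

```python
from collections import Counter

def train(labeled):
    """Toy model: +1 per word seen in a relevant doc, -1 in an irrelevant one."""
    weights = Counter()
    for text, relevant in labeled:
        for word in set(text.lower().split()):
            weights[word] += 1 if relevant else -1
    return weights

def score(weights, text):
    """Sum the learned weights of the distinct words in a document."""
    return sum(weights[w] for w in set(text.lower().split()))

def continuous_active_learning(docs, review, seed_ids, batch_size=2):
    """Rank, review the top batch, retrain, repeat.

    `review` stands in for the human lawyer making the relevance call.
    Stopping rule (an assumption): stop when a whole batch is irrelevant.
    """
    labels = {i: review(docs[i]) for i in seed_ids}
    while len(labels) < len(docs):
        weights = train([(docs[i], lab) for i, lab in labels.items()])
        unreviewed = [i for i in range(len(docs)) if i not in labels]
        unreviewed.sort(key=lambda i: score(weights, docs[i]), reverse=True)
        found = 0
        for i in unreviewed[:batch_size]:
            labels[i] = review(docs[i])  # human call feeds the next training round
            found += labels[i]
        if found == 0:
            break
    return labels

# Hypothetical mini-collection; the "lawyer" here just looks for the word "merger".
docs = [
    "merger agreement draft price",
    "lunch menu friday",
    "merger price negotiation",
    "fantasy football league",
    "agreement on merger terms",
    "parking garage notice",
    "holiday party invite",
]
labels = continuous_active_learning(docs, lambda text: "merger" in text, seed_ids=[0, 1])
relevant_ids = sorted(i for i, is_relevant in labels.items() if is_relevant)
```

The point of the sketch is the hybrid loop itself: the machine decides what the human looks at next, and every human call goes straight back into training. A real system would use a proper classifier and a far more careful stopping decision.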

I recognize the dangers of keeping humans in the loop that Professor Woods points out. That is one reason my teaching has always emphasized quality controls. That’s Step Seven in my semi-automated process of document review, ZEN Quality Assurance Tests, where ZEN stands for Zero Error Numerics (explained on the ZEN website here). Note that this quality control step uses both robots (algorithms) and humans (lawyers).

Moreover, as I have discussed in my articles, with human lawyers as the subject matter experts in the machine training (step four), the old “garbage in, garbage out” problem remains. It is even worse if the human is unethical and intentionally games the machine training by calling black white, i.e., intentionally calling a relevant document irrelevant. That’s a danger of any hybrid process of machine training and that’s one reason we have quality controls.

But eventually, the machines will get smart enough to see through intentionally bad training, separate the treasure from the trash, and find all of the highly relevant ESI we need. Our law robots can already do this, to a certain extent. They have been correcting my unintentional errors on relevance calls for years. That is part of the iterative process of active machine learning (steps four, five and six in my hybrid process). Such corrections are expected, and once your ego gets over it, you grow to like it. After all, it’s nice having a helper that can read at the speed of light, well, speed of electrons anyway, and has perfect recall.

Still, as of today at least, expert lawyers know more about the substantive law than robots do. When the day comes that a computer is the best overall SME for the job, and it surely will, maybe humans can be taken out of the loop. Perhaps humans would just serve as an avenue of appeal if certain circumstances are met, much like trying to appeal an arbitration award (my new area of study and practice).

My conclusion is that you should read the whole article by Professor Andrew Woods and share it with your friends and colleagues. It deserves the attention. Robophobia, 93 U. Colo. L. Rev. 51 (Winter, 2022). I end with a quote of the last paragraph of Andrew’s article. For still more, look for webinars in the future where Andrew and I grapple with these questions. (We met for the first time after I began publishing this series.) I for one would like to know more about his debiasing and design strategies. Now for Andrew’s last word.

In this Article, I explored relatively standard approaches to what is essentially a judgment error. If our policymaking is biased, the first step is to remove the bias from existing rules and policies. The second step might be to inoculate society against the bias—through education and other debiasing strategies. A third and even stronger step might be to design situations so that the bias is not allowed to operate. For example, if people tend to choose poorer performing human doctors over better performing robot alternatives, a strong regulatory response would be to simply eliminate the choice. Should humans simply be banned from some kinds of jobs? Should robots be required? These are serious questions. If they sound absurd, it is because our conversation about the appropriate role for machines in society is inflected with a fear of and bias against machines.

Ralph Losey Copyright 2022 – ALL RIGHTS RESERVED