[Editor’s Note: EDRM does not take a position on rules or legislation, and is proud to publish the advocacy of our leaders. All images are Losey prompted through Midjourney unless noted.]

In a speech on June 21, 2023, to the Center for Strategic and International Studies (CSIS), Senate Majority Leader Charles Schumer explained the plan that his technical advisors have formulated for regulation of Ai. The speechwriters used well-crafted language to make many important regulatory suggestions.

Favorite Quotes from Schumer’s Speech

I have studied the speech carefully and begin this article by sharing a few of my personal favorite quotes.

Change is the law of life, more so now than ever. Because of AI, change is happening to our world as we speak in ways both wondrous and startling.

It was America that revolutionized the automobile. We were the first to split the atom, to land on the moon, to unleash the internet, and create the microchip that made AI possible. AI could be our most spectacular innovation yet, a force that could ignite a new era of technological advancement, scientific discovery, and industrial might. So we must come up with a plan that encourages, not stifles, innovation in this new world of AI. And that means asking some very important questions.

AI promises to transform life on earth for the better. It will shape how we fight disease, how we tackle hunger, manage our lives, enrich our minds, and ensure peace. But there are real dangers too – job displacement, misinformation, a new age of weaponry, the risk of being unable to manage this technology altogether.

Even if many developers have good intentions there will always be rogue actors, unscrupulous companies, foreign adversaries, that will seek to harm us. Companies may not be willing to insert guardrails on their own, certainly not if their competitors won’t be forced to do so.

If we don’t program these algorithms to align with our values, they could be used to undermine our democratic foundations, especially our electoral processes.

Senator Schumer, 6/21/23

Proposed Legislative Initiative

Senator Schumer’s speech was based on a five-point outline for proposed legislation called the SAFE Innovation Framework. SAFE is an acronym for “Security, Accountability, Foundations, Explain,” and Innovation is the fifth point. This is further set out in Senator Schumer’s press release, which summarizes the points as follows:

1. Security: Safeguard our national security with AI and determine how adversaries use it, and ensure economic security for workers by mitigating and responding to job loss;

2. Accountability: Support the deployment of responsible systems to address concerns around misinformation and bias, support our creators by addressing copyright concerns, protect intellectual property, and address liability;

3. Foundations: Require that AI systems align with our democratic values at their core, protect our elections, promote AI’s societal benefits while avoiding the potential harms, and stop the Chinese Government from writing the rules of the road on AI;

4. Explain: Determine what information the federal government needs from AI developers and deployers to be a better steward of the public good, and what information the public needs to know about an AI system, data, or content.

5. Innovation: Support US-led innovation in AI technologies – including innovation in security, transparency and accountability – that focuses on unlocking the immense potential of AI and maintaining U.S. leadership in the technology.

In elaborating on Security, a key issue for any government to focus on, Schumer said in his speech:

First comes security – for our country, for American leadership, and for our workforce. We do not know what artificial intelligence will be capable of two years from now, 50 years from now, 100 years from now, in the hands of foreign adversaries, especially autocracies, or domestic rebel groups interested in extortionist financial gain or political upheaval. The dangers of AI could be extreme. We need to do everything we can to instill guardrails that make sure these groups cannot use our advances in AI for illicit and bad purpose. But we also need security for America’s workforce, because AI, particularly generative AI, is already disrupting the ways tens of millions of people make a living.

Schumer 6/21/23

Summary of Senator Schumer’s Speech

Here is a short summary made by ChatGPT-4 of all of the key points of Senator Schumer’s long speech. I checked the GPT output for accuracy and no mistakes were found, but, sorry to say baby chatbot, I did have to make several edits to bring the writing quality up to an acceptable level.

Senator Chuck Schumer’s speech at the CSIS focused on the significance and impact of artificial intelligence (AI) in contemporary society. He drew parallels between the ongoing AI revolution and the historical industrial revolution, emphasizing the potential for transformative effects on various aspects of life, such as healthcare, lifestyle management, and cognitive enhancement. However, he also highlighted the associated risks, including job displacement, misinformation, and the development of advanced weaponry.

To address these challenges, Senator Schumer advocated for proactive involvement by the US government and Congress in regulating AI. He proposed the SAFE Innovation Framework for AI Policy, which aims to balance the benefits and risks of AI while prioritizing innovation. The framework consists of two main components: a structured action plan and a collaborative policy formulation process involving AI experts.

The proposed framework seeks to address crucial questions related to collaboration and competition among AI developers, the necessary level of federal intervention, the balance between private and open AI systems, and ensuring accessibility and fair competition for innovation. Schumer outlined the SAFE (Security, Accountability, Foundations, Explainability) Innovation Framework as a means to ensure national and workforce security, accountability for the impact of AI on jobs and income distribution, and explainability of AI systems. He warned against potential disruptions similar to those caused by globalization, emphasizing the need for proper management to prevent job losses.

Schumer stressed the importance of shaping AI development and deployment in a manner that upholds democracy and individual rights. He cautioned against the misuse of AI technology, such as tracking individuals, exploiting vulnerable populations, and interfering with electoral processes through fabricated content. The senator emphasized the necessity of establishing accountability in AI practices and protecting intellectual property rights. Unregulated AI development, he warned, could jeopardize the foundations of liberty, civil rights, and justice in the United States.

Transparency and user understanding of AI decisions were identified as key factors in maintaining accountability. Schumer called on companies to develop mechanisms that allow users to comprehend how AI algorithms arrive at specific answers while respecting intellectual property. To facilitate discussions and consensus-building on AI challenges, he proposed organizing ‘AI insight forums’ with top AI developers, executives, scientists, advocates, community leaders, workers, and national-security experts. The insights gained from these forums would inform legislative action and lay the groundwork for AI policy.

In conclusion, Schumer urged Congress, the federal government, and AI experts to adopt a proactive and inclusive approach in shaping the future of AI in the United States. He emphasized the necessity of embracing AI and ensuring its safe development for the benefit of society as a whole. This will require bipartisan cooperation that sets aside ideological differences and self-interest to tackle the complex, rapidly evolving field of AI. This collective effort, he asserted, would ensure that AI innovation serves humanity’s best interests while upholding the nation’s democratic principles.

Personal Analysis

This proposal is a good start for AI regulation. I especially like the linkage between innovation and regulation. I only hope enough politicians will put partisan bickering aside to unite on this key issue. For the sake of coming generations, we need to get this right the first time.

Ai will soon make the Internet look like small potatoes. We screwed up development and regulation of the early Internet, big time. It was completely unregulated. Few could see the potential. Our blinders are off now. We all see the potential of Ai and we must not get fooled again.

As a longtime user of BBSs and of the big pre-Internet online services like CompuServe and The Source, I was one of the first lawyers on the Internet. I even had my website challenged by the Florida Bar because they thought it was an unapproved television advertisement. I was able to get the Florida Bar to change its rules, and was then invited to lecture all around Florida, where I encouraged lawyers and judges to get into computers and try the Internet.

The World Wide Web then was still a wonderful, interlinked place of learning, academic resources and friendly discussions, with just a few flames (rude, angry comments) that online communities quickly put out. Then the commercial exploitation began and the Web exploded in size. It went from a technical community BBS mentality to big business. Then we allowed our privacy to become the product. The end result is the mess you see today. If Ai regulation is ignored, there are far greater dangers ahead.

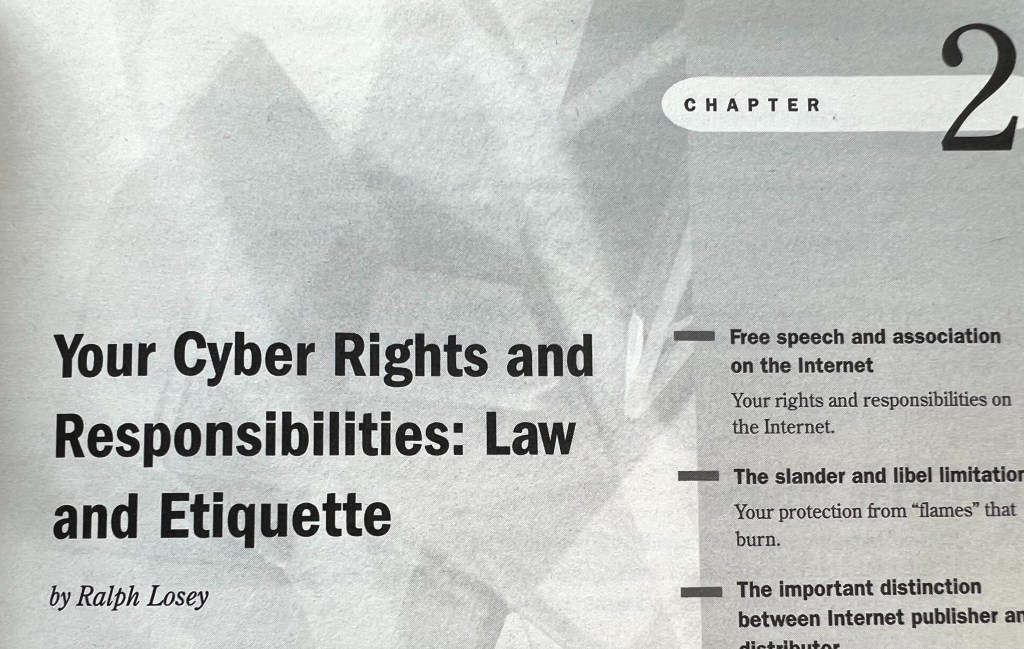

In 1996, when the Internet was still young, Macmillan found me on the Internet and asked me to write a chapter on the law of the Internet. It was for a new edition of a then best-selling book explaining everything about the Internet. The very thick book even came with a CD. See: Your Cyber Rights and Responsibilities: The Law and Etiquette of the Internet, Chapter 2 of Que’s Special Edition Using the Internet (Macmillan 3rd Ed., 1996). The subheadings of my lengthy chapter, which included numerous case links, should be familiar: “free speech and association on the Internet; the libel and slander limitation; the important distinction between Internet publisher and distributor; obscenity limitations, privacy, copyright, and fair trade on the Internet; and, protecting yourself from crime on the Internet.” Id.

I kept with my script and encouraged readers in 1996 to try the Internet, just like I am encouraging readers today to try generative Ai. I assured readers then that it was safe, that: “You have important legal rights and responsibilities in cyberspace, just like anywhere else.” My big warning concerned the dangers of computer viruses. Like most “computer lawyers” back then (that’s what we were called), I expected the Internet to continue to be a reasonable place of intellectual discourse. In retrospect, I realize my naïveté and unrealistic optimism. Today my Ai tech encouragement comes with warnings and calls for regulation.

Most online lawyers in the mid-nineties thought that the pre-cyberspace laws on the books would be adequate; individual citizens could self-regulate the Internet and prevent its exploitation. Lawyers would help. We did not want the help of Big Brother government. We were wrong. The Internet without regulation quickly became a dangerous, crass, commercial mess where billions of people were tricked into trading their personal privacy for cheap thrills.

Older now, I am still optimistic. That part is hardwired in. But I am no longer naive. We must regulate Ai and do it now. If the U.S. abdicates its legal leadership role, the E.U. will step in, or worse, the People’s Republic of China. The E.U., which I greatly admire in many respects, seems likely to over-regulate, make everything a bureaucratic mess and stifle innovation. We do not want that.

If no government does anything to regulate, which is essentially what happened when the Internet was born with the WWW in the early 90s, the hustlers will take over again. So will the dictators of the world. Only this time, it will be worse, far worse, because now the tyrannical foreign powers, and the criminals and terrorists everywhere, know and understand the power of Ai. Few in the 90s realized the impact of the Internet. The 21st Century evil-doers have already started to use Ai for their self-serving greed and attempts at world domination. I agree with this quote from the Schumer speech:

What if foreign adversaries embrace this technology to interfere in our elections? This is not about imposing one viewpoint, but it’s about ensuring people can engage in democracy without outside interference. This is one of the reasons we must move quickly. We should develop the guardrails that align with democracy and encourage the nations of the world to use them. Without taking steps to make sure AI preserves our country’s foundations, we risk the survival of our democracy.

Senator Schumer, 6/21/23

Fear the people who misuse the Ai – the terrorists, criminals and foreign agents – and not the Ai itself. That is why the U.S. needs to prepare good Ai regs now and follow up with vigorous enforcement. Our democratic way of life hangs in the balance. We should not fall into the “paralysis by analysis” trap. We should not put off taking action on the excuse that things are moving too fast now to regulate. This is the current approach of Congressman Ted Lieu, a politician with a background in computer science whom I otherwise admire.

Congressman Lieu on June 20, 2023 said in an interview on MSNBC’s “Morning Joe”:

“I’m not even sure we would know what we’re regulating at this point because it’s moving so quickly. . . . And so, some of these harms may in fact happen, but maybe they don’t happen. Or maybe we see some new harm.”

This sounds like dangerous procrastination to me. Ai is not going to slow down and stop changing so you can leisurely study it more. The danger is real and it’s happening now. Congress needs to start actually doing something. If need be, we can always revise or enact more laws later. Remember, perfect is the enemy of good. Senator Schumer’s technical advisors and speechwriters have it right. We need to convene the expert Forums now and get down to the details of legislation that implements the SAFE ideas.

All of the goals of the SAFE policy are good, but in my view one goal not emphasized enough by SAFE is the need for the government to ensure the availability of free, unbiased education for all. Retraining and quality GPT-based tutoring must be open-sourced and freely available.

Another point that should be emphasized is fairness in the distribution of new wealth that will arise from Ai. The recent McKinsey Report predicts a $4.4 trillion increase in the economy from generative Ai. See: McKinsey Predicts Generative AI Will Create More Employment and Add 4.4 Trillion Dollars to the Economy. This new wealth must be more fairly distributed than in the last economic boom triggered by the Internet and globalism.

Conclusion

Senator Schumer’s next step to advance the proposed regulation is to refine the SAFE Innovation plan and build consensus. He is asking for help from “creators, innovators, and experts in the field.” That means the politically well-connected or famous. The Senator said that he will soon “invite top AI experts to come to Congress and convene a series of first ever AI insight forums for a new and unique approach to developing AI legislation.” Senator Schumer Speech, 6/21/23. If you have friends in high places and get an invite to a forum, I hope you will go and be heard.

Although I like to be in the arena, I have no political contacts, nor fame; I have never been one to cultivate contacts and play politics. I am far too outspoken and idealistic for that. I am just a Florida native living in the dangerous backwoods of the country, far from the D.C., N.Y. and Silicon Valley arenas. Still, I will keep reporting on the government activities. I hope to persuade as many decision makers as possible, through these writings, right-brain graphics, and occasional talks, to take action now.

We need government to protect us from the abusers, those who would exploit, and already are exploiting, Ai for their personal goals and not the greater good. We need to have an intelligent blueprint for regulation, one that still encourages innovation and distribution of these powerful new tools. The SAFE Innovation proposal looks like a good start.

Copyright Ralph Losey 2023 – ALL RIGHTS RESERVED – (May also be Published on EDRM.net and JDSupra.com with permission.)