Cyber Risk Management Chronicles, Episode X

Feeling seen, but maybe too seen

Stepping off the plane this past August after a long international flight, the last thing I wanted was a lengthy wait at US Customs, the final sentinel between me and a good night’s sleep. I had sat at the very back of the plane, and so even though I had the privilege of Global Entry to reduce my wait time, there was still a fairly daunting line that had developed by the time I arrived to make my way back into the country. To my surprise, however, it couldn’t have been easier.

Unlike returning from previous trips, the line for Global Entry stayed in perpetual motion, with passengers swiftly gliding through the process, without even having the chance to stand still. I quickly reached an open kiosk, where the system prompted me to center my face for a photo. Complying, I only waited seconds before being directed to proceed to the immigration officer.

As I approached the immigration officer’s desk, he barely glanced up from his computer before asking, “Griffith?”

I simply nodded, astounded that they had already identified me.

“You’re good to go. Have a nice day,” he said, and I walked away, left to ponder the experience on the Uber ride home. The entire process had taken me less than two minutes, and all it required was my face, thanks to the apparent marvel of biometrics.

Do the benefits of biometrics outweigh the risks?

This experience both excited and concerned me. While it undeniably enhanced my travel experience, it also left me contemplating the implications of such technology for personal privacy and data security. If biometrics are this convenient and accurate, how can they be used for other applications? What will the future of biometric technology entail, and what other data can be used? Will it bring about new privacy and security challenges that we need to address?

In a world where security threats are constantly evolving, we’re only going to see more frequent adoption of biometrics for authentication as well as for other uses.[1] The appeal of biometrics lies in their user-friendliness and potential for reliability and accuracy. Together with AI, this technology can be seamlessly integrated into various applications to augment current authentication policies, providing robust security that is significantly more resistant to spoofing or fraudulent attempts. But is enough being done to help ensure that this doesn’t come at the cost of users’ privacy and personal safety?

Biometrics as a means of authentication

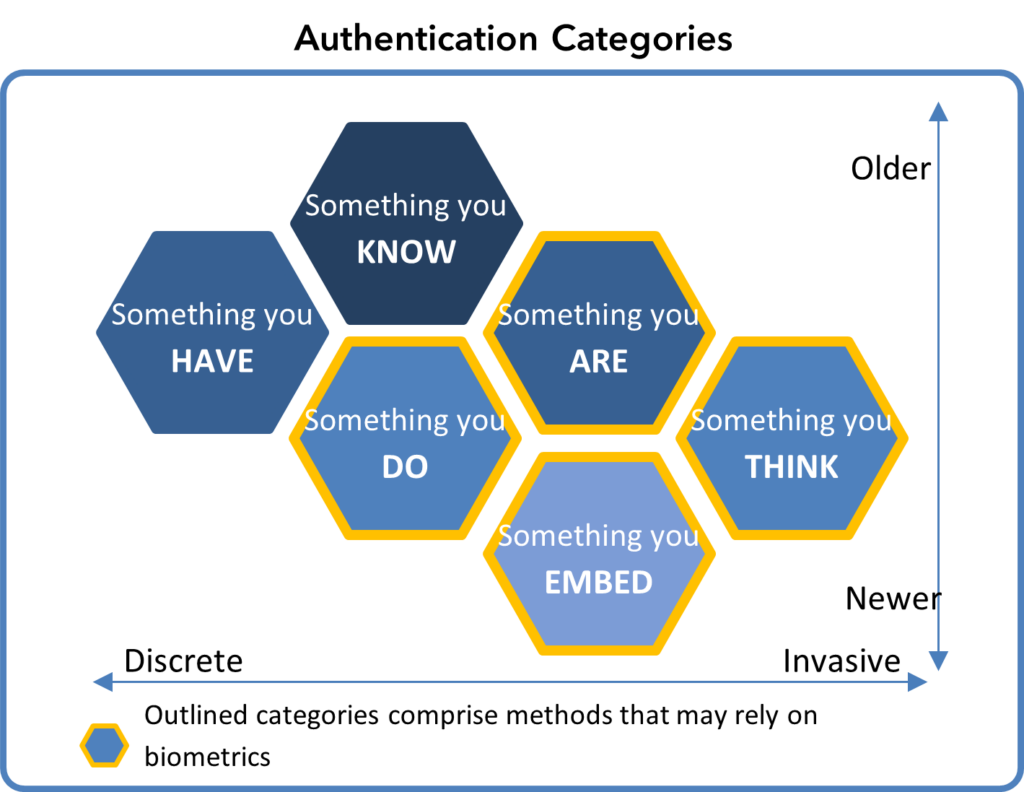

Let’s look at how security authentication works and the role that biometrics can play.

Authentication methods have come a long way from relying solely on passwords. Passwords on their own are vulnerable because they can be stolen or cracked without detection. Implementing multiple forms of identification from diverse categories significantly bolsters security. While someone may have access to one category of identification, they are unlikely to possess the others, making unauthorized access much more difficult. Multifactor authentication, which involves two or more forms of identification from different categories, has become a necessity.

Broadly speaking, biometrics can be defined as measurable behavioral and physical characteristics, along with detectable signals and properties, that can be attributed to a specific individual. One of the reasons biometrics is so appealing to the security world is that it may offer new ways to supplant traditional, sometimes less user-friendly, means of authentication.

Common forms of authentication typically involve one factor, or a combination of two factors, from among these three categories:

- Something you know: Think passwords and passphrases, PINs, or challenge questions such as your mother’s maiden name.

- Something you have: This could be your laptop or your smartphone, a physical token such as a key fob, or an encoded ID card.

- Something you are: Originally this included unique physical features such as your fingerprints, face shape, iris patterns, and even handwriting or signature. As technology has progressed, this category has expanded to include walking gait, typing patterns, and speaking style. This is why deepfakes, which can imitate a person’s face or voice, can be so risky.[2]
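The idea that multifactor authentication needs factors from different categories, not just more factors, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `FactorCategory`, `VerifiedFactor`, and `satisfies_mfa` are hypothetical and do not come from any particular product or standard.

```python
from dataclasses import dataclass
from enum import Enum


class FactorCategory(Enum):
    KNOWLEDGE = "something you know"   # password, PIN, challenge question
    POSSESSION = "something you have"  # smartphone, key fob, ID card
    INHERENCE = "something you are"    # fingerprint, face, iris


@dataclass(frozen=True)
class VerifiedFactor:
    """Hypothetical record of one factor the user has already passed."""
    name: str
    category: FactorCategory


def satisfies_mfa(verified: list[VerifiedFactor], required_categories: int = 2) -> bool:
    """Return True when the verified factors span enough distinct categories."""
    distinct = {factor.category for factor in verified}
    return len(distinct) >= required_categories


# Two knowledge factors still count as a single category.
password_and_pin = [
    VerifiedFactor("password", FactorCategory.KNOWLEDGE),
    VerifiedFactor("pin", FactorCategory.KNOWLEDGE),
]
# A password plus a face scan spans two categories.
password_and_face = [
    VerifiedFactor("password", FactorCategory.KNOWLEDGE),
    VerifiedFactor("face scan", FactorCategory.INHERENCE),
]

print(satisfies_mfa(password_and_pin))   # False
print(satisfies_mfa(password_and_face))  # True
```

Counting categories rather than factors reflects the point made above: an attacker who has compromised one category is unlikely to hold the others.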

As cyberattacks have become more sophisticated, the means of authentication have had to advance as well, expanding into innovative techniques including:

- Something you do: Modern methods of authentication often consider your behavioral patterns, daily routines, places you visit, and your associations. That’s why you might get an alert when you try logging on from a new location or at an odd hour.

- Something you embed: This could include subcutaneously implanted RFID chips or brain implants; even the device ID on a remotely monitored heart device could fall into this category.[3]

- Something you think: Behavioral analysis may eventually move beyond something you do, so that we end up being authenticated through something we think, based on analysis of biofeedback, MRIs, and brainwave activity.[4]
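The "something you do" alert described above, where a login from a new location or at an odd hour gets flagged, might look roughly like the following. It is a minimal sketch, not how any real service works: `LoginProfile`, `login_alerts`, and the default hours are assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class LoginProfile:
    """Hypothetical record of where and when a user normally signs in."""
    known_locations: set[str] = field(default_factory=set)
    usual_hours: range = range(7, 23)  # 07:00 through 22:59, an assumed default


def login_alerts(profile: LoginProfile, location: str, hour: int) -> list[str]:
    """Return the reasons, if any, that a login looks out of pattern."""
    alerts = []
    if location not in profile.known_locations:
        alerts.append("new location")
    if hour not in profile.usual_hours:
        alerts.append("unusual hour")
    return alerts


profile = LoginProfile(known_locations={"Chicago"})
print(login_alerts(profile, "Chicago", 9))  # []
print(login_alerts(profile, "Lisbon", 3))   # ['new location', 'unusual hour']
```

A real system would likely weigh many more signals and ask for an additional factor rather than simply listing reasons, but the principle of comparing a login against an established pattern is the same.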

Biometrics privacy risks can’t be overlooked

The marvel that streamlined my customs experience was none other than facial recognition technology. The system captured a picture of my face and compared it to the repository of photographic data that U.S. Customs and Border Protection held on me from my passport and Global Entry enrollment process[5]. The process was incredibly efficient, making it convenient for travelers like me to come through quickly and without the need to lift a finger. However, as I delved deeper into the intricacies of this system, it raised pressing questions.

For one, there was no apparent way to opt out of this experience. Travelers were subtly guided towards a kiosk that requested a photo, leaving no visible alternatives. This seems to be a common trend across every US airport where this technology is being implemented; as the New York Times recently found, at John F. Kennedy International Airport, the ability to request a traditional inspection process was outlined 16 lines down on a piece of paper taped to the side of the customs booth[6]. It is doubtful whether this counts as sufficient notice, or whether implicit consent is adequate, in an environment where you’re already in a rush and trying to get through the process as swiftly as possible. Besides, even if travelers do read the fine print, there’s still pressure not to object for fear of appearing more suspicious and being subjected to further, more intrusive security checks.

BIPOC (Black, Indigenous and People of Color) communities have been particularly impacted by this technology’s shortcomings. Facial recognition technology has been shown to be biased and has a harder time reading the faces of people of color.[7] In fact, federal testing has shown that the majority of facial recognition algorithms perform poorly at identifying people who are not white males[8]. This is a problem for any project that uses facial recognition, but it is particularly troubling when it comes to government usage, considering how significant the consequences can be for someone’s life.

Another issue is control over personal information, such as who can access this data and how it’s monitored and protected. This brings us to the core concern of biometrics: privacy. Biometric data, by its very nature, is deeply personal. It encompasses what makes each of us unique—our faces, our fingerprints, our irises, and much more. As such, it demands stringent protection and careful consideration when implemented, balancing the advantages of convenience with the preservation of personal privacy.

During U.S. Customs and Border Protection’s pilot of facial recognition technology in 2019, the agency experienced multiple major breaches of the data it had been collecting[9]. Around 200,000 images of travelers were compromised through government subcontractors using unencrypted devices, which violated DHS security and privacy protocols and contributed to a major cyberattack and breach.

AI both accelerates and threatens the adoption of biometrics

AI-driven algorithms have significantly improved the accuracy of biometric systems.[10] For analysis of biometric features that might change over time, AI is able to make connections that otherwise would not be possible. Faces are by no means static: they change with age, facial hair, and even, in the short term, with makeup. But these algorithms can analyze vast datasets and adapt to variations in data, ensuring reliable identification and authentication.

AI can even be used with facial recognition to authenticate someone wearing a mask, using past datasets to analyze facial structure[11]. Moreover, when it comes to fingerprint analysis, AI can play a pivotal role in correcting for skin damage, scars, or even cases where the surface area is limited, allowing accurate identification even in less-than-ideal situations.

It’s critical to note that while AI has bolstered the security of biometric systems, it also poses a very real threat. AI’s ability to generate incredibly realistic synthetic videos, soundbites, and images, or “deepfakes,” can potentially be used to deceive these systems. As AI advances, the cat-and-mouse game between biometrics and malicious actors who manipulate AI technology continues.[12]

An overview of the US legal landscape

State privacy laws, such as those in California and Colorado, require organizations to conduct a data protection impact assessment to establish risks and mitigating controls if they intend to process sensitive personal information, for which biometric data would certainly qualify. With no comprehensive federal law, much of the country still has no guaranteed protection over its personal data, and only 11 U.S. states have enacted comprehensive consumer data privacy laws[13].

In terms of biometric data specifically, the Illinois Biometric Information Privacy Act (BIPA) is the most comprehensive in the country; some other states have less inclusive laws, and others have only biometric privacy bills. BIPA prohibits selling or otherwise profiting from an individual’s biometric data. It also requires notice to individuals of the nature and purpose of the collection of biometric information, including the length of storage, type of collection, and usage. The act also imposes obligations such as retention and destruction policies for data, restrictions on what can be retained, and a restriction on the sharing of the data[14].

Taking a step further, in an effort to future-proof protection against overuse or abuse of implanted biometric tokens, California, Maryland, New Hampshire, North Dakota, Oklahoma, Wisconsin and Utah have all passed laws to prohibit any organization from requiring individuals, including employees, to undergo microchip implantation.[15]

Key biometrics risk considerations to evaluate

While the implementation of biometrics has much to offer, and its usage will only continue to grow, the risks can’t be ignored. Before making the decision to implement biometrics, a thorough evaluation is crucial. It’s critical to evaluate how significant the cybersecurity and data privacy risks are, whether they outweigh the benefits, and, of course, whether the proposed biometric methods are appropriate, well communicated and tested, compliant with laws, and socially acceptable.

The business case for biometrics should address whether the chosen method is reasonable and appropriate to address authentication needs and how the solution addresses the following considerations:

| Factors for Evaluating Biometrics | |
| --- | --- |
| Universality | Circumventable |
| Distinctiveness | Valuation |
| Permanence | Safety |
| Collectability | Discriminatory |
| Accuracy | Compliant |

- Universality: Can the technique be applied to anyone, regardless of physical differences or impairment?

- Distinctiveness: Has the characteristic been proven to be sufficiently unique for all populations?

- Permanence: Is the characteristic subject to change based on circumstances or over time?

- Collectability: How easy is it to securely record and process the data of any individual at the point of collection?

- Accuracy: Is it difficult to reliably capture and consistently evaluate an individual’s characteristics?

- Circumventable: How likely is it that the characteristic can be spoofed or bypassed?

- Valuation: If stolen or replicated, could the characteristic be used online by a threat actor?

- Safety: Could the methods used to capture or record the characteristic pose a health threat?

- Discriminatory: Could the characteristic be used to segment individuals based on populations that are protected by law from discrimination?

- Compliant: Can the technique be adopted in a manner that complies with all applicable laws, regulations, and organizational policies?
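One way to keep a business case honest against the ten factors above is to record a written answer for each and list whatever is still unanswered. The following is a minimal sketch of that bookkeeping, assuming a plain dictionary of notes; `FACTORS`, `review_gaps`, and the sample answers are hypothetical and purely illustrative.

```python
# The ten evaluation factors, in the order listed above.
FACTORS = (
    "Universality", "Distinctiveness", "Permanence", "Collectability", "Accuracy",
    "Circumventable", "Valuation", "Safety", "Discriminatory", "Compliant",
)


def review_gaps(assessment: dict[str, str]) -> list[str]:
    """Return the factors that have no written answer yet."""
    return [name for name in FACTORS if not assessment.get(name, "").strip()]


# Hypothetical, partially completed assessment for a fingerprint reader.
fingerprint_assessment = {
    "Universality": "Fallback needed for users with worn or missing fingerprints.",
    "Permanence": "Stable for most adults; re-enrollment path defined.",
    "Compliant": "   ",  # blank answers are treated as unanswered
}

print(review_gaps(fingerprint_assessment))
# ['Distinctiveness', 'Collectability', 'Accuracy', 'Circumventable',
#  'Valuation', 'Safety', 'Discriminatory', 'Compliant']
```

The sketch only checks that every question has been addressed; judging whether each answer is good enough remains a human decision.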

[1] https://www.sciencedirect.com/science/article/abs/pii/S0148296321005014

[2] https://www.isaca.org/resources/news-and-trends/isaca-now-blog/2023/the-role-of-deepfake-technology-in-the-landscape-of-misinformation-and-cybersecurity-threats

[3] https://thehill.com/opinion/technology/3817029-human-microchip-implants-take-center-stage/

[4] https://www.sciencedirect.com/science/article/pii/S0167404823004303

[5] https://www.cbp.gov/newsroom/local-media-release/ord-and-mdw-encourages-travelers-use-facial-recognition

[6] https://www.nytimes.com/2022/10/05/travel/customs-kiosks-facial-recognition.html

[7] https://www.scientificamerican.com/article/police-facial-recognition-technology-cant-tell-black-people-apart/

[8] https://pages.nist.gov/frvt/reports/11/frvt_11_report.pdf

[9] https://www.oig.dhs.gov/reports/2020/review-cbps-major-cybersecurity-incident-during-2019-biometric-pilot/oig-20-71-sep20

[10] https://ieeexplore.ieee.org/document/10212224

[11] https://www.zdnet.com/article/facial-recognition-now-algorithms-can-see-through-face-masks/

[12] https://www.economist.com/biometrics-pod

[13] https://pro.bloomberglaw.com/brief/state-privacy-legislation-tracker/

[14] https://www.reuters.com/legal/legalindustry/beware-bipa-other-biometric-laws-an-overview-2023-06-22/

[15] https://pluribusnews.com/news-and-events/human-microchipping-bills-seek-to-rein-in-practice/