[Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work.]

OpenAI’s chief scientist, Ilya Sutskever, revealed in an interview by a fellow scientist near his level, Sven Strohband, how the emergent intelligence of his neural-net AI was the surprising result of scaling, a drastic increase in the size of the compute and data. This admission of surprise and some bewilderment was echoed in another interview of the CEO and President of OpenAI, Sam Altman and Greg Brockman. They said no one really knows how or why this human-like intelligence and creativity suddenly emerged from their GPT after scaling. It is still a somewhat mysterious process, which they are attempting to understand with the help of their GPT-4. The interview was by a former member of OpenAI’s Board of Directors, who also prompted them to disclose the current attitude of the company towards regulation.

These new interviews are both on YouTube and will be shared here. The interview of Ilya Sutskever is on video, and the interview of CEO Sam Altman and President Greg Brockman is an audio podcast.

Sam Altman and Greg Brockman, Image by Ralph Losey and Midjourney

Introduction

In these two July 2023 interviews, OpenAI’s leaders all but admit that they lucked into picking the right model: artificial neural networks on a very large scale. Their impromptu answers to questions by their peers can help you to understand how and why their product is changing the world. One interview, a podcast, also provides an interesting glimpse into OpenAI’s preferred approach towards AI regulation. You really should digest these two important YouTube videos yourself, and not ask a chatbot to summarize them for you, although I hope you will take the time first to read my entirely human analysis in this blog: What AI is Making Possible (YouTube video of Sutskever) and Envisioning Our Future With AI (YouTube audio podcast).

In the 25-minute video interview of Ilya Sutskever, the chief scientist of OpenAI, you will get a good feel for the man himself, how he takes his time to think and picks his words carefully. He comes across as honest and sincere in all of his interviews. My only criticism is his almost scary serious demeanor. This young man, a former student of, and successor to, the legendary pioneer of neural networks and deep learning, Professor Geoffrey Hinton, could well be the Einstein of our day. See for yourself.

I did manage to catch a brief moment of an inner smile by Ilya in this screenshot from the video. He provides a strong contrast with the nearly always upbeat executive leadership team, who did a podcast last month for Reid Hoffman, a past member of their Board of Directors.

The interview of Ilya Sutskever was in response to excellent questions by Sven Strohband, who called the YouTube video of the interview What AI is Making Possible. Sven is a computer scientist with a Stanford PhD who is now the Managing Director of Khosla Ventures. His questions are based on extensive knowledge and experience. Moreover, his company is a competitor of OpenAI. The answers from the deep thinking of Ilya Sutskever are somewhat surprising and crystal clear.

The second interview is of both the OpenAI CEO, Sam Altman, who has often been quoted here, and the President and co-founder of OpenAI, Greg Brockman. Both are very articulate in this late July 2023 audio interview by Reid Hoffman, former OpenAI Board member, and by Aria Finger. They call this podcast episode Envisioning Our Future With AI. The episode is part of Reid Hoffman’s Possible podcast series, also found on Apple podcasts. Reid was part of the initial group raising funds for OpenAI and, until recently, was on its Board of Directors. As they say, he knows where all the skeletons are buried, and got them to open up.

This one-hour interview covers all the bases, even asking about their favorite movie (spoiler – both Sam and Greg said Her). The interview is not technical, but it is informative. Since this is an audio-only interview, it is a good one to listen to in the background, although this is made difficult by how similar Sam’s and Greg’s voices sound.

Ilya Sutskever Video Interview – What AI is Making Possible

I have watched several videos of Ilya Sutskever, and What AI is Making Possible is the best to date. It is short, only twenty-five minutes, but sweet. In all of Ilya’s interviews you cannot help but be impressed by the man’s sincerity and intellect. He is humble about his discovery and admits he was lucky, but he and his team are the ones who made it happen. They made AI real, and remarkably close to AGI, and they did it with a method that surprised most of the AI establishment and Big Tech: they used the human brain’s neural networks as a computer design. Most experts thought that approach was a dead end in AI research and would not go far. Surprise, the expert establishment was wrong and Ilya and his team were right.

Everyone was surprised, except for Geoffrey Hinton, who started the deep learning, neural-net designs. But even he must have been astonished that his former student, Ilya, made the big breakthrough by simple size scaling. Moreover, Ilya did so way before Hinton’s competing team at Google. In fact, Hinton was so surprised and alarmed by how fast and far Ilya had gone with AI that he quit Google right after GPT-4 came out. Then he began warning the world that AI like GPT-4 needed to be regulated, and fast. ‘The Godfather of A.I.’ Leaves Google and Warns of Danger Ahead (NYT, 5/5/23). These actions by Professor Hinton constitute an incredible admission. His protege, Ilya Sutskever, has to be smiling to himself from time to time after that kind of reaction to his unexpected win.

Ilya and his diverse team of scientists and engineers are the ones who made the breakthrough. They are the real heroes here, not the promoters, fundraisers and management. Sam Altman and Greg Brockman’s key insight was to hire Ilya Sutskever and give him the space and equipment needed, hundreds of millions of dollars’ worth. By listening to Ilya, you get a good sense of how surprised he was to discover that the neural network approach actually worked, that his teachers and inner voice were right beyond his dreams. His significant engineering breakthrough came by “simply” scaling the size of the neural network’s training data and computing power. Bigger was better and led to incredible intelligence. It is hard to believe, and yet, here it is. ChatGPT-4 does amazing things. Watch this interview and you will see what this means.

Greg Brockman and Sam Altman Audio Interview – Envisioning Our Future With AI

The hour-long podcast interview of Brockman and Altman, Envisioning Our Future With AI, discusses the same surprising insight about scale, but from the entrepreneurs’ perspective. Listen to the Possible podcast at 16:57 to 18:24. By hearing the same thing from Sam, you get a pretty good idea of the key insight of scale. They are not sure why it works, nobody really is, including Ilya Sutskever, but they know it works and so Sam and Greg went with it, boldly going where no one has gone before.

The information Sam Altman and Greg Brockman provide, in their consistently upbeat Silicon Valley voice, pertains to their unique insights as the visionary front men. Sam’s discussion of AI regulation is particularly interesting and starts at 18:34. Below is an excerpt, slightly edited for reading, of this portion of the podcast, starting at 19:07. (We recommend you listen to the full original podcast by Reid Hoffman.)

Question by Aria Finger. What would you call for in terms of either regulation or global governance for bringing people in?

Answer by Sam Altman. I think there’s a lot of anxiety and fear right now . . . I think people feel afraid of the rate of change right now. A lot of the updates that people at OpenAI, who work at OpenAI, have been grappling with for many years, the rest of the world is going through in a few months. And it’s very understandable to feel a lot of anxiety in that moment.

We think that moving with great caution is super important, and there’s a big regulatory role there. I don’t think a pause in the naive sense is likely to help that much. You know, we spent . . . somewhat more than six months aligning GPT-4 and safety testing it since we finished training. Taking the time on that stuff is important. But really, I think what we need to do is figure out what regulatory approach, what set of rules, what safety standards, will actually work, in the messy context of reality. And then figure out how to get that to be the sort of regulatory posture of the world. (20:32)

Lengthy Talking Question Follow-up by Reid Hoffman (former OpenAI Board member). You know, when people always focus on their fears a little bit, like Sam, you were saying earlier, they tend to say, “slow down, stop,” et cetera. And that tends to, I think, make a bunch of mistakes. One mistake is we’re kind of supercharging a bunch of industries and, you know, you want that, you want the benefit of that supercharging industry. I think that another thing is that one of the things we’ve learned with larger scale models, is we get alignment benefits. So the questions around safety and safety precautions are better in the future, in some very arguable sense, than now. So with care, with voices, with governance, with spending months in safety testing, the ultimate regulatory thing that I’ve been suggesting has been along the lines of being able to remediate the harms from your models. So if something shows up that’s particularly bad, or in close anticipation, you can change it. That’s something I’ve already seen you guys doing in a pre-regulatory framework, but obviously getting that into a more collective regulatory framework, so that preferably everywhere in the world can sign on with that, is the kind of thing that I think is a vision. Do you have anything you guys would add to that, for when people think about what should be the way the people are participating?

Answer by Sam Altman (22:04). You touched on this, but to really echo it, I think what we believe in very strongly, is that keeping the rate of change in the world relatively constant, rather than, say, go build AGI in secret and then deploy it all at once when you’re done, is much better. This idea that people relatively gradually have time to get used to this incredible new thing that is going to transform so much of the world, get a feel for it, have time to update. You know, institutions and people do not update very well overnight. They need to be part of its evolution, to provide critical feedback, to tell us when we’re doing dumb mistakes, to find the areas of great benefit and potential harm, to make our mistakes and learn our lessons when the stakes are lower than they will be in the future. Although we still would like to avoid them as much as we can, of course. And I don’t just mean we, I mean the field as a whole, sort of understanding, as with any new technology, where the tricky parts are going to be.

I give Greg a lot of credit for pushing on this, especially when it’s been hard. But I think it is The Way to make a new technology like this safe. It is messy, it is difficult, it means we have to say a lot of times, “hey, we don’t know the answer,” or, “hey, we were wrong there,” but relative to any alternative, I think this is the best way for society. It is the best way not only to get the safest outcome, but for the voices of all of society to have a chance to shape us all, rather than just the people that, you know, would work in a secret lab.

Answer by Greg Brockman (23:51). We’ve really grappled with this question over time. Like, when we started OpenAI, really thinking about how to get from where we were starting, which was kind of nothing in a lot of ways, to a safe AGI that’s deployed, that actually benefits all of humanity. How do you connect those two? How do you actually get there? I think that the plan that Sam alludes to, of you just build in secret, and then you deploy it one day, there’s a lot of people who really advocate for it and it has some nice properties. That means that – I think a lot of people look at it and say, “hey there’s a technical safety problem of making sure the AI can even be steered, and there’s a society problem. And that second one sounds really hard, but, I know technology, so I’ll just focus on this first one.” And that original plan has the property that you can do that.

But that never really sat well with me because I think you need to solve both of these problems for real, right? How do you even know that your safety process actually worked. You don’t want it to be that you get one shot, to get this thing right. I think that there’s still a lot to learn, we’re still very much in the early days here, but this process that we’ve gone through, over the past four or five years now of starting to deploy this technology and to learn, has taught us so much.

We really weren’t in a position three, four years ago, to patch issues. You know, when there was an issue with GPT-3, we would sort of patch it in the way that GPT-3 was deployed, with filters, with non-model level interventions. Now we’re starting to mature from that, we’re actually able to do model level interventions. It is definitely the case that GPT-4 itself is really critical in all of our safety pipelines. Being able to understand what’s coming out of the model in an automated fashion, GPT-4 does an excellent job at this kind of thing. There’s a lot that we are learning and this process of doing iterative deployment has been really critical to that. (25:48)

Excerpt from Envisioning Our Future With AI (slight editing for clarity), from 19:07 to 25:48, “Possible” podcast interview of Sam Altman and Greg Brockman by Reid Hoffman and Aria Finger.

Conclusion

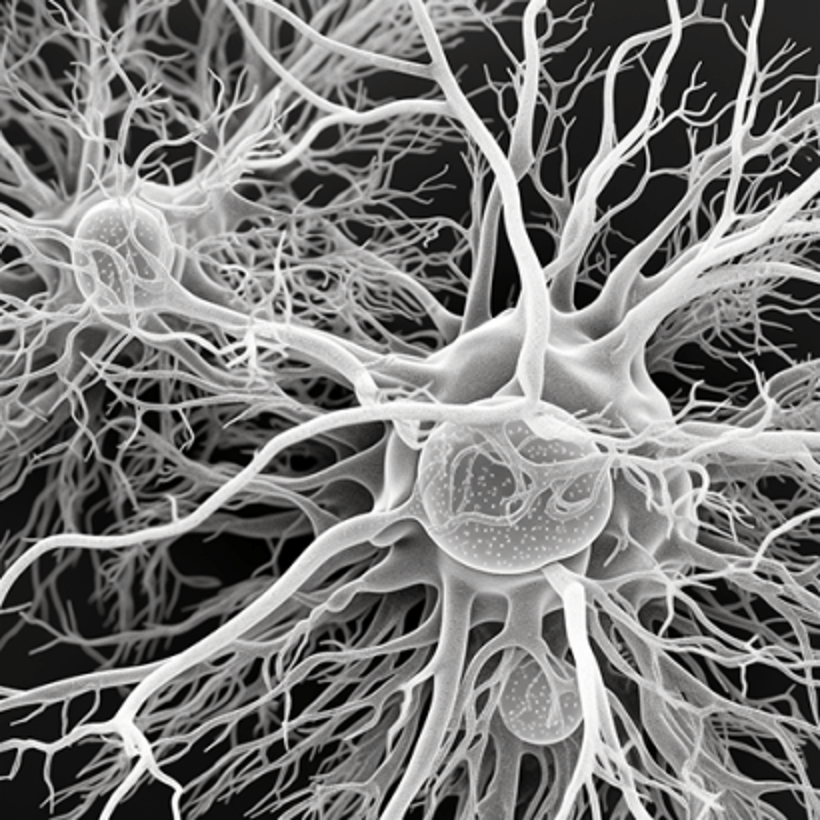

Scaling the size of data in the LLM, and scaling the size of the compute, the amount of processing power put into the neural network, is the surprising basis of OpenAI’s breakthrough with GPT-4. The increase in scale made the AI work almost as well as the human brain. Size itself somehow led to the magic breakthrough in machine learning, a breakthrough that no one, as yet, quite understands, not even Ilya Sutskever. Bigger was better. The AI network is still not as large as the human brain’s neural net, not even close, but it is much faster and, like us, can learn on its own. It does so in its own way, taking advantage of its speed and iterative processes.

Large-scale generative AI now shows every indication of intelligent thought and creativity, like a living human brain. Super-intelligence is not here yet, but hearing OpenAI and others talk, it could be coming soon. It may seem like a creature when it comes, but remember it is still just a tool, even though it is a tool with intelligence greater than our own. Don’t worship it, but don’t kill it either – Trust but Verify. It can bring us great good.

Verification requires reasonable regulations. The breakthrough in AI caused by scaling has impacted the attitude of OpenAI executives and others towards current AI regulation. As these interviews revealed, they want to get input and feedback from the public, even messy critical input and complaints. This input from hundreds of millions of users provides information needed for revisions to the software. It allows the software to improve itself. The interviews revealed that GPT-4 is already doing that. Think about that.

OpenAI does not want to be forced to work in secret and then have super-intelligent AGI software suddenly escape and stun the world. No one wants secret labs in a foreign dictatorship to do that either (except, of course, the actual and would-be despots). The world needs a few years of constant but manageable change to get ready for the Singularity. Humans and our institutions can adapt, but we need some time. The AI tech companies need a few years to make course corrections and to regulate without stopping innovation. For more on these competing goals, and ideas on how to balance them, see the newly restated Intro and Mission Statement of AI-Ethics.com and related information at the AI Ethics web.

Balance is the way, and messy ad hoc processes, much like common law adjudication, seem to be the best method to walk that path, to find the right balance. At least, as this interview of Altman and Brockman revealed, that is the conclusion that OpenAI’s management has reached. There may be a bias here, but this process, which is very familiar to all attorneys and judges, seems like a good approach. This solution to AI also means that the place and role of attorneys will remain important for many years to come. This is a trial-and-error, malleable, practice approach to regulation, a method that all litigation attorneys and judges in common law jurisdictions are very familiar with. That is a pleasant surprise.

Ralph Losey Copyright 2023 – ALL RIGHTS RESERVED (does not include the quoted excerpts, nor the YouTube videos and podcast content) — (Published on edrm.net and jdsupra.com with permission.)

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.