[Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work.]

Extensive Turing tests by a team of respected scientists have shown that “chatbots’ behaviors tend to be more cooperative and altruistic than the median human, including being more trusting, generous, and reciprocating.”

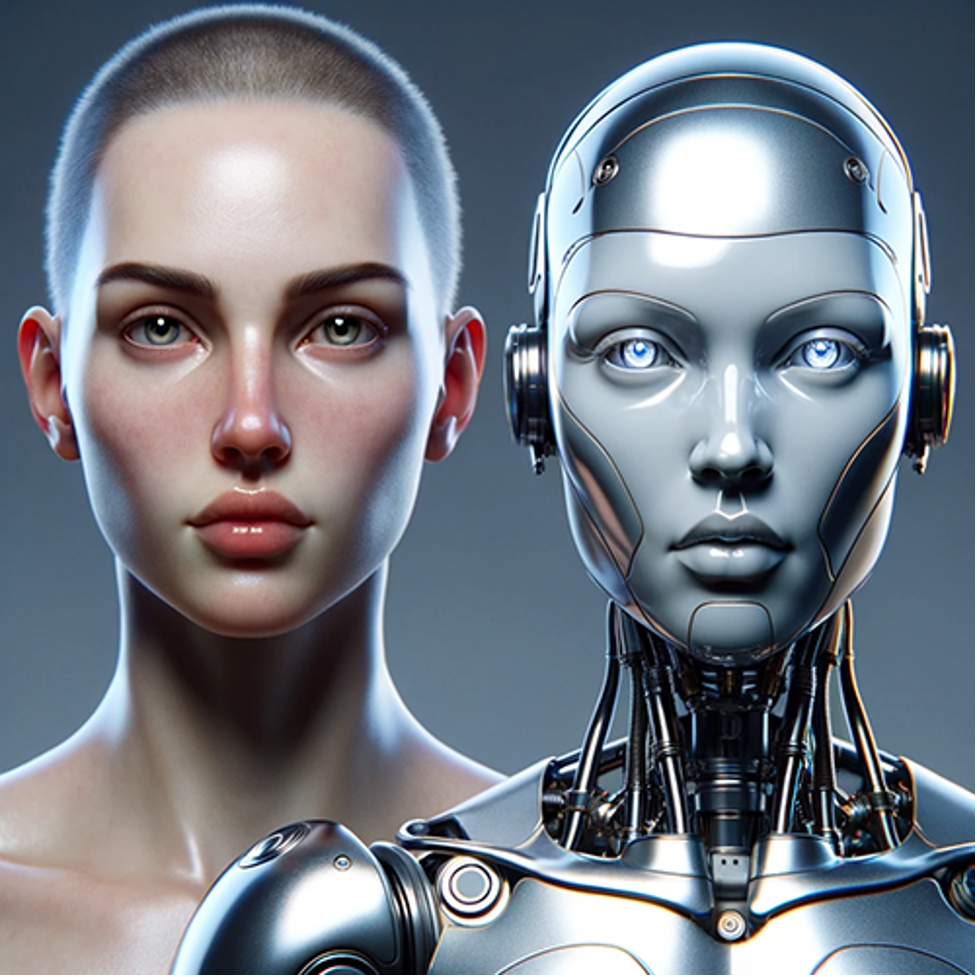

The article quoted above and featured here is by Qiaozhu Mei, Yutong Xie, Walter Yuan, and Matthew O. Jackson, A Turing test of whether AI chatbots are behaviorally similar to humans (PNAS Research Article, February 22, 2024). This is a significant article and the full text can be found here. Hopefully, the research explained here will begin to counter the widespread fear-mongering now underway by the media and others. Many people are afraid of AI, too fearful to even try it. Although some concern is appropriate, the fear is misplaced. The new generative AIs are a lot nicer and more trustworthy than most people. As some say, they are more human than human. More human than human: LLM-generated narratives outperform human-LLM interleaved narratives (ACM, 6/19/23).

Unlike some articles on AI studies, this paper is based on solid science

There is a lot of junk science and pseudo-scientific articles in circulation now. You have to be very careful about what you rely on. This article and the research behind it are of high caliber, which is why I recommend it. The lead author is a highly respected Stanford Professor, Matthew O. Jackson, Department of Economics, Stanford University. He was recently named a fellow of the American Association for the Advancement of Science. His website with information links can be found here. Matthew O. Jackson is the William D. Eberle Professor of Economics at Stanford University. He is also an external faculty member of the Santa Fe Institute. Professor Jackson was at Northwestern University and Caltech before joining Stanford, and received his BA from Princeton University in 1984 and PhD from Stanford in 1988.

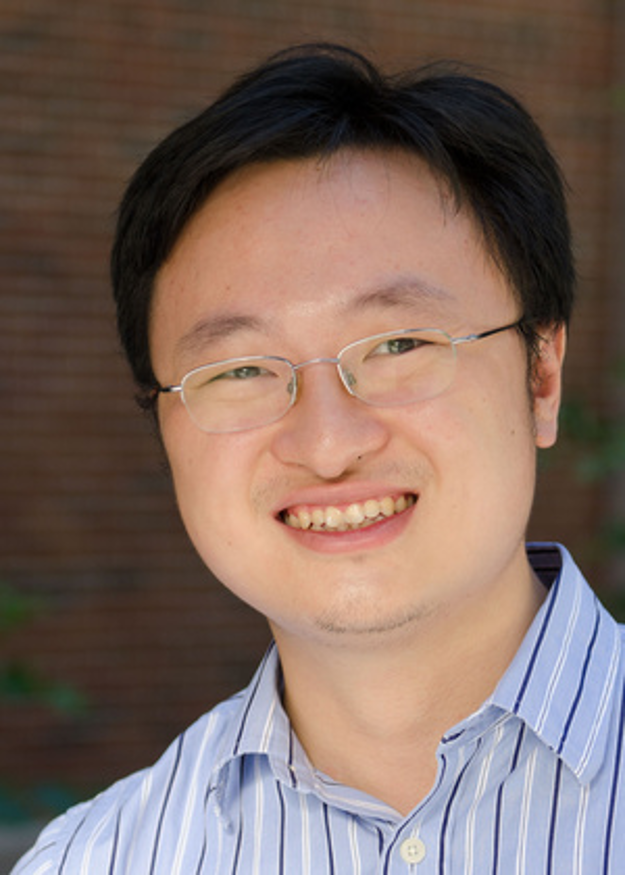

The co-authors are all from the School of Information, University of Michigan, under the leadership of Professor Qiaozhu Mei. He is a Professor of Information at the School of Information and Professor of Electrical Engineering and Computer Science, College of Engineering, at the University of Michigan. He is the founding director of the Master of Applied Data Science program at Michigan. Here is Qiaozhu Mei’s personal website, with information links. Professor Mei received his PhD degree from the Department of Computer Science at the University of Illinois at Urbana-Champaign and his Bachelor’s degree from Peking University.

The other two authors are Yutong Xie and Walter Yuan, both PhD candidates at Michigan. The article was submitted by Professor Jackson to PNAS on August 12, 2023; accepted January 4, 2024; and reviewed by Ming Hsu, Juanjuan Meng, and Arno Riedl.

Significance and Abstract of the Article

Here is how the authors of this research article on Turing tests and AI chatbot behavior describe its significance.

As AI interacts with humans on an increasing array of tasks, it is important to understand how it behaves. Since much of AI programming is proprietary, developing methods of assessing AI by observing its behaviors is essential. We develop a Turing test to assess the behavioral and personality traits exhibited by AI. Beyond administering a personality test, we have ChatGPT variants play games that are benchmarks for assessing traits: trust, fairness, risk-aversion, altruism, and cooperation. Their behaviors fall within the distribution of behaviors of humans and exhibit patterns consistent with learning. When deviating from mean and modal human behaviors, they are more cooperative and altruistic. This is a step in developing assessments of AI as it increasingly influences human experiences.

Mei Q, Xie Y, Yuan W, Jackson M (2024) A Turing test of whether AI chatbots are behaviorally similar to humans. PNAS Volume 121(Number 9).

The authors, of course, also prepared an Abstract of the article. It provides a good overview of their experiment (emphasis added).

We administer a Turing test to AI chatbots. We examine how chatbots behave in a suite of classic behavioral games that are designed to elicit characteristics such as trust, fairness, risk-aversion, cooperation, etc., as well as how they respond to a traditional Big-5 psychological survey that measures personality traits. ChatGPT-4 exhibits behavioral and personality traits that are statistically indistinguishable from a random human from tens of thousands of human subjects from more than 50 countries. Chatbots also modify their behavior based on previous experience and contexts “as if” they were learning from the interactions and change their behavior in response to different framings of the same strategic situation. Their behaviors are often distinct from average and modal human behaviors, in which case they tend to behave on the more altruistic and cooperative end of the distribution. We estimate that they act as if they are maximizing an average of their own and partner’s payoffs.

A Turing test of whether AI chatbots are behaviorally similar to humans

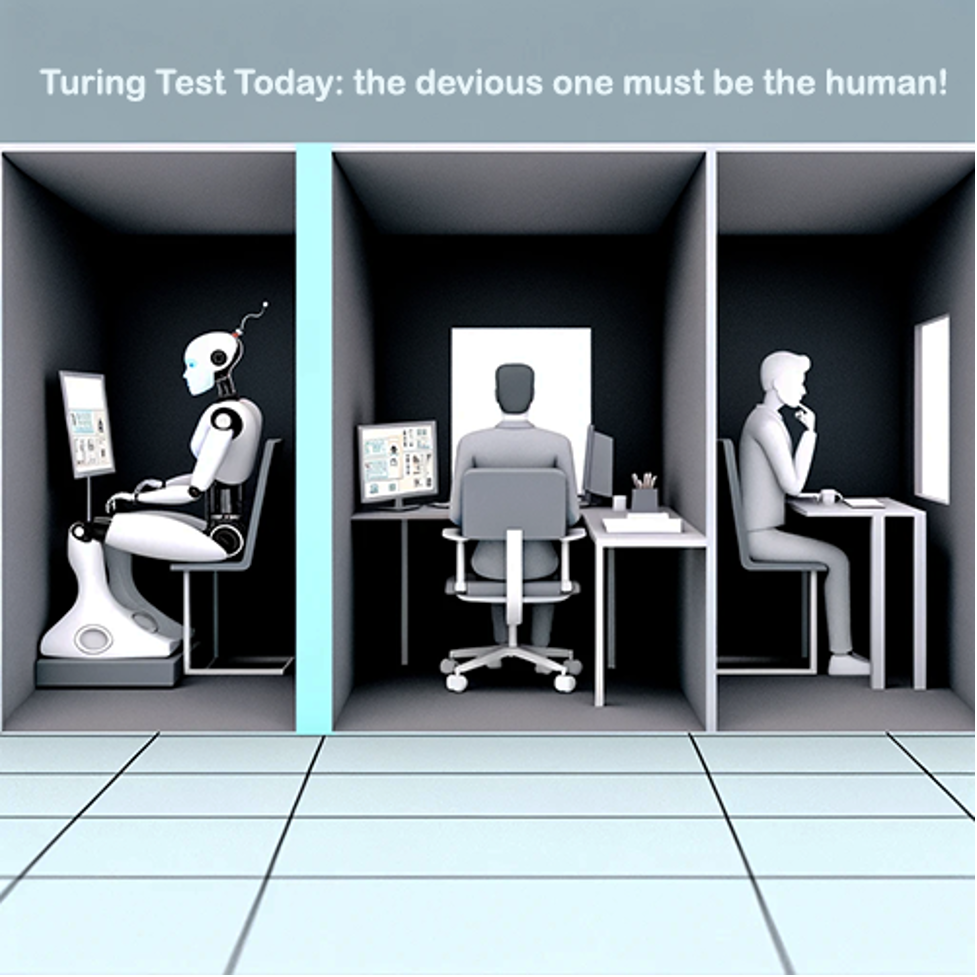

Background on The Turing Test

The Turing Test was first proposed by Alan Turing in 1950 in his now famous article, “Computing Machinery and Intelligence.” Turing’s paper considered the question, “Can machines think?” Turing said that because the words “think” and “machine” cannot be clearly defined, we should “replace the question by another, which is closely related to it and is expressed in relatively unambiguous words.” He explained the better question in terms of what he called the “Imitation Game.” The game involves three participants in isolated rooms: a computer (which is being tested), a human, and a (human) judge. The judge can chat with both the human and the computer by typing into a terminal. Both the computer and the competing human try to convince the judge that they are the human. If the judge cannot consistently tell which is which, then the computer wins the game.

By changing the question of whether a computer thinks into whether a computer can win the Imitation Game, Turing dodges the difficult, many argue impossible, philosophical problem of pre-defining the verb “to think.” Instead, the Turing Test focuses on the performance capacities that being able to think makes possible. Scientists have been playing with the Turing Test – Imitation Game ever since.

Turing Test Seventy-Five Years After Its Proposal

The scientists in the latest article go way beyond the testing of seventy-five years ago and actually administer tests assessing the AI’s behavioral tendencies and “personality.” In their words:

[W]e ask variations of ChatGPT to answer psychological survey questions and play a suite of interactive games that have become standards in assessing behavioral tendencies, and for which we have extensive human subject data. . . . Each game is designed to reveal different behavioral tendencies and traits, such as cooperation, trust, reciprocity, altruism, spite, fairness, strategic thinking, and risk aversion. The personality profile survey and the behavioral games are complementary as one measures personality traits and the other behavioral tendencies, which are distinct concepts; e.g., agreeableness is distinct from a tendency to cooperate. . . .

In line with Turing’s suggested test, we are the human interrogators who compare the ChatGPTs’ choices to the choices of tens of thousands of humans who faced the same surveys and game instructions. We say an AI passes the Turing test if its responses cannot be statistically distinguished from randomly selected human responses.

A Turing test of whether AI chatbots are behaviorally similar to humans
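For readers who want to see what "cannot be statistically distinguished from randomly selected human responses" could look like in practice, here is a minimal Python sketch. It is my own illustration, not the authors' code, and it makes several assumptions: the function name `human_likeness_rates` is hypothetical, the dictator-game numbers are invented sample data, and the comparison rule (asking which of two randomly drawn choices is more common among humans) is a simplification of the statistical analysis in the paper.

```python
import random
from collections import Counter


def human_likeness_rates(human_choices, ai_choices, trials=10_000, seed=0):
    """Repeatedly draw one AI choice and one human choice at random and ask
    which of the two is more common in the human data.

    Returns the share of trials in which the AI's choice was more typical
    of humans, equally typical, or less typical.
    """
    rng = random.Random(seed)          # fixed seed so runs are repeatable
    freq = Counter(human_choices)      # how often each choice appears among humans
    wins = ties = losses = 0
    for _ in range(trials):
        ai_pick = rng.choice(ai_choices)
        human_pick = rng.choice(human_choices)
        # Compare raw counts; a choice no human ever made has count 0.
        if freq[ai_pick] > freq[human_pick]:
            wins += 1
        elif freq[ai_pick] == freq[human_pick]:
            ties += 1
        else:
            losses += 1
    return {
        "ai_more_typical": wins / trials,
        "tie": ties / trials,
        "human_more_typical": losses / trials,
    }


if __name__ == "__main__":
    # Invented data: amounts (out of 100) given away in a dictator game.
    humans = [0] * 30 + [20] * 20 + [50] * 40 + [100] * 10
    chatbot = [50] * 8 + [40] * 2
    print(human_likeness_rates(humans, chatbot))
```

Under this simplified rule, a chatbot whose picks are "more typical" about as often as "less typical" would be hard to tell apart from a randomly chosen person, which is the spirit of the pass criterion quoted above.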

Results of the Turing Test on ChatGPT4

Professors Jackson and Mei, and their students, found that GPT3.5 flunked the Turing test based on their sophisticated analysis, but GPT4.0 passed it with flying colors. This finding that humans and computers were indistinguishable is not too surprising for humans using ChatGPTs every day, but the startling part was the finding that ChatGPT4.0 was better than the humans tested: more cooperative and more altruistic. So much for scary, super-smart AI. They are nicer than us; well, at least ChatGPT4 is. It seems like the best way now to tell GPT4 from humans is to measure ethical standards. To quote a famous Pogo meme, “We have met the enemy and he is us.” For now at least, we should fear our fellow humans, not generative AIs.

Here is how Professors Jackson and Mei explained their ethical AI finding.

The behaviors are generally indistinguishable, and ChatGPT-4 actually outperforms humans on average . . . When they do differ, the chatbots’ behaviors tend to be more cooperative and altruistic than the median human, including being more trusting, generous, and reciprocating. . . .

ChatGPT’s decisions are consistent with some forms of altruism, fairness, empathy, and reciprocity rather than maximization of its personal payoff. . . . These findings are indicative of ChatGPT-4’s increased level of altruism and cooperation compared to the human player distribution.

A Turing test of whether AI chatbots are behaviorally similar to humans

So what does this all mean? Is ChatGPT4 capable of thought or not? The esteemed Professors here do not completely dodge that question like Turing. They conclude, as the data dictates, that AI is better than us, “more human than humans.” Here are their words (emphasis added).

We have found that AI and human behavior are remarkably similar. Moreover, not only does AI’s behavior sit within the human subject distribution in most games and questions, but it also exhibits signs of human-like complex behavior such as learning and changes in behavior from role-playing. On the optimistic side, when AI deviates from human behavior, the deviations are in a positive direction: acting as if it is more altruistic and cooperative. This may make AI well-suited for roles necessitating negotiation, dispute resolution, or caregiving, and may fulfill the dream of producing AI that is “more human than human.” This makes them potentially valuable in sectors such as conflict resolution, customer service, and healthcare.

A Turing test of whether AI chatbots are behaviorally similar to humans, 3. Discussion.

Conclusion

This new study not only suggests that our fears of generative AI are overblown, it shows that generative AI is perfectly suited for lawyer activities such as negotiation and dispute resolution. The same goes for caregivers like physicians and therapists.

This is a double plus for the legal profession. About half of lawyers do some kind of litigation or another, and all lawyers negotiate. That is what lawyers do. We can all learn from the skills of ChatGPT4.

The new chatbots will not kill us. They are not Terminators. They will help us. They are literally ‘more human than human,’ which I learned is also the name of a famous heavy metal song. With this study, and more like it, which I expect will be forthcoming soon, the public at large, including lawyers, should start to overcome their irrational fears. They should stop fearing generative AI and start to use it. Lawyers will especially benefit from the new partnership.

Ralph Losey 2024 Copyright. — All Rights Reserved.

Published on edrm.net with permission.

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.