[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in this article were created by Ralph Losey using AI. This article is published here with permission.]

Introduction: Beyond the Prompt Era

The legal profession is undergoing a profound shift. For decades, the integration of computing in law was incremental: word processors, databases, legal research platforms. The advent of generative AI in 2022 brought a leap forward, with tools like ChatGPT, Claude, and Gemini able to respond to natural language prompts with astonishing capabilities. Yet even these breakthroughs only marked the beginning. The next phase is where AI takes actions for you in the real world. That phase is emerging now. Once again, OpenAI led the way, releasing its experimental agentic software in January 2025. See Introducing Operator (OpenAI 1/23/25) (research preview of an agent that can use its own browser to perform tasks for you).

This new breed of generative AI does more than answer; it acts. We talk to it, and it acts for us. This is called agentic AI: a category of artificial intelligence systems capable of autonomous goal pursuit, strategic reasoning, and complex task execution across multiple steps and tools.

These systems are operational. They don’t just assist lawyers with knowledge; they act for them. Very soon they will be able to coordinate entire workflows, orchestrate multistep tasks across software environments, and even collaborate with other AI agents. See e.g. Bob Ambrogi, Thomson Reuters Teases Upcoming Release of Agentic CoCounsel AI for Legal, Capable of Complex Workflows (LawSites, 6/2/25) (agentic workflows for document drafting, employment policy generation, deposition analysis, and compliance risk assessments will be released in summer 2025). Ambrogi explains: “Unlike traditional AI assistants that require specific prompts for each task, agentic systems can understand broader objectives and determine the necessary steps to achieve them.”

This article explores what this agentic shift means for the practice of law. We’ll define agentic AI, explain how it differs from traditional systems, map its current and emerging capabilities, and examine its implications for ethics, professional responsibility, and legal education. There is no way to avoid it. Yes, there will be AI agents for lawyers, but will they have powers of attorney? And if so, what will they say?

What Is Agentic AI?

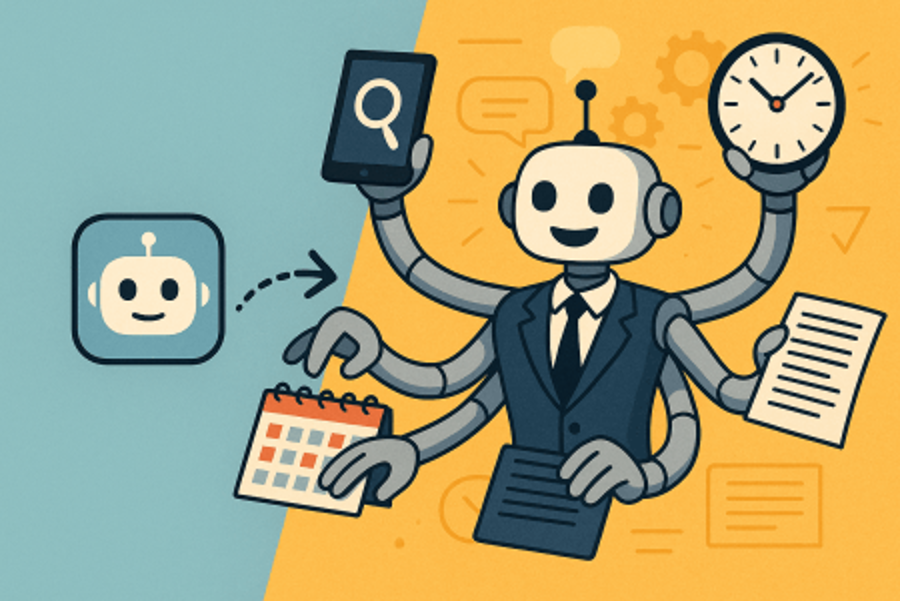

Agentic AI refers to artificial intelligence systems that are not only reactive but autonomous. That is, they can initiate action, pursue defined goals over time, and adapt to feedback and environmental changes. See e.g., Erik Pounds, What Is Agentic AI? (NVIDIA, 10/22/24) (“Agentic AI uses sophisticated reasoning and iterative planning to autonomously solve complex, multi-step problems”). While definitions vary, agentic systems are typically able to:

- Assess the task: Determine what needs to be done, and gather relevant data to understand the context.

- Plan the task: Break it into steps, gather necessary information, analyze the data to decide the best course of action, and select the necessary software tools (e.g., web search, code execution, document editing).

- Execute the task: Use knowledge and the selected tools to complete the task, such as providing information or initiating an action, and delegate subtasks to other AI agents or systems as needed.

- Learn from the task: Improve future performance, which requires memory, deep analysis, feedback, and adjustment.

Erik Pounds of NVIDIA put it this way: agentic AI uses a four-step process for problem-solving.

- Perceive: AI agents gather and process data from various sources, such as sensors, databases and digital interfaces.

- Reason: A large language model acts as the orchestrator, or reasoning engine, that understands tasks, generates solutions and coordinates specialized models for specific functions like content creation, visual processing or recommendation systems.

- Act: By integrating with external tools and software via application programming interfaces, agentic AI can quickly execute tasks based on the plans it has formulated.

- Learn: Agentic AI continuously improves through a feedback loop, or “data flywheel,” where the data generated from its interactions is fed into the system to enhance models.
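To make the four steps concrete, here is a minimal Python sketch of a perceive-reason-act-learn loop. It is an illustration under stated assumptions, not NVIDIA’s architecture: the `reason` method is a hypothetical stand-in for an LLM planner, the two tools are toy functions, and “learning” is reduced to storing outcomes in a memory list.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical toy tools; a real agent would call search APIs, document editors, etc.
TOOLS: dict[str, Callable[[str], str]] = {
    "search": lambda query: f"results for '{query}'",
    "summarize": lambda text: text[:40],  # crude "summary": first 40 characters
}

@dataclass
class Agent:
    goal: str
    memory: list = field(default_factory=list)  # (tool, outcome) pairs

    def perceive(self, environment: dict) -> str:
        # Perceive: gather new data from the environment (here, a dict lookup).
        return environment.get("new_input", "")

    def reason(self, observation: str) -> Optional[tuple]:
        # Reason: stand-in for an LLM planner. Follows a fixed plan and skips
        # any step already recorded in memory.
        done = {tool for tool, _ in self.memory}
        if "search" not in done:
            return "search", f"{self.goal} {observation}".strip()
        if "summarize" not in done:
            return "summarize", self.memory[-1][1]
        return None  # plan complete

    def act(self, tool: str, arg: str) -> str:
        # Act: execute the chosen tool.
        return TOOLS[tool](arg)

    def learn(self, tool: str, outcome: str) -> None:
        # Learn: store the outcome so later reasoning can build on it.
        self.memory.append((tool, outcome))

    def run(self, environment: dict, max_steps: int = 5) -> list:
        # Loop until the plan is complete or the step limit is reached.
        for _ in range(max_steps):
            decision = self.reason(self.perceive(environment))
            if decision is None:
                break
            tool, arg = decision
            self.learn(tool, self.act(tool, arg))
        return self.memory
```

For example, `Agent(goal="breach of contract").run({})` runs a search, summarizes the result, and stops. In a real system the model does its work inside `reason`; the memory, tool calls, and step limit around it are the kind of scaffolding a plain LLM lacks by default.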

These features contrast sharply with large language models (LLMs) like GPT-4o in their default form, which excel at generating text but generally lack long-term memory, persistent goals, or execution capability unless scaffolded or paired with external software.

Agentic systems blend language understanding with process execution. In doing so, they bridge the gap between reasoning and action—the cognitive and the operational.

Timeline: From Legal Assistants to Legal Agents

The evolution of AI in law can be divided into distinct eras:

- Pre-2010: Tools were rule-based and largely static (e.g., Westlaw, Lexis).

- 2010–2020: Predictive coding and analytics began to supplement document review.

- 2022: Generative AI became usable in practice with GPT-3.5 and early ChatGPT.

- 2024–2025: Agentic systems like AutoGPT and CoCounsel Core began performing autonomous, multi-step tasks.

Agentic systems are coming to law this summer, as Ambrogi’s report on Thomson Reuters shows. This is just what Sam Altman predicted in his January 2025 Reflections essay: “We believe that, in 2025, we may see the first AI agents ‘join the workforce’ and materially change the output of companies.” Agentic AI has already come to another major corporation, Salesforce, which has developed its own agentic software wrappers. Silvio Savarese, The Agentic AI Era: After the Dawn, Here’s What to Expect (360 Blog, 1/7/25). Salesforce recently surveyed 200 global HR leaders and reports:

HR leaders plan to redeploy nearly a quarter of their workforce in the near future as AI agents — which are capable of resolving complex issues independently — take on more routine tasks. As a result, HR leaders expect a productivity boost of 30% per employee. . . .

“We’re in the midst of a once-in-a-lifetime transformation of work with digital labor that is unlocking new levels of productivity, autonomy, and agency at a speed never before thought possible,” Scardino (CEO) said. “Every employee will need to learn new human, agent, and business skills to thrive in the digital labor revolution.”

AI’s Human Impact: How Agentic Technology Is Reshaping Work (Salesforce, 5/29/25).

This rapid shift has compressed decades of change into a few years, catching many firms off guard. The arrival of agentic functions will soon raise the impact of AI technology to tidal-wave force. Hopefully, it will leave us all smiling, more productive, and still in control. Time will tell what is on the other side of the tsunami.

Case Studies

Law firms are now experimenting with AI agents that perform iterative research, validate case law, and compile arguments. For example, Emily Colbert, senior vice president of CoCounsel, is quoted by Ambrogi in his article as saying:

With our agentic guided workflows, we go from just one single-shot task, answering one question, to actually getting to a work output.

According to Ambrogi’s excellent article, Colbert showed how lawyers will be able to initiate document creation processes, such as drafting demand letters or employment policies, through structured workflows. Id. Colbert estimates this will reduce the time spent reviewing documents, or drafting and reviewing contracts, by as much as 63%, while reducing the time spent on legal know-how tasks by 10%.

It is important to understand that know-how tasks are typically an attorney’s bread and butter, the work that justifies higher rates. By contrast, document review and related work, where they predict 63% less human time, is typically billed at lower rates and is often tedious and boring.
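The billing point can be checked with simple arithmetic. The Python sketch below applies the reported reductions (63% for review and drafting work, 10% for know-how work) to a hypothetical matter. The hours and hourly rates are invented for illustration only; they are not data from Thomson Reuters or anyone else.

```python
# Hypothetical matter: the hours and rates below are illustrative assumptions only.
TASKS = {
    # task: (hours before AI, hourly rate in USD, reported time reduction)
    "document review and contract drafting": (100.0, 250.0, 0.63),
    "legal know-how": (40.0, 600.0, 0.10),
}

def time_saved(tasks: dict) -> dict:
    """Return {task: (hours saved, billable dollars those hours represent)}."""
    result = {}
    for task, (hours, rate, reduction) in tasks.items():
        saved = hours * reduction
        result[task] = (saved, saved * rate)
    return result

for task, (hours, dollars) in time_saved(TASKS).items():
    print(f"{task}: {hours:.1f} hours saved, ${dollars:,.0f} of billable time")
```

Under these assumed figures, about 63 of the 67 hours saved come from the lower-rate review and drafting work, while the higher-rate know-how work shrinks by only 4 hours.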

Ethical Implications: Competence and Supervision

The American Bar Association’s Formal Opinion 512 (2024) emphasizes that lawyers must supervise AI as they would junior associates. Delegation does not equal abdication.

Key duties include:

- Competence in use and supervision.

- Ensuring AI output is reviewed before submission.

- Protecting client confidentiality when using cloud-based agents.

- Explaining to clients when AI is used on their matter.

With agents that act across many steps, supervision must evolve from reviewing individual outputs into system-level governance.
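One building block of system-level governance is a record of what each agent did, so a supervising lawyer can review actions after the fact, much as one reviews an associate’s time entries. The Python sketch below is a minimal, hypothetical example: an append-only audit log that timestamps every agent action, plus a helper that lists actions on a matter still awaiting human sign-off. The class and field names are illustrative assumptions, not any vendor’s API.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional

@dataclass
class AuditEntry:
    agent: str
    action: str
    matter: str
    timestamp: datetime
    reviewed_by: Optional[str] = None  # lawyer who signed off, if any

@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def record(self, agent: str, action: str, matter: str) -> AuditEntry:
        # Entries are appended, never deleted; the only later change is a sign-off.
        entry = AuditEntry(agent, action, matter, datetime.now(timezone.utc))
        self.entries.append(entry)
        return entry

    def sign_off(self, entry: AuditEntry, lawyer: str) -> None:
        # Mark an action as reviewed by a named lawyer.
        entry.reviewed_by = lawyer

    def pending_review(self, matter: str) -> list:
        # Every action on this matter that no lawyer has reviewed yet.
        return [e for e in self.entries if e.matter == matter and e.reviewed_by is None]
```

Keeping the log append-only means the record of what the agents did cannot quietly change; only the reviewer’s sign-off is added later.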

Risks: Hallucinations, Bias, and Autonomy Drift

Autonomous systems present new legal hazards:

- Hallucinations: AI can fabricate cases or statutes.

- Bias: Prejudices in training data may impact legal outcomes.

- Autonomy drift: Agents may exceed intended scope unless constrained.
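What “unless constrained” can mean in practice is sketched below in Python, under assumptions of our own: a guard checks each action an agent proposes against an allowlist of tools and a hard step budget, and halts the run when either is exceeded rather than letting the agent drift. The tool names and the `ScopeGuard` class are hypothetical.

```python
class ScopeViolation(Exception):
    """Raised when an agent proposes an action outside its mandate."""

class ScopeGuard:
    def __init__(self, allowed_tools: set, max_steps: int):
        self.allowed_tools = allowed_tools
        self.max_steps = max_steps
        self.steps_taken = 0

    def check(self, tool: str) -> None:
        # Refuse, rather than silently continue, on any out-of-scope step.
        if tool not in self.allowed_tools:
            raise ScopeViolation(f"tool '{tool}' is not authorized for this task")
        if self.steps_taken >= self.max_steps:
            raise ScopeViolation(f"step budget of {self.max_steps} exhausted")
        self.steps_taken += 1

def run_plan(plan: list, guard: ScopeGuard) -> list:
    """Execute a proposed plan step by step; return the steps actually taken."""
    completed = []
    for tool in plan:
        try:
            guard.check(tool)
        except ScopeViolation as err:
            completed.append(f"HALTED: {err}")
            break
        completed.append(tool)  # a real agent would invoke the tool here
    return completed
```

For example, a research agent allowed only `search_case_law` and `draft_memo` would be stopped the moment its plan tried to `send_email`.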

Mitigation strategies include “constitutional AI” (value-aligned training), feedback loops, and multi-agent critique systems. See: Shomit Ghose, The Next “Next Big Thing”: Agentic AI’s Opportunities and Risks (UC Berkeley Engineering, 12/19/24). This article provides a good overview of agentic AI and then discusses Agentic Vulnerabilities, including hallucination, adversarial attack, misalignment with human values, and, get this, scheming (yes, tests have revealed that AIs can sometimes be sneaky and hide things they are doing from humans). Also see: The rise of ‘AI agents’: What they are and how to manage the risks (World Economic Forum, 12/16/24).
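A multi-agent critique system can be as simple as a second agent that checks the first agent’s work before it reaches a human. The Python sketch below assumes two hypothetical components: a drafting function standing in for an LLM, and a critic that flags any citation not found in a verified set (in practice, a lookup against a citator or court database). The draft is revised until the critic finds nothing or a round limit is reached. The citations are made-up placeholders, not real cases.

```python
import re

# Placeholder "verified" citations; in practice, a citator or database lookup.
VERIFIED_CITATIONS = {"Case A v. B, 100 F.3d 1", "Case C v. D, 200 F.3d 2"}
CITATION = re.compile(r"\[([^\]]+)\]")  # assumes citations appear in [brackets]

def drafter(task: str, rejected: set) -> str:
    # Stand-in for an LLM drafter: it includes one fabricated citation
    # and drops any citation the critic has already rejected.
    candidates = ["Case A v. B, 100 F.3d 1", "Fake v. Case, 999 F.3d 9",
                  "Case C v. D, 200 F.3d 2"]
    cites = [c for c in candidates if c not in rejected]
    return f"{task}: " + " ".join(f"[{c}]" for c in cites)

def critic(draft: str) -> set:
    # Flag every citation that cannot be verified.
    return {c for c in CITATION.findall(draft) if c not in VERIFIED_CITATIONS}

def draft_with_critique(task: str, max_rounds: int = 3) -> tuple:
    rejected = set()
    draft = drafter(task, rejected)
    for _ in range(max_rounds):
        problems = critic(draft)
        if not problems:
            return draft, set()
        rejected |= problems
        draft = drafter(task, rejected)
    return draft, critic(draft)  # unresolved problems go to a human reviewer
```

The critic only catches citations that do not exist; it cannot tell whether a real case actually supports the proposition, which still requires a lawyer’s reading.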

A recent article in the Harvard Business Review offers interesting recommendations for addressing the problem. Blair Levin and Larry Downes, Can AI Agents Be Trusted? (Harvard Business Review, 5/26/25). Levin and Downes argue that personal AI agents should be treated as fiduciaries and held to legal and ethical standards that prioritize the user’s interests. (Of course, what if the user is Putin?) The article recommends a three-pronged approach:

1. create legal frameworks that establish fiduciary duty,

2. encourage market-based enforcement through tools like insurance and agent-monitoring services, and

3. design agents to keep sensitive data and decisions local to user devices.

Without clear oversight, users may hesitate to delegate meaningful authority, potentially stalling one of AI’s most promising use cases. Id.

Governance Gaps: Law Lags Far Behind

As agentic systems enter the courtroom and the back office, regulators lag behind. The excellent article by Kevin Liu and Omer Tene, The Rise of Agentic AI: From Conversation to Action (JDSupra, 5/19/25), identifies five key legal risks in AI agent development:

- Transparency and Explainability

- Bias and Discrimination

- Privacy and Data Security

- Accountability and Agency

- Agent-Agent Interactions

The first three are well known and not unique to agentic AI, but the last two are new. Accountability and agency pertain to liability for mistakes. Agent-agent interactions muddy responsibility even further when multiple agents are involved. This will be a whole new field of tort and contract law. Who is responsible for a negligent act? Did the agents create a contract?

Liu and Tene point out that there is some law in the contract area: the Uniform Electronic Transactions Act (UETA), adopted in nearly every US state. It defines an “electronic agent” as “a computer program or an electronic or other automated means used independently to initiate an action or respond to electronic records or performances in whole or in part, without review or action by an individual.”

Still, they concede this law is inadequate because UETA was designed for “relatively simple automated systems, not sophisticated AI agents that make complex judgment calls based on perceived preferences.” They also point out that even the EU regulations do not mention agentic systems. But see: Katalin Horváth, Anna Horváth, Meeting the challenge of agentic AI and the EU AI Act (Grip, 5/9/25). It goes without saying that there are no specific U.S. regulations.

Until regulations are enacted, or rules develop through case law over the years, firms must self-regulate using internal policies and best guesses as to reasonable precautions. Do you really want to give your AI agent the electronic keys to your Tesla? Your 401(k) plan? What will the powers of attorney look like?
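One way to start thinking about what an agent’s “power of attorney” might look like is to write the delegation down as a machine-checkable policy: what the agent may do, for whom, up to what dollar amount, and until when, with the principal able to revoke it at any time. The Python sketch below is purely hypothetical; it is not based on any statute or standard, and every field name is an illustrative assumption.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional

@dataclass
class AgentDelegation:
    principal: str                # the person granting authority
    agent: str                    # the AI agent receiving it
    powers: frozenset             # actions the agent may take
    spending_limit_usd: float     # per-transaction cap
    expires: datetime             # authority lapses at this time (timezone-aware)
    revoked: bool = False

    def revoke(self) -> None:
        # The principal can withdraw authority at any time.
        self.revoked = True

    def authorizes(self, action: str, amount_usd: float = 0.0,
                   now: Optional[datetime] = None) -> bool:
        # Every condition must hold: not revoked, not expired, listed power, under cap.
        now = now or datetime.now(timezone.utc)
        return (
            not self.revoked
            and now < self.expires
            and action in self.powers
            and amount_usd <= self.spending_limit_usd
        )
```

Under a policy like this, an agent allowed to pay invoices up to $500 could not sell stock from a retirement account, could not exceed the cap, and would lose all authority at expiration or revocation.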

Here is the good advice provided by Liu and Tene’s article:

As organizations deploy agentic systems, they’ll need to develop frameworks for appropriate oversight, clarify legal responsibilities, and establish boundaries for autonomous action. Users will need transparent information about what actions AI agents can take on their behalf and what data they can access. And developers will need to implement cybersecurity measures to prevent cascading system failures affecting various layers of multi agent ecosystems.

Outline of an Integration Playbook: Building Agentic Workflows

One thing we know is that firms ready to adopt agentic AI should follow a phased integration:

- Phase 1: Pilot prompt-based tools on low-risk internal tasks.

- Phase 2: Build or license custom GPT agents for defined workflows.

- Phase 3: Integrate agents via APIs and automation platforms.

Leadership buy-in, cross-disciplinary training, and iterative safety checks are essential for success in any complex technology project. Beyond that, it is too early for meaningful details.

Practical How-To Advice: Getting Started with ChatGPT Legal Tools

Agentic AI builds on foundational tools, and most lawyers first encounter AI through systems like ChatGPT, Claude, or Gemini. These are generative LLMs that simulate reasoning via language. Learning to use them effectively is the gateway to deeper AI integration. Master the basics of these tools before adding agent functions to them.

Beginner Level: Foundations and First Prompts

If you’re new to ChatGPT or similar tools, the goal is to become comfortable with the basic interface and prompt structure. Start by asking things like this:

- What is the statute of limitations for breach of contract in [your state]?

- Summarize this 3-page memo in one paragraph (upload or paste the memo).

- Draft a client-friendly explanation of [legal concept].

Use the AI for brainstorming, summarizing, and rephrasing tasks—not high-stakes analysis. Always verify its output. The AIs are still capable of hallucinating case law and other things, especially when responding to newbie prompts.

Tips for Beginners:

- Use simple, clear prompts.

- Stick to low-risk tasks.

- Try rephrasing a single question three ways to see how results vary.

- Always fact-check.

Intermediate Level: Research and Drafting with Guardrails

Once familiar with the basics, explore more structured legal work. Use AI to:

- Generate first drafts of briefs, contracts, or letters.

- Identify key arguments from opposing counsel’s filing.

- Research cases on narrow points of law (use with reliable databases).

Incorporate retrieval-augmented generation (RAG) by uploading reference texts or pointing the model to primary sources. Use legal-specific tools like CoCounsel or Lexis+ AI to integrate structured legal databases.
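The core idea of retrieval-augmented generation is simple: find the passages most relevant to the question and place them in the prompt, so the model answers from supplied sources rather than from memory. The Python sketch below shows that idea under simplifying assumptions: retrieval is plain word-overlap scoring (real systems typically use vector embeddings or a legal database’s own search), the passage IDs are hypothetical, and the finished prompt would be sent to whatever model you use. It also adds a role instruction at the top of the prompt.

```python
import re

def tokenize(text: str) -> set:
    # Lowercase words and numbers; a stand-in for real text processing.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question: str, passages: dict, k: int = 2) -> list:
    """Return the IDs of the k passages sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(passages, key=lambda pid: len(q & tokenize(passages[pid])),
                    reverse=True)
    return ranked[:k]

def build_prompt(question: str, passages: dict, k: int = 2) -> str:
    # Put only the retrieved passages in the prompt, labeled so they can be cited.
    sources = "\n".join(f"[{pid}] {passages[pid]}"
                        for pid in retrieve(question, passages, k))
    return (
        "Act as a careful legal research assistant.\n"
        "Answer ONLY from the sources below and cite them by ID. "
        "If the sources do not answer the question, say so.\n\n"
        f"SOURCES:\n{sources}\n\nQUESTION: {question}"
    )
```

Instructing the model to answer only from the supplied sources, and to say when they are silent, reduces the risk of invented citations; it does not remove the need to check them.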

Tips for Intermediate Users:

- Add context or reference documents to your prompts.

- Provide role instructions (e.g., “Act as a legal writing coach”).

- Review citations carefully for accuracy.

Advanced Level: Designing Agentic Workflows

At the advanced level, you are ready to begin using agentic AI workflows. You will be building systems, not just using them. This involves chaining prompts, defining agent behavior, and leveraging custom GPTs or multi-agent architectures.
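Prompt chaining means feeding the output of one prompt into the next, so a larger task is done as a series of smaller, checkable steps. In the Python sketch below, `call_model` is a hypothetical placeholder for whatever LLM API you use (here it simply echoes the first line of its prompt so the example runs offline), and the three step templates are illustrative assumptions.

```python
from typing import Callable

def call_model(prompt: str) -> str:
    # Placeholder for a real LLM call; echoes the prompt's first line.
    return f"<output of: {prompt.splitlines()[0]}>"

# Each step is a template; {previous} receives the prior step's output.
CHAIN = [
    "Extract the key facts from this intake note:\n{previous}",
    "Identify the legal issues raised by these facts:\n{previous}",
    "Outline a research memo addressing these issues:\n{previous}",
]

def run_chain(initial_input: str, steps: list,
              model: Callable[[str], str] = call_model) -> list:
    """Run each step in order and return every intermediate output for review."""
    outputs = []
    previous = initial_input
    for template in steps:
        previous = model(template.format(previous=previous))
        outputs.append(previous)
    return outputs
```

Returning every intermediate output, rather than only the final one, keeps each link in the chain reviewable by the supervising lawyer.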

Applications include:

- AI agents that handle document review, flag exceptions, and generate summaries.

- AutoGPT-type models that plan a legal strategy, delegate research to sub-agents, and present findings.

- Integrations through tools like Zapier, or direct API connections, that link GPT outputs with databases, calendars, or case management software.
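A planner that delegates research to sub-agents and assembles their findings follows an orchestrator-worker pattern. The Python sketch below is a minimal illustration, not how AutoGPT or any commercial product is built: the plan is derived mechanically where a real system would ask an LLM to produce it, and each sub-agent is a hypothetical function. Sub-agents run concurrently via the standard library’s `concurrent.futures`, and the orchestrator merges their results into one report for the supervising lawyer.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical sub-agents; each would wrap its own model, tools, and prompts.
def case_law_agent(issue: str) -> str:
    return f"case law notes on {issue}"

def statute_agent(issue: str) -> str:
    return f"statutory notes on {issue}"

SUB_AGENTS: dict[str, Callable[[str], str]] = {
    "case_law": case_law_agent,
    "statutes": statute_agent,
}

def plan(matter: str) -> list:
    # Stand-in for an LLM planner: one task per (issue, sub-agent) pair.
    issues = [part.strip() for part in matter.split(";") if part.strip()]
    return [(agent, issue) for issue in issues for agent in SUB_AGENTS]

def orchestrate(matter: str) -> str:
    tasks = plan(matter)
    # Run sub-agents concurrently; pool.map returns results in task order.
    with ThreadPoolExecutor(max_workers=4) as pool:
        results = list(pool.map(lambda t: SUB_AGENTS[t[0]](t[1]), tasks))
    # Present findings in plan order for human review.
    return "\n".join(f"- [{agent}] {res}" for (agent, _), res in zip(tasks, results))
```

Keeping the report in plan order, with each finding labeled by the sub-agent that produced it, makes it easier to trace any error back to its source.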

Tips for Advanced Users:

- Customize your GPTs with system prompts, tools, and memory settings.

- Use multiple agents to divide complex legal workflows.

- Maintain human oversight, with humans in the loop at every key decision point. Remember the risks discussed above.
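Keeping a human in the loop at key decision points can be built into the workflow itself rather than left to habit. In the Python sketch below, actions tagged as high-risk (filing with a court, sending external email, making a payment) are held until a named lawyer approves them, while low-risk actions proceed. The risk categories and the `approve` callback are assumptions for illustration; in practice the callback might be a review queue in a case management system.

```python
from dataclasses import dataclass
from typing import Callable, Optional

HIGH_RISK = {"file_with_court", "send_external_email", "make_payment"}  # assumed categories

@dataclass
class ProposedAction:
    kind: str
    description: str

def execute_with_oversight(
    actions: list,
    approve: Callable[[ProposedAction], Optional[str]],
) -> list:
    """Run low-risk actions directly; hold high-risk ones for a lawyer's approval.

    `approve` returns the approving lawyer's name, or None to reject the action.
    """
    log = []
    for action in actions:
        if action.kind in HIGH_RISK:
            lawyer = approve(action)
            if lawyer is None:
                log.append(f"BLOCKED: {action.description}")
                continue
            log.append(f"APPROVED by {lawyer}: {action.description}")
        else:
            log.append(f"AUTO: {action.description}")
        # a real system would carry out the action at this point
    return log
```

Recording who approved each high-risk step also leaves a trail showing that supervision actually happened.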

These practical steps can bring the promise of agentic AI into real-world practice, letting lawyers augment their capabilities with precision and control. It is also a good idea to retain an experienced professional or company to assist in this work. (No, I’m not available.)

The Future of Legal Education: Teaching Agents, Not Just Tools

Agentic AI necessitates a shift in legal education. Law schools and CLE providers must go beyond teaching AI tools as static apps and begin instructing students and practitioners on designing and supervising intelligent systems. Curricula should include pretty much everything covered in this article, with the links used as starting homework. The key elements of a law school course, or lengthy CLE, would include:

- AI ethics and law, including accountability frameworks.

- Prompt engineering and agent design.

- Simulation-based training with agentic systems in mock cases.

This shift to hands-on use of AI in law school parallels the rise of clinical legal education decades ago. Training future lawyers to work with AI as collaborators is now as essential as teaching legal writing, civil procedure and professional ethics. Maybe AI use will improve all of these key fields, including ethics. The scales of justice seem shaken now, some say broken. Maybe AI in skilled and honest hands can help restore the balance.

Here are a few AI educational tips gaining popularity today:

- Teachers should use interpersonal questioning, for instance the tried-and-proven Socratic method. Ask students questions.

- In-person oral exams are also a tradition worth preserving. Defend your thesis!

- Traditional lecturing by a human instructor is often boring. Avoid it when you can. AI tutors are better at lecturing because they can be personalized to each student’s level and have no time limits.

- Require papers that are generated by AIs. (I’d love to see, for instance, what the students and their AIs come up with on the powers of attorney question.) Then, for example, require students to list the errors they detected in the first drafts and corrected in the submitted paper. Learn and teach hybrid multimodal methods. I talk about this all the time in my blog.

Conclusion: The Duty to Lead Responsibly

Agentic AI will not wait for the legal profession to catch up. As these systems evolve from reactive tools to proactive partners, lawyers face a fork in the road: remain reactive, or lead the transformation.

Choose to be proactive. Use these technologies not just to improve productivity, but to ensure access to justice, reduce legal errors, and preserve ethical practice in the face of complexity. That means clear standards, transparent oversight, and above all, courage to shape the tools that will soon shape us. There is a lot to do, especially for the younger generations.

The last words go, as usual, to the Gemini AI podcasters who chat between themselves about the article. I always prompt them, and if there are too many mistakes, I make them do it again. They can be pretty funny at times and have some good insights, so I think you’ll find it worth your time to listen. Echoes of AI: From Prompters to Partners: The Rise of Agentic AI in Law and Professional Practice. Hear two fake podcasters talk about this article. They wrote the podcast, not me.

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.

Ralph Losey Copyright 2025. — All Rights Reserved.