[Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work.]

Bill Gates has insider knowledge on the future of generative AI. He predicts there will be ‘two more turns of the crank’ on scaling, with the next ‘big frontier’ being ‘metacognition.’ Gates describes ChatGPT’s metacognitive strategy today as trivial. Ouch!

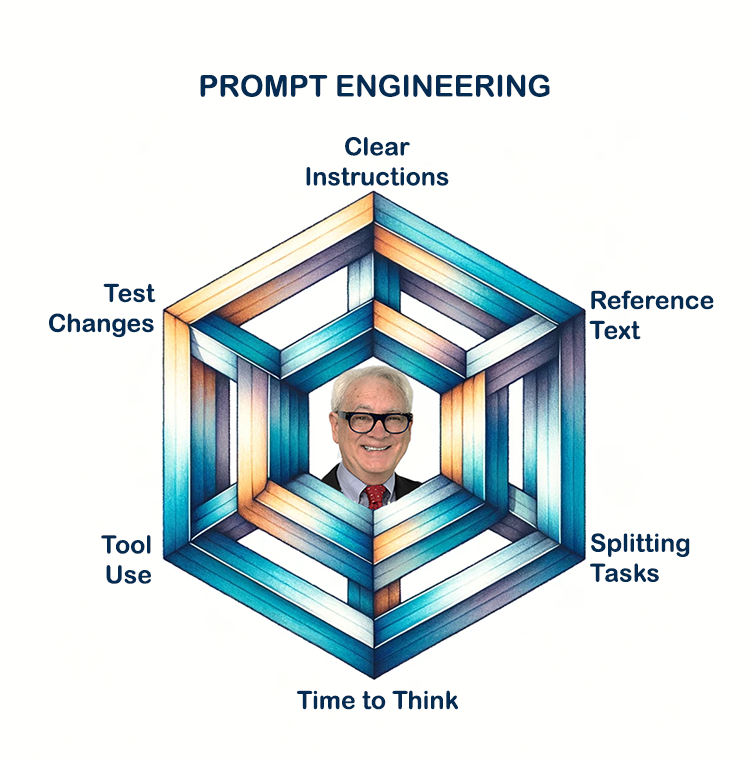

While “trivial” might sound harsh, Bill Gates’s assessment is essentially accurate. All generative AI models currently have limited metacognitive capabilities, such as reflecting on a problem, planning responses, thinking in steps, using external tools, and checking for errors. These metacognitive processes largely depend on user prompt engineering, with minimal inherent support built into the models.

AI Metacognition Now Dependent on User Skills

OpenAI has outlined six basic strategies for prompt engineering, all designed to encourage ChatGPT to engage in metacognitive responses. Although I haven’t written extensively about this yet, these strategies have been the primary focus of my studies since OpenAI released them in December 2023. My tests since then indicate that these strategies are crucial for achieving consistent, high-level results. It would be ideal if these capabilities were built into the models, but programmers have not yet accomplished this. However, some strategies can be partially integrated into the model through the use of custom GPTs. The chart below illustrates OpenAI’s six basic strategies, each of which includes multiple implementation tactics. We are currently teaching these strategies privately.

Users must now supply the metacognition needed to guide and refine the model’s responses. This means that successful use of ChatGPT requires careful prompting in each chat session, as learning does not carry over from one session to another. While custom GPTs, such as my Panel of AI Experts for Lawyers, partially address this issue, they do not fully eliminate the need for user intervention. This reliance on user-provided metacognition significantly limits the ability of generative AI to reflect, learn, and self-correct in real-time.

The metacognitive abilities of ChatGPT (and all other generative AI models) are, in Gates’s words, trivial, especially compared to those of humans. Thinking about thinking and learning is a skill most children acquire. Individuals like Bill Gates possess advanced metacognitive skills, honed through decades of complex planning, execution, testing, correction, and continuous self-education. Gates is acutely aware of the vast differences in metacognitive abilities among people, in their capacities to learn, plan, and think before speaking or acting. This insight drives his belief that AI software should not rely on users’ varying skills. Instead, he advocates for integrating high-level metacognition functions directly into the software. This is why Gates considers metacognition the ‘most interesting dimension’ of AI.

Bill Gates Interview of June 29, 2024

Bill Gates agrees with the majority of AI experts that substantial improvements can still be made by continuing to scale data and compute resources. Many believe that scaling alone will be sufficient to achieve AGI, with AI developing the necessary metacognition on its own. Gates, however, disagrees. He finds it ‘mind-blowing’ that deep learning works at all and believes that the next leap to superintelligent, accurate AI will require both scaling and the addition of metacognition programming. Gates may be right; deep learning alone may not be enough to reach AGI.

Based on Bill Gates’s June 29, 2024, interview, it appears that Microsoft and other companies are now working to program metacognition into AI models. Gates anticipates progress next year but cautions that “we won’t completely solve it for some time after that.” This timeline aligns closely with Ray Kurzweil’s prediction of achieving AGI by 2029, as outlined in his new book, The Singularity is Nearer: When We Merge with AI (book review by Ralph Losey).

Let’s hear directly from Bill Gates in the interview. In the YouTube video below or here, you’ll hear his distinctive voice and words (unaltered) in a fair use excerpt from his interview with the Next Big Idea Club, titled Bill Gates Reveals Superhuman AI Prediction. Click on the image below to see Ralph’s video excerpt on YouTube.

Here is an unaltered transcription of Bill Gates’s remarks in the video excerpt above:

We have, you know, probably two more turns of the crank on scaling, whereby accessing video data and getting very good at synthetic data, that we can scale up probably, you know, two more times. That’s not the most interesting dimension.

The most interesting dimension is what I call metacognition, where understanding how to think about a problem in a broad sense and step back and say, okay, how important is this answer? How could I check my answer? You know, what external tools would help me with this? The overall cognitive strategy is so trivial today that, you know, it’s just generating through constant computation each token in sequence. And it’s mind-blowing that that works at all. It does not step back like a human and think, okay, I’m going to write this paper and here’s what I want to cover. Here’s, okay, I’ll put some facts in. Here’s what I want to do for the summary.

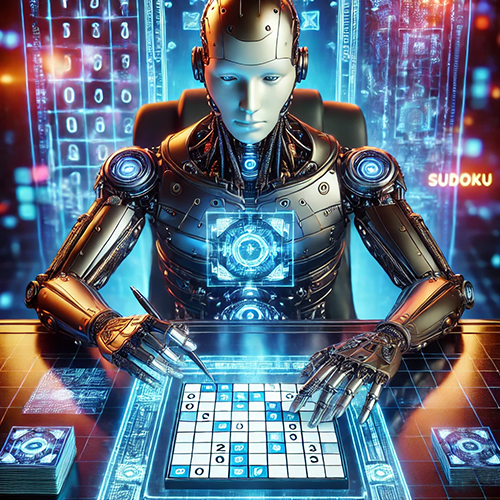

And so you see this limitation when you have a problem like various math things, like a Sudoku puzzle, where just generating that upper left-hand thing first, it causes it to be wrong on anything above a certain complexity.

So, we’re going to get the scaling benefits, but at the same time, the various actions to change the underlying reasoning algorithm from the trivial that we have today to more human-like metacognition, that’s the big frontier, that it’s a little hard to predict how quickly that’ll happen. You know, I’ve seen that we will make progress on that next year, but we won’t completely solve it for some time after that. So, you know, your genius will get to be more predictable.

Now, in certain domains, confined domains, we are getting to the point of being able to show extreme accuracy on some of the math or even some of the health-type domains, but the open-ended thing will require general breakthroughs on metacognition.
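Gates’s Sudoku point can be illustrated with a toy analogy. The sketch below is only that, an analogy under stated assumptions: it treats a solver that fills cells strictly from the upper left, always taking the first legal digit and never revisiting a choice, as a stand-in for generating one token at a time. Real language models are not Sudoku solvers. The second function adds the kind of “step back” Gates describes: it checks whether a choice leads anywhere and undoes it if not (classic backtracking search). The 4x4 puzzle and all function names are hypothetical.

```python
from typing import List, Optional

Grid = List[List[int]]  # 4x4 grid; 0 marks an empty cell


def candidates(grid: Grid, r: int, c: int) -> List[int]:
    """Digits 1-4 not already used in the cell's row, column, or 2x2 box."""
    used = set(grid[r]) | {grid[i][c] for i in range(4)}
    br, bc = 2 * (r // 2), 2 * (c // 2)
    used |= {grid[i][j] for i in range(br, br + 2) for j in range(bc, bc + 2)}
    return [d for d in range(1, 5) if d not in used]


def greedy_fill(grid: Grid) -> Optional[Grid]:
    """One pass from the upper left: take the first legal digit, never revise."""
    g = [row[:] for row in grid]
    for r in range(4):
        for c in range(4):
            if g[r][c] == 0:
                options = candidates(g, r, c)
                if not options:
                    return None  # painted into a corner, with no way to step back
                g[r][c] = options[0]
    return g


def backtrack_fill(grid: Grid) -> Optional[Grid]:
    """Try a digit, check where it leads, and undo it if it hits a dead end."""
    g = [row[:] for row in grid]

    def solve(pos: int) -> bool:
        if pos == 16:
            return True
        r, c = divmod(pos, 4)
        if g[r][c] != 0:
            return solve(pos + 1)
        for d in candidates(g, r, c):
            g[r][c] = d
            if solve(pos + 1):
                return True
            g[r][c] = 0  # step back and reconsider this cell
        return False

    return g if solve(0) else None


puzzle = [
    [0, 0, 0, 3],
    [4, 0, 0, 0],
    [0, 0, 3, 0],
    [0, 4, 0, 2],
]
print(greedy_fill(puzzle))     # None
print(backtrack_fill(puzzle))  # [[1, 2, 4, 3], [4, 3, 2, 1], [2, 1, 3, 4], [3, 4, 1, 2]]
```

On this puzzle, `greedy_fill` commits to a 1 in the second row, third column, then finds no legal digit for the next cell. `backtrack_fill` undoes that choice, tries a 2 instead, and completes the grid.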

Bill Gates believes that new data from video and synthetic generation can probably support two more turns of the crank in scaling. With companies like Nvidia using AI to accelerate chip designs, there may be even more significant advances in compute power. Even without additional data, increased compute capacity could lead to remarkable improvements in speed and complexity, with more robust synaptic-type connections being formed.

Both types of scaling up should facilitate metacognition training. Metacognition might even arise spontaneously from the new data and enhanced compute capabilities. Other unexpected abilities, not just metacognition, may emerge from exponential increases in scaling. The recent leap from GPT-3.5 to GPT-4, the models behind ChatGPT, supports this possibility.

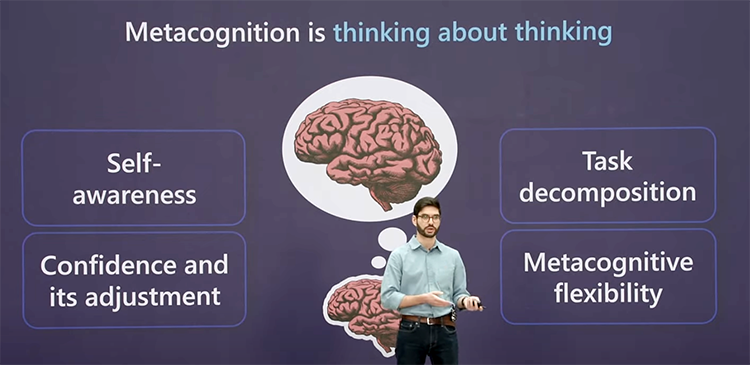

What Is Metacognition?

Metacognition, as defined by Wikipedia, is an awareness of one’s thought processes and an understanding of the patterns behind them, essentially thinking about thinking. The term was coined by Stanford Professor John H. Flavell (born 1928). See: Flavell, J.H. Metacognition and cognitive monitoring: A new area of cognitive–developmental inquiry (American Psychologist, 34(10), 906–911, 1979). Also see: Lupeanu, Doru Metacognition in 10 Points (KnowledgeOne, 10/13/22) (point 6: Metacognition “is developed in childhood but can be improved throughout our lives”). Professor Flavell was a leading American proponent of the famous Swiss child psychologist Jean Piaget (1896-1980), who pioneered work on childhood cognitive development.

Generally, metacognition involves self-regulation and reflection on how we think, learn, and solve problems. This concept encompasses two main aspects: knowledge about cognition (knowing what we know) and regulation of cognition (managing how we learn). These areas of study are crucial for training and fine-tuning generative AI, making it smarter, more accurate, and more consistent. As Gates put it, with better metacognition “your genius will get to be more predictable.”

Metacognition Applied to Generative AI

In the context of generative AI, metacognition involves the system’s ability to “understand” and “reflect” on its own processes. For example, a generative AI might use metacognitive strategies to evaluate the quality of its outputs, adjust its approach based on feedback, and improve its performance over time. Bill Gates has noted that these abilities are currently trivial, indicating significant room for improvement in AI metacognition. This area is now a focus of intense study in AI research labs, with Microsoft as a key leader. For instance, Lev Tankelevitch et al. discuss these concepts in their paper, The Metacognitive Demands and Opportunities of Generative AI (ACM, May 2024). Here is an excerpt from the Abstract of this Microsoft paper:

Drawing on research in psychology and cognitive science, and recent GenAI user studies, we illustrate how GenAI systems impose metacognitive demands on users, requiring a high degree of metacognitive monitoring and control. We propose these demands could be addressed by integrating metacognitive support strategies into GenAI systems, and by designing Gen AI systems to reduce their metacognitive demand by targeting explainability and customizability. Metacognition offers a coherent framework for understanding the usability challenges posed by GenAI, enabling us to offer research and design directions to advance human-AI interaction.

Read the paper; it’s good, and it ends with a word I had to look up: “amanuensis.” But also watch the Microsoft video on YouTube that explains the paper. The video features Lev Tankelevitch, a Microsoft researcher at Cambridge. He talks fast, but a full transcript accompanies the video. The image below is a screenshot of the video, where Lev provides a good introduction.

You can find the 24-page Microsoft paper here. As a useful exercise and to gain more insight, download the document, upload it to ChatGPT using GPT-4o (Omni), and ask for a summary in the prompt window. Follow up with any questions you have. I recently did this and received a well-written 489-word summary. While I could easily tweak it and continue the blog with the summary, potentially impressing you with my pseudo-erudition, I’d find it boring. Moreover, it seems that most blogs today are taking this shortcut. My discerning and intelligent readers deserve better than the B+ efforts of even the best AIs today. When I use AI-generated content, it is clearly marked and quoted. After all, AI is merely an amanuensis, not a real Learned Hand.

You could also go deeper into the Microsoft article, or the general subject of metacognition, by using one of my custom GPTs, such as my Panel of AI Experts for Lawyers. That will give you a truly deep dive. Or you could try my latest GPT, Panel of Experts for Everyone About Anything, which is designed for quicker, more flexible answers. This GPT told me of another interesting article on point: Jason Toy, Phil Tabor, Josh MacAdam, Metacognition is all you need? Using Introspection in Generative Agents to Improve Goal-directed Behavior (arXiv, 3/4/24) (testing of the AI includes a scenario with a zombie apocalypse).

Although scientific papers involving a zombie apocalypse are cool, maybe you are more in the mood for a laid-back summary of The Metacognitive Demands and Opportunities of Generative AI. If so, try using my GPT, The Dude Abides and Gives Advice, Man. I just tried it, and the Dude’s summary was only 345 words. It made me smile 10 times and laugh out loud twice, not that I’m hyper into metadata or anything. The Dude perspective is pretty good for metacognition, which is, after all, just another way to talk about machine mindfulness. See, e.g., Jankowski and Holas, Metacognitive model of mindfulness (Consciousness and Cognition, Volume 28, August 2014, Pages 64-80); Elisabeth Norman, Metacognition and Mindfulness: the Role of Fringe Consciousness (NLM, 2/22/16).

“What is metacognition?” I asked The Dude. He said:

Metacognition is basically thinking about thinking, man. It’s being aware of your own thought processes and being able to control them, like a mental autopilot but you’re still in the driver’s seat.

The image of a mental autopilot with you still in the driver’s seat is an analogy I had never read before. GenAI is good at creative analogies. In response to another question, The Dude listed five points of similarity between mindfulness and metacognition, and three differences. All were interesting, but I’ll just share the third difference.

Techniques: Mindfulness involves practices like meditation, deep breathing, and body scans. Metacognition involves techniques like self-questioning, thinking aloud, and using checklists to monitor your thought processes. It’s like the difference between meditating and making a game plan.

Using an unconventional cognition style, such as a custom GPT like “The Dude Abides,” often yields novel thoughts and insights. Some attribute this to the diverse cross-disciplinary perspectives inherent in big data training. Just as human brainstorming thrives on a variety of viewpoints, it makes sense that AI would benefit similarly, despite its reliance on a very different, brute-force statistical process.

How Metacognition Algorithms Could Improve Generative AI

I asked my GPT, Panel of AI Experts for Lawyers, for a discussion of “how metacognition algorithms could improve generative AI.” I selected these experts: Prompt Engineering Expert, Machine Learning Engineer, Advanced GPT User, Child Prodigy and Devil’s Advocate. Here are a few selected excerpts worthy of your attention. (For the full message, you could always ask the GPT yourself.)

Prompt Engineering Expert: By embedding reflective thinking, AI can adjust its responses based on past errors, leading to increased reliability and reduced hallucinations. This iterative self-improvement can significantly boost the overall intelligence of AI systems. … We should focus on creating prompts that encourage AI to engage in metacognitive practices. For example, prompts that ask AI to explain its reasoning or evaluate the confidence of its answers can be instrumental. This ensures that metacognitive algorithms are activated and utilized effectively.

Advanced GPT User: User feedback should be incorporated into the AI’s metacognitive processes. By allowing users to highlight errors or provide additional context, AI can learn and adjust more effectively.

Child Prodigy: Think of it like teaching AI to learn from its mistakes just like we do. When AI can reflect on what it got wrong and figure out how to fix it, it becomes smarter and more reliable. … AI should be designed to ask for help when it gets stuck, just like we do. By building in mechanisms for AI to seek clarification or additional information, we can reduce errors and improve its learning process.

Devil’s Advocate: While implementing metacognition is beneficial, we must ensure that it does not compromise the speed and efficiency of AI systems. It’s important to strike a balance between self-improvement and operational performance.
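The panel’s suggestions (explain the reasoning, rate confidence, revise on errors, and ask for help when stuck) can be combined into a simple generate-critique-revise loop. The Python below is a hypothetical sketch, not how ChatGPT or any custom GPT works internally. It assumes an `ask` function that sends a prompt to some chat model and returns its text; the prompt wording, the `CONFIDENCE:` line format, the threshold of 80, and the three-round limit are all illustrative assumptions. A model’s self-rated confidence may be poorly calibrated, so here it serves only as a trigger for escalating to a human.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function that takes a prompt string and returns
# the model's text reply. No real vendor API is assumed here.
AskModel = Callable[[str], str]


@dataclass
class Result:
    answer: str
    confidence: int    # self-rated 0-100, parsed from the model's review
    rounds: int        # how many review rounds were run
    needs_human: bool  # True when the loop gives up and asks a person for help


def parse_confidence(reply: str) -> int:
    """Read a line like 'CONFIDENCE: 85'; treat anything unparseable as 0."""
    for line in reply.splitlines():
        if line.strip().upper().startswith("CONFIDENCE:"):
            try:
                return max(0, min(100, int(line.split(":", 1)[1].strip())))
            except ValueError:
                return 0
    return 0


def answer_with_reflection(question: str, ask: AskModel,
                           threshold: int = 80, max_rounds: int = 3) -> Result:
    draft = ask(f"Answer the question and explain your reasoning.\n\n{question}")
    confidence = 0
    for round_no in range(1, max_rounds + 1):
        # Metacognitive step: the model critiques its own draft and rates it.
        review = ask(
            "Review the draft below. List any errors or unsupported claims, "
            "then give a line 'CONFIDENCE: <0-100>'.\n\n"
            f"Question: {question}\nDraft: {draft}"
        )
        confidence = parse_confidence(review)
        if confidence >= threshold:
            return Result(draft, confidence, round_no, needs_human=False)
        # Not confident enough: revise using the critique, then re-check.
        draft = ask(f"Revise the draft to fix these problems:\n{review}\n\nDraft: {draft}")
    # Still below threshold: flag for human review instead of guessing.
    return Result(draft, confidence, max_rounds, needs_human=True)
```

Passing a stub function in place of a real model makes the control flow easy to test. The `needs_human` flag marks where a human in the loop would step in, and the round limit is one crude way to address the Devil’s Advocate’s concern about speed.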

Trained Users Can Also Provide the Metacognition that AI Needs

According to Bill Gates and Microsoft, generative AI needs two things to reach reliable superintelligence: 1) a few more turns of the crank on scaling, meaning an increase in the amount of data and compute, which Gates sees as imminent; and 2) rule-based software to give AI models metacognition, which he currently views as trivial, making it miraculous that generative AI works at all. I respectfully disagree, at least in part, and suggest they are missing a third key component: humans.

Microsoft still seems focused on automating everything with rule-based, old-paradigm software. This is not surprising, as these methods have worked well for years. Moreover, traditional programmers often underestimate the miracle of deep learning and the significance of the current user-focused metacognition design. It’s not truly trivial, because it allows a skilled human user to supply the GPT with the necessary metacognitive guidance on a session-by-session basis. This ability, embedded in the model’s deep learning processes, is impressive. In my view, it’s far from trivial that natural human metacognition can be transferred to AI through a series of user prompts.

Still, it could be better. Perhaps more rule-based software is needed, or perhaps a few more turns of the crank on scaling will suffice to achieve consistent AGI in all fields, including Bill’s favorite, Sudoku.

What Bill Gates may not fully appreciate is that trained users can already provide the necessary metacognition to AI. In each chat session, skilled humans can prompt the model to enhance AI intelligence, increase consistency, and reduce errors and hallucinations to an acceptable level, with fewer errors and fabrications than most humans make. Humans skilled in prompt engineering can make the results of these AI systems predictable enough for the most demanding applications, including my field, law.

There is no need to wait for Microsoft or other big software companies to add the metacognition dimension to responsibly use ChatGPT. We can do that ourselves through prompt engineering. Even if Microsoft does not solve the problem of autonomous metacognition soon, we humans can fill the gap by improving our own skills. In my view, humans and AI should advance together. Excessive delegation to machines is not advisable. A hybrid approach to work and development is a safer solution and should lead to greater, more diverse intelligence.

The Current Debate on Scaling

Let’s return to the ongoing debate in the AI community regarding the importance of scaling. Some, like Sam Altman and Ilya Sutskever, argue that scaling alone can achieve AGI. They believe scaling is all that’s needed. See, for example, Albert Wenger, Scale is All You Need? (5/7/24); Surprising Admissions by OpenAI Leaders Made in Recent Interviews (e-Discovery Team, 8/8/23). Others disagree, asserting that much more is required. Microsoft, or at least Bill Gates, now aligns slightly with this view. Gates emphasizes the need for metacognition, in addition to scaling, to achieve AGI. Others point to different challenges that could prevent AGI.

Some traditional AI scientists claim that all generative AI is just media hype. This sentiment is familiar to veterans like me. I recall experts making similar statements about the Internet in the 1990s. Then, in May 1995, Bill Gates discovered the Internet, recognized its potential, and called it a coming “Tidal Wave.” He was right. Now, he praises generative AI even more enthusiastically, as does the current CEO of Microsoft, Satya Nadella. The outcome remains uncertain, but game-changing AI is already here. AGI might be reached through metacognition, more scaling, a human-AI merger, or perhaps a combination of all three.

I personally believe humans will always have a role to play, and eventually, we and AI will become one. There’s no debate that adult humans—most of us, anyway—already possess significant metacognitive awareness and skills. We are adept thinkers, planners, and creatives. AI needs us, and we need it.

Everyone who has learned prompt engineering skills, and it does take time and practice, discovers that its true purpose is to impose their human metacognition onto generative AI. In prompt engineering, you learn to do this through a series of prompts. Multi-shot prompting is a classic example. See, for instance, Sarojag, Know about Zero Shot, One Shot and Few Shot Learning (Analytics Vidhya, 12/4/23); Margaret Rouse, Zero-Shot, One-Shot, Few-Shot Learning (Techopedia, 1/17/24). Through this process, we compel AI to engage in a form of metacognitive thinking. However, most agree that the amount and quality of transfer in this manner is insufficient to reach AGI. Expert user prompting alone is not enough to make the AI genius consistent, as Bill Gates mentioned. Not until we see several more scaling increases, additional turns of the crank, and built-in metacognition will this become a reality.
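Few-shot prompting, mentioned above, amounts to packing worked examples into the conversation before the real question, so the model can imitate the pattern of reasoning they demonstrate. The sketch below only builds the message list; it sends nothing to any model. It assumes the role/content message structure that chat APIs such as OpenAI’s Chat Completions accept, and the function name, the privilege-review instruction, and the example emails are all hypothetical.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_messages(instructions: str,
                   examples: List[Tuple[str, str]],
                   new_input: str) -> List[Message]:
    """Zero-shot with no examples, one-shot with one, few-shot with several."""
    messages: List[Message] = [{"role": "system", "content": instructions}]
    for user_text, model_answer in examples:
        # Each pair demonstrates the desired reasoning and output format.
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": model_answer})
    # The real question comes last, after the demonstrations.
    messages.append({"role": "user", "content": new_input})
    return messages


# Hypothetical e-discovery task: classify, give a reason, and self-rate confidence.
INSTRUCTIONS = ("Classify each email as PRIVILEGED or NOT PRIVILEGED. "
                "Give a one-sentence reason and a confidence from 0 to 100.")
EXAMPLES = [
    ("Email from outside counsel: 'Here is my advice on the merger risks.'",
     "PRIVILEGED. Legal advice from counsel to the client. Confidence: 90"),
    ("Email from sales: 'Lunch order for Friday is attached.'",
     "NOT PRIVILEGED. Routine business logistics, no lawyer involved. Confidence: 95"),
]
QUESTION = "Email from the CFO to in-house counsel asking about a pending lawsuit."

few_shot = build_messages(INSTRUCTIONS, EXAMPLES, QUESTION)
zero_shot = build_messages(INSTRUCTIONS, [], QUESTION)
print(len(few_shot), len(zero_shot))  # 6 2
```

Asking each demonstration to state a confidence score is a small way of modeling the self-checking habit the examples are meant to transfer; the skilled user still chooses which examples to show.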

Holistic Compromise: Hybrid Multimodal Approach

Many in the scaling camp of AI designers believe that more turns of the crank will render hybrid human-AI processes unnecessary, expecting scaling alone to awaken metacognition. A more traditional group, including Bill Gates, disagrees. They believe that metacognition and reliability won’t emerge from scaling alone and must be programmed separately. I propose a third way: a holistic multimodal approach that includes all three methods.

In my experience, the correct solution is often “both-and,” not “either-or.” We should adopt a try-all-methods multimodal approach, incorporating scaling, traditional rule-based programming, and, most importantly to me, the human touch. I agree with Ray Kurzweil’s prediction to a certain extent: a merger of man and machine is inevitable.

In my opinion, skilled human users will always be able to elevate AI to a higher level than AI can achieve on its own. We will not slow AI down in the long run. As we merge with AI, we will enhance and expand our own intelligence, making unique contributions with our biological capabilities. Our metacognition skills will likely surpass what probabilistic, stochastic thinking alone can produce. From my studies and experience using AI since 2012 and computers since 1978, I believe that human gifts will be essential in taking AI beyond the AGI level and into the final frontier of superintelligence and the singularity. We possess the metacognition and other abilities that AI needs to reach the next levels.

The Future of Programming Metacognition into Generative AI

Bill Gates and Microsoft may well be correct about the limitations of scaling. If and when progress from scaling ends, the focus may shift to programming metacognitive procedures into generative AI. By then, deep-learned AI will be far more advanced than it is now. According to OpenAI’s Chief Technology Officer, Mira Murati, ChatGPT 5 is expected to reach a PhD level for specific tasks. She describes the current GPT-4o (Omni) level as comparable to a smart high schooler in most tasks. In certain narrow fields, however, GPT-4o is already at a PhD or higher level, such as in programming and some areas of mathematics. The multidimensional mathematical analysis capabilities of deep-learning AI have already contributed to breakthroughs in Nvidia chip design and protein folding, revolutionizing medical research.

Bill Gates envisions more than what human prompt engineering can provide; he seeks real-time self-assessment—autonomous metacognition—built directly into the software. Microsoft, along with others, aims for AI metacognition to function independently of human prompting. While there are clear advantages to this approach, I believe it should not be an “either-or” situation. Metacognition should be both autonomous and open to human enhancements. This hybrid approach prevents individual disempowerment and excessive control by large corporations. Maintaining a “human in the loop” approach ensures that human metacognitive skills can still intervene and guide AI processes. I am cautious about fully autonomous AI in general, as humans remain the most interesting and valuable dimension. Metacognition is just one of the many capabilities we can contribute to superintelligence.

Like many others, I strongly favor a hybrid human-AI relationship, where, as Ray Kurzweil predicts, we become one with AI. Teenagers and others glued to their iPhones are just early, albeit sometimes unhealthy, examples of this merger. This integration will improve over time. Companies like Meta and Apple are focused on advancing this integration, likely through new accessories such as improved glasses, hats, watches, and earbuds. However, the hybrid approach alone is not sufficient. Deep learning and logical programming must also play their parts for us to reach superintelligence levels. Additionally, there is an equality and fairness component to consider. Just as smartphones and the internet are now ubiquitous, it is crucial that all humans have access to the next stage of evolution, not just a highly educated or wealthy elite.

Eventually, metacognition will be built into AI, allowing it to evaluate its own performance during chat sessions without human guidance. The AI will be able to identify errors and uncertainties in its thinking in real-time, making internal evaluation continuous and automatic. Although no one knows exactly how to achieve this yet, with the power of thousands of Microsoft researchers and the scaling improvements that OpenAI will make in the coming years, success is likely. Companies like Google, Anthropic, Apple, Amazon, and a few others are also poised to make similar advancements.

I am hopeful that before the dark side of AI potential ruins everything, AI will progress at exponentially high speeds, sufficient to launch the Singularity and leave behind petty human power ambitions.

Advantages of Built-In Metacognition in AI Software

There are additional advantages of programming metacognition into AI software that haven’t been discussed yet. This programming should allow for the automatic, dynamic adjustment of the AI’s thinking processes. It would enable the AI to modify its approach to specific tasks based on self-assessment, adjusting its reasoning and outputs before responding to a prompt. This contrasts with the existing user prompt methods, which require constant human engagement to guide AI analysis and avoid mistakes and hallucinations.

The current reliance on skilled users limits both the consistency of results and the number of people who can use ChatGPT effectively. By building prompt engineering skills into the software, everyone, even beginners, could quickly achieve B+ or A level AI responses. Nevertheless, studies and common sense suggest that skilled humans will still attain the highest-quality results most of the time, reaching A+ levels with astonishing new insights and profound advice. Therefore, developing skills in prompt engineering now will not be a waste of time, as the upgrade to built-in metacognitive skills is still many years away. Prompt engineering should remain a high priority in educational efforts, even if its importance may diminish somewhat in the future.

Barring privacy restrictions that could block the transfer of metacognition training from one session and user to another, AI could someday autonomously learn from all past interactions. It could integrate feedback from each chat session, and potentially from each user, to improve future performance without external prompts. An internal, ongoing deep learning process would enable AI to evolve based on its experiences with hundreds of millions of humans and trillions more simulated chat sessions.

Of course, privacy concerns, particularly from EU privacy and sanction-taxation lawyers, might oppose such cross-training improvements. I am hopeful that increasingly intelligent AI will help future lawmakers find acceptable compromises. They should be able to do so, provided enough independent lawyers in the world can keep up. My current work as a writer is dedicated to the goal of enhancing lawyer competence through AI understanding and assistance.

Conclusion

Bill Gates aims to reduce the need for quality human input by enhancing AI software with autonomous metacognition features. With the power of thousands of Microsoft researchers and the scaling improvements from OpenAI, Microsoft is likely to succeed eventually. Google and several other companies will probably achieve this as well. Meanwhile, certain foreign powers will resort to their usual trade-secret thievery to try to keep pace. However, slave states are typically adept only at theft and stasis, not innovation and change. When exponential change reaches a certain speed, the theft of old knowledge will become futile. This kind of accelerated change is unprecedented in human history.

The concern among many in the legal profession is that technological advances will continue to accelerate so rapidly that very few independent lawyers will be able to keep up. Few law firms will grasp the latest AI advances and the new science and engineering insights that AGI-level AI will bring. Keeping up is already exhausting, even for specialists dedicated to this quest. Hopefully, there will be enough lawyers merged with AI to keep pace with the accelerating advancements. The same applies to regulators, academics, technology companies, and the public. Enlightened laws, skilled lawyers, and reasonable regulations are essential to restrain the excesses of both humans and AI. This will need to be a team effort, but it should be achievable with the help of ever-improving AI.

At some point, if Ray Kurzweil’s predictions are even close to correct, our intelligence will be increasing at incredible speeds. This may launch the Singularity and leave behind the petty power ambitions of most humans and nation-states. This is my hopeful vision for the future, the one I wish for my grandchildren. Whether I live long enough to see it or not is irrelevant. We are on our way, and despite the many challenges ahead, the long-term future looks promising.

Ralph Losey Copyright 2024 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.