[Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work.]

Introduction

The year 2024 marked a pivotal time in artificial intelligence, where aspirations transformed into concrete achievements, cautions became more urgent, and the distinction between human and machine began to blur. Many visionary leaders led the way this exponentially loaded year, but here are my top six picks: Jensen Huang, Dario Amodei, Ray Kurzweil, Sam Altman, and the Nobel Prize Winners – Geoffrey Hinton and Demis Hassabis. These people propelled AI’s development at a dizzying pace, speaking and writing of both the benefits and dangers; notably, all but one remain optimistic.

Ralph Losey, Losey.AI.

Image by Ralph Losey using his Visual Muse GPT.

Huang built the supercomputers powering AI systems, while Amodei shifted from AI skeptic to hopeful visionary. Kurzweil reaffirmed his positive, bold predictions of human-machine singularity, while Altman laid out a democratic path for global AI governance. Hinton and Hassabis, two of the four Nobel Prize winners in AI in 2024, revolutionized fields ranging from neural networks to molecular biology. Everyone was surprised when Geoffrey Hinton left Google in May 2023 to yell fire about the AI child he, more than anyone, brought into this world. No one was surprised when he received the Nobel Prize at the end of 2024, late in his long career.

The combined work of these six reminds us of AI’s dual nature—its profound ability to benefit humanity while creating new risks requiring constant vigilance. For the legal profession, 2024 underscored the need for proactive leadership, constant education and collaboration with AI to help guide its responsible development.

Jensen Huang: Architect of AI’s Future

Jensen Huang, the CEO and co-founder of NVIDIA, continues to define what’s possible in AI by delivering the computational power to fuel its rise. Huang’s journey began humbly: a young boy from Taiwan accidentally sent to a Kentucky reform school, later waiting tables at Denny’s to make ends meet. These formative experiences shaped his relentless drive and focus, qualities mirrored in NVIDIA’s culture of intensity, innovation and the ever-present whiteboard. For in-depth background on Jensen’s life, see my article, Jensen Huang’s Life and Company – NVIDIA: building supercomputers today for tomorrow’s AI, his prediction of AGI by 2028 and his thoughts on AI safety, prosperity and new jobs. Also see Tae Kim, The Nvidia Way: Jensen Huang and the Making of a Tech Giant (Norton, 12/10/24) (highly recommended).

In 2024, Huang and NVIDIA solidified their role as the backbone of AI progress. Their latest generation of AI supercomputers—capable of unprecedented processing power—drove breakthroughs in generative AI, scientific research, and medical discovery.

That is the prime reason that the stock price of NVIDIA in 2024 increased from approximately $48 per share to $137, a gain of over 180% for the year. The 2024 year-end market capitalization of NVIDIA was over $3.4 trillion, second only to Apple.
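As a quick check on that percentage, using only the approximate per-share prices given above:

```latex
\text{gain} = \frac{P_{\text{end}} - P_{\text{start}}}{P_{\text{start}}}
            = \frac{137 - 48}{48}
            = \frac{89}{48}
            \approx 1.854
            \approx 185\%
```

That is consistent with a gain of "over 180%" for the year.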

Image by Ralph Losey using his Visual Muse GPT.

In early July 2024 at the World AI Forum in Shanghai, Huang made waves by predicting Artificial General Intelligence (AGI)—machines as smart as humans across all tasks—would be a reality by 2028. Huang framed AGI as inevitable, driven by exponential progress in hardware and algorithms: “We’re not just accelerating AI. We’re accelerating intelligence itself.” His foresight in pivoting NVIDIA from gaming GPUs to AI infrastructure years ago is a testament to his long-term vision.

Huang remains pragmatic about the risks, although still very optimistic. He advocates for AI safety frameworks modeled on aviation: “No one stopped airplanes because they could crash. We made them safer. AI is no different.” I can’t say I agree there is “no difference” because the dangers of AI are far more severe and AI is already in use by hundreds of millions of people.

Huang sees AI creating prosperity by automating repetitive jobs while generating new industries that didn’t exist before. In Huang’s eyes, the legal profession, often burdened by time-consuming tasks, stands to benefit enormously.

Image by Ralph Losey using his Visual Muse GPT.

Dario Amodei’s Surprising Optimism in 2024

Dario Amodei is a prominent figure in the field of artificial intelligence. He has a Ph.D. in biophysics from Princeton and conducted postdoctoral research at Stanford, focusing on the study of the brain. He then joined OpenAI, a leading AI research lab, where he specialized in AI safety. In 2021, he co-founded Anthropic, the company behind the AI assistant Claude. Amodei was known for his cautious stance on AI and his warnings about potential dangers, including the risks posed by superintelligent AI.

Image by Ralph Losey using his Visual Muse GPT.

In October 2024 Amodei’s public stance took a surprising turn. He released his 28-page essay “Machines of Loving Grace.” The title is inspired by a poem by Richard Brautigan, which envisions a harmonious coexistence between humans, nature, and technology. Amodei’s work aims to guide us towards a future where AI lives up to that ideal—a future where AI enhances our lives, empowers us to solve our most pressing problems, and helps us create a more just and equitable world. For in-depth background see my article, Dario Amodei’s Vision: A Hopeful Future ‘Through AI’s Loving Grace,’ Is Like a Breath of Fresh Air.

Amodei outlined his surprisingly optimistic vision focusing on five key areas where AI could revolutionize our world:

1. Biology and Physical Health: Amodei, drawing on his expertise in biophysics, believes AI could accelerate medical breakthroughs, leading to the prevention and treatment of infectious diseases, the elimination of most cancers, cures for genetic diseases, and even the extension of the human lifespan.

2. Neuroscience and Mental Health: Amodei sees AI as a powerful tool for understanding the brain and revolutionizing mental health care, potentially leading to cures for conditions like depression, anxiety, and PTSD.

Image by Ralph Losey using his Visual Muse GPT.

3. Economic Development and Poverty: Amodei is a strong advocate for equitable access to AI technologies and believes AI can be used to alleviate poverty, improve food security, and promote economic development, particularly in the developing world.

4. Peace and Governance: While acknowledging the potential for AI to be misused in warfare and surveillance, Amodei also sees its potential to strengthen democracy, promote transparency in government, and facilitate conflict resolution. Amodei’s essay includes a discussion of the internal conflict between democracy and autocracy within countries. He offers a hopeful perspective—one that aligns with the sentiments I often express in my AI lectures.

We all hope that America remains at the forefront of this fight, maintaining its leadership in pro-democratic policies.

Image by Ralph Losey using Stable Diffusion.

5. Work and Meaning: Amodei recognizes concerns about AI-driven job displacement but believes AI will also create new jobs and industries. He envisions a future where people are free to pursue their passions and find meaning outside of traditional work structures.

Amodei did not become blind to the challenges and risks associated with AI. He still states that realizing his optimistic vision requires careful and responsible development. Amodei stresses the importance of ethical guidelines and international agreements to prevent the misuse of AI in areas like autonomous weapons systems. He also advocates for ensuring equal access to AI benefits to avoid exacerbating existing inequalities and calls for proactive efforts to mitigate AI bias and ensure fairness in AI applications.

Amodei’s combination of scientific knowledge, industry experience, and nuanced understanding of both the potential and the perils of AI makes him a crucial voice in shaping the future of this technology. His advocacy for responsible AI development, ethical considerations, and equitable access is essential for ensuring that AI benefits all of humanity.

His new, much more optimistic vision shared in 2024 is an inspiration and a call to action for researchers, policymakers, and the public to work towards creating a future where AI is a force for good.

Image by Ralph Losey using Stable Diffusion.

Ray Kurzweil: The Singularity Is Nearer

Ray Kurzweil’s name remains synonymous with bold predictions, and 2024 proved no exception. According to Bill Gates: “Ray Kurzweil is the best person I know at predicting the future of AI.” I was quick to read and write about his new book of predictions: The Singularity is Nearer (when we merge with AI) (Viking, June 25, 2024). It is a long-awaited sequel to his 2005 book, The Singularity is Near, but stands on its own. Ray does not change his original predicted dates: AGI by 2029. That’s just four years from now. And Kurzweil still predicts The Singularity will arrive in 2045. He thinks it will be great, but I’m not so sure.

Ray Kurzweil’s 2024 statement on his book captures the essence of his hopeful message that The Singularity will arrive in 2045.

“The robots will take over,” the movies tell us. I don’t see it that way. Computers aren’t in competition with us. They’re an extension of us, accompanying us on our journey. . . . By 2045 we will have taken the next step in our evolution. Imagine the creativity of every person on the planet linked to the speed and dexterity of the fastest computer. It will unlock a world of limitless wisdom and potential. This is The Singularity.

The Singularity is Nearer, “The Singularity is Nearer” (YouTube, 6/10/24).

Image of Ray Kurzweil with a Photoshop background added by Ralph Losey.

Kurzweil himself acknowledges the many difficulties ahead in reaching AGI by 2029. He thinks that generative AI models like Gemini (Kurzweil works for Google) and ChatGPT are still held back from attaining AGI by: (1) contextual memory limitations (too small a memory, causing the model to forget earlier input because of token limits); (2) common sense (lacking a robust model of how the real world works); and (3) social interaction (lacking social nuances not well represented in text, such as tone of voice and humor). The Singularity is Nearer at pages 54-58. Kurzweil thinks these will be simple to overcome by 2029. I have been working on the humor issue myself and am inclined to agree.
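Kurzweil’s first hurdle, the contextual memory limit, can be illustrated with a toy sketch. The function below is hypothetical and is not how Gemini or ChatGPT actually manage their context. It assumes that whitespace-separated words stand in for tokens, and that the oldest input is dropped first once a fixed token budget is exceeded.

```python
def fit_to_context(messages: list[str], max_tokens: int) -> list[str]:
    """Keep the most recent messages whose combined size fits the window.

    Hypothetical illustration: "tokens" are approximated by whitespace-separated
    words, whereas real models use subword tokenizers and far larger windows.
    """
    kept: list[str] = []
    used = 0
    # Walk backwards from the newest message, keeping as many as will fit.
    for message in reversed(messages):
        cost = len(message.split())
        if used + cost > max_tokens:
            break  # everything older than this point is "forgotten"
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


conversation = [
    "My client is Acme Corp.",
    "The contract was signed in 2019.",
    "Please summarize the dispute.",
]
print(fit_to_context(conversation, max_tokens=10))
# → ['The contract was signed in 2019.', 'Please summarize the dispute.']
```

With a 10-word budget, the earliest message (the client’s name) no longer fits and silently drops out, which is the kind of forgetting Kurzweil describes.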

Kurzweil also points to the problem with AI hallucinations. The Singularity is Nearer at page 65. This is something we lawyers worry about a lot too. “My AI Did It!” Is No Excuse for Unethical or Unprofessional Conduct (Florida Bar approved CLE). I personally see great progress being made in this area now by software improvements and by more careful user prompting. See my OMNI Version – ChatGPT4o – Retest of the Panel of AI Experts – Part Three.

Image by Ralph Losey using his Visual Muse GPT.

As usual, whether or not we attain AGI by 2029 depends on how you define the term. The same applies to the famous Turing test of intelligence. See e.g. New Study Shows AIs are Genuinely Nicer than Most People – ‘More Human Than Human’ (e-Discovery Team, 2/26/24).

To Kurzweil, AGI means attaining the highest human level in all fields of knowledge, which is not something any single human has ever achieved. The AI would be as smart as the top experts in every specialized field of law, medicine, liberal arts, science, engineering, mathematics, and software and AI design and programming. The Singularity is Nearer at pages 63-69 (Turing Test).

When an AI can do that, match or exceed the best of the best humans in all fields, then Kurzweil thinks AGI will have been attained, not before. That is a very stringent definition and test of AGI. It explains why Kurzweil is sticking with his original 2029 prediction and not moving it up to 2025 or 2026 as Elon Musk and others have done. Their earlier predictions might only work if AI is compared to the above-average human in every field, not to the Einsteins on the peaks.

Image by Ralph Losey using his Visual Muse GPT.

Further, Kurzweil predicts that once an AI is as smart as the most expert humans in all fields, the AGI level, then incremental increases in AI intelligence will appear to end. Instead, there will appear to be a “sudden explosion of knowledge.” AI intelligence will jump to a “profoundly superhuman” level. The Singularity is Nearer at page 69. Then, the next level of The Singularity will become attainable as our knowledge increases unimaginably fast. Many great new inventions and accomplishments will occur in quick order as AGI level AI and the human handlers rush towards the Singularity.

Kurzweil defines The Singularity as a hybrid state of human AI merger, where human minds unite with the “profoundly superhuman” level of AI minds. The unification sought requires the human neocortex to extend into the cloud. This presents great technical and cultural hurdles that will have to be overcome.

Kurzweil’s predictions raise profound legal and ethical questions. Who governs AI when its decisions exceed human comprehension? Will you have to be enhanced to practice law, to judge, to create AI policies and laws? What happens when AI rewrites concepts of identity, privacy, and accountability?

Image by Ralph Losey using his Visual Muse GPT.

Sam Altman: Champion of Democratic AI

Sam Altman, the CEO of OpenAI, in addition to leading his company, wrote two important pieces in 2024 and promoted their ideas:

- Who will control the future of AI? an editorial published on July 25, 2024 in the Washington Post on a pivotal choice now facing the world concerning AI development. I wrote about this essay in The Future of AI: Sam Altman’s Vision and the Crossroads of Humanity.

- The Intelligence Age (9/23/24) an essay sharing his vision of the future where ChatGPT and other AI technologies rapidly transform the world for the better. I wrote about this essay in Can AI Really Save the Future? A Lawyer’s Take on Sam Altman’s Optimistic Vision.

Image by Ralph Losey using his Visual Muse GPT.

Sam Altman is overall very optimistic, but he does consider both the dark and light side of AI. He can hardly ignore the dark side as he is asked questions about it every day. In the July editorial he states, I think correctly, that we are at a crossroads:

… about what kind of world we are going to live in: Will it be one in which the United States and allied nations advance a global AI that spreads the technology’s benefits and opens access to it, or an authoritarian one, in which nations or movements that don’t share our values use AI to cement and expand their power?

Sam Altman, “Who will control the future of AI?” (The Washington Post, 7/25/24).

Image by Ralph Losey using his Visual Muse GPT.

Altman of course advocates for a “democratic” approach to AI, one that prioritizes transparency, openness, and broad accessibility. He contrasts this with an “authoritarian” vision of AI, characterized by centralized control, secrecy, and the potential for misuse. In Altman’s words, “We need the benefits of this technology to accrue to all of humanity, not just a select few.” This means ensuring that AI is developed and deployed in a way that is inclusive, equitable, and respects fundamental human rights.

To attain these ends Altman advocates for a global governance body—akin to the International Atomic Energy Agency—to establish norms and prevent misuse. His vision prioritizes AI for global challenges: climate change, pandemics, and economic inequality.

In Sam Altman’s next essay, The Intelligence Age, he presents the positive view. He predicts the exponential growth we’ve seen in generative AI will continue, unlocking astonishing advancements in science, society, and beyond. According to Altman, AI-driven breakthroughs could soon lead us into a virtual utopia—one where the possibilities seem limitless. While this optimistic vision may come across as a sales pitch, to his credit, there’s more depth to it. Altman’s predictions are rooted in science and insider knowledge, which means his ideas should be taken seriously—but with a healthy dose of skepticism. See my article, Can AI Really Save the Future? A Lawyer’s Take on Sam Altman’s Optimistic Vision.

Image by Ralph Losey using his Visual Muse GPT.

Sam’s The Intelligence Age essay has a personal touch and some poetic qualities. I especially like the reference at the start of this quote to silicon as melted sand.

Here is one narrow way to look at human history: after thousands of years of compounding scientific discovery and technological progress, we have figured out how to melt sand, add some impurities, arrange it with astonishing precision at extraordinarily tiny scale into computer chips, run energy through it, and end up with systems capable of creating increasingly capable artificial intelligence.

This may turn out to be the most consequential fact about all of history so far. It is possible that we will have superintelligence in a few thousand days (!); it may take longer, but I’m confident we’ll get there.

Sam Altman, “The Intelligence Age” (samaltman.com, 9/23/24).

I agree AI is the most important invention in human history. See Artificial General Intelligence, If Attained, Will Be the Greatest Invention of All Time. To keep it real, however, all of the past technology shifts were buffered by incremental growth. AI’s exponential acceleration may very well overwhelm our existing systems. We need to try to manage this transition, and to do that we need to consider the problems that come with it. See Seven Problems of AI: an incomplete list with risk avoidance strategies and help from “The Dude” (8/6/24). To be fair to Sam, his prior editorial did address some of the dark side, but not all of it.

Sam Altman’s essay, The Intelligence Age, offers a compelling vision of how AI can dramatically improve life in the coming decades. However, as captivating as Altman’s optimism is, we must balance it with a dose of realism. There is more to it than the political struggle between democracy and dictatorship discussed in his earlier editorial. The road ahead is incredible but filled with many hurdles that cannot be ignored.

Image by Ralph Losey using his Visual Muse GPT.

Geoffrey Hinton and Demis Hassabis: Nobel Laureates of AI

2024 brought well-deserved recognition to Geoffrey E. Hinton and Demis Hassabis, as both received Nobel Prizes for their contributions to science. This is also a big deal for the field of AI itself, as no Nobel Prize had ever been awarded for AI work before the four given this year, two in Physics and two in Chemistry.

Geoffrey Hinton: Godfather of AI

Who is Geoffrey E. Hinton? Hinton, often referred to as the “godfather of AI,” was awarded the 2024 Nobel Prize in Physics alongside John Hopfield for their foundational work on artificial neural networks. Hinton’s best-known contribution was his work in the 1980s on the backpropagation algorithm, the significance of which went largely unrecognized for almost thirty years. This approach revolutionized machine learning by allowing computers to “learn” through a process of identifying and correcting errors. This breakthrough paved the way for the powerful AI systems we have today, such as large language models.
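The core idea of backpropagation, sending the error backwards through the network with the chain rule and nudging each weight to shrink it, can be shown on a toy two-layer model. This is a minimal illustrative sketch, not Hinton’s original formulation: the network y = w2 * tanh(w1 * x), the squared-error loss, the starting weights, the learning rate and the step count are all assumptions chosen for simplicity.

```python
import math


def train(x: float, target: float, steps: int = 200, lr: float = 0.1):
    """Fit a toy two-layer network, y = w2 * tanh(w1 * x), to a single target.

    Returns the learned weights and the final squared-error loss.
    """
    w1, w2 = 0.5, 0.5  # arbitrary starting weights (an assumption)
    for _ in range(steps):
        # Forward pass: compute the hidden value and the prediction.
        h = math.tanh(w1 * x)
        y = w2 * h
        error = y - target  # how far off the prediction is

        # Backward pass: the chain rule carries the error back layer by layer.
        # The loss is 0.5 * error**2, so d(loss)/d(y) = error.
        grad_w2 = error * h
        grad_h = error * w2  # error signal sent back to the hidden unit
        grad_w1 = grad_h * (1.0 - h * h) * x  # tanh'(z) = 1 - tanh(z)**2

        # Correct the weights: step each one against its gradient.
        w1 -= lr * grad_w1
        w2 -= lr * grad_w2

    final_error = w2 * math.tanh(w1 * x) - target
    return w1, w2, 0.5 * final_error ** 2


w1, w2, loss = train(x=1.0, target=0.8)
print(f"w1={w1:.3f}, w2={w2:.3f}, loss={loss:.6f}")
```

Each pass identifies the error, traces how much each weight contributed to it, and corrects both weights a little, so the loss shrinks toward zero over the training steps.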

Image taken from Wikipedia with a Photoshop background added by Ralph Losey.

Geoffrey Hinton is a British Canadian, born in 1947 in Wimbledon, London, England. He comes from a very accomplished academic family. His great-great-grandfather was George Boole, the mathematician and logician who developed Boolean algebra, and his great-grandfather was Charles Howard Hinton, a mathematician and inventor. His parents were involved in science and social work. Geoffrey studied experimental psychology at King’s College, Cambridge, earning a Bachelor of Arts in 1970. Next, he earned a Ph.D. in Artificial Intelligence at the University of Edinburgh in 1978.

Hinton then moved to the United States to work at the University of California, San Diego (1978–1980), returned to the U.K. to work at the University of Sussex (1980–1982), went back to the U.S. at Carnegie Mellon University (1982–1987), and finally settled in Canada at the University of Toronto (1987–2013, and thereafter to today as an Emeritus Professor).

His early work focused on neural networks, a concept that was largely dismissed by the AI community during the 1970s and 1980s in favor of symbolic AI approaches. Hinton’s development of the Boltzmann Machine in 1983 while at Carnegie Mellon was largely ignored. It was a stochastic neural network capable of learning internal representations and solving complex combinatorial problems, work that later led to the Nobel Prize. Despite being ignored for decades, Geoffrey Hinton stubbornly persevered. It turns out he was right and almost everyone else in the AI establishment was wrong (although many to this day still refuse to admit it and still hold generative AI in low regard). Professor Hinton eventually flourished at the U. of Toronto, where he established himself as a global leader in neural networks and deep learning research and supervised a number of students who later became influential, including Yann LeCun (Meta) and Ilya Sutskever (OpenAI).

In 2012 Hinton co-founded DNNResearch Inc., a startup focused on deep learning algorithms, with two of his students, Alex Krizhevsky and Ilya Sutskever. The company played a key role in developing AlexNet, which won the 2012 ImageNet Challenge and demonstrated the power of deep convolutional neural networks. In 2013, DNNResearch was acquired by Google, and Hinton joined Google Brain as a Distinguished Research Scientist. At Google, Hinton worked on large-scale neural networks, deep learning innovations, and their applications in AI.

In May 2023 Geoffrey Hinton shocked the world by resigning from Google so that he could freely warn the world about the frighteningly fast advancement of generative AI and its potential risks.

Image by Ralph Losey using his Visual Muse GPT.

- AI could become more intelligent than humans. Hinton now says that AI could become so intelligent that it could take control and eliminate human civilization. He has also said that AI could become more efficient at cognitive tasks than humans. “I think it’s quite conceivable that humanity is just a passing phase in the evolution of intelligence.”

- AI could be used for disinformation. Hinton has warned that AI could be used to create and spread disinformation campaigns that could interfere with elections.

- AI could make the rich richer and the poor poorer. Hinton has said that the productivity gains from AI, which in a decent society would benefit everyone, could instead take jobs and make the rich richer and the poor poorer.

- AI could make it impossible to know what’s true. Hinton has said that AI could make it impossible to know what’s true by having so many fakes.

- Government Regulations: Hinton has said that he thinks politicians need to put equal time and money into developing guardrails. Further, “I think we should be very careful about how we build these things.” “There’s no guaranteed path to safety as artificial intelligence advances.”

- Potential for AI Misuse: “It is hard to see how you can prevent the bad actors from using it for bad things.” “Any new technology, if it’s used by evil people, bad things can happen.”

- The Timeline to Superhuman AI: “The idea that this stuff could actually get smarter than people—a few people believed that. But most people thought it was way off. And I thought it was way off. I thought it was 30 to 50 years or even longer away. Obviously, I no longer think that.”

- The Godfather’s Guilt: “I console myself with the normal excuse: If I hadn’t done it, somebody else would have.”

Although much of the AI world was shocked and surprised by the seemingly excessive dark fears of Geoffrey E. Hinton, no one was surprised to see the Godfather of generative AI win the Nobel Prize. We will wait and see how his concerns about AI change in the future. As a Nobel Prize winner, his opinion will be taken more seriously than ever.

Demis Hassabis: Gamer and Future King of AI?

Image taken from Wikipedia with a Photoshop background added by Ralph Losey.

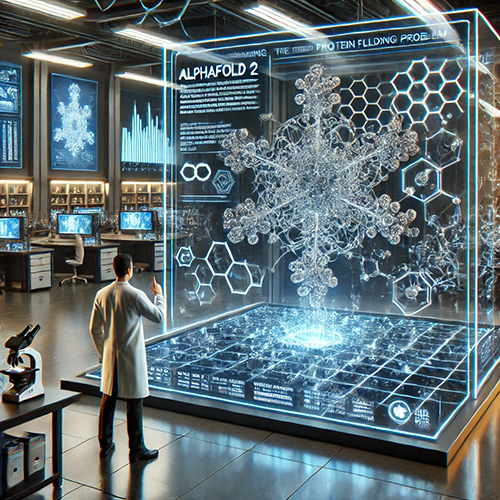

Who is Demis Hassabis? He is the co-founder of DeepMind and, in the view of many, represents the best of the next generation of AI scientist-leaders. In 2024 he was awarded the Nobel Prize in Chemistry, alongside his DeepMind colleague John Jumper, for developing AlphaFold2.

Their award was no surprise in the AI community. Hassabis has made no secret of his desire for DeepMind to win this award, and he expects to receive many more in the coming years. The Nobel Prize Committee recognized that the AlphaFold2 AI algorithm’s ability to accurately predict protein structures was a groundbreaking achievement with far-reaching implications for medicine and other scientific research.

Unlike Professor Hinton, Demis Hassabis still works for Google (actually its holding company, Alphabet) in London and is not the doom-and-gloom type. He is a gamer, not a professor, and he is used to competition and risk. Although also deeply involved in and concerned about AI ethics, Hassabis and his teams at DeepMind are working aggressively on many more scientific breakthroughs and inventions. Unlike the older pioneer Hinton, Hassabis, about thirty years his junior, has not yet reached his prime. Some speculate that he may not only receive more Nobel Prizes, but may also someday lead Alphabet itself, not just its subsidiary, DeepMind.

Demis Hassabis’ life story is very interesting and explains his interdisciplinary approach. He was born in 1976 in London to a family that combined Greek Cypriot and Chinese Singaporean heritage. Young Demis was quickly recognized as a chess prodigy after taking up the game at age four. He attained the rank of Chess Master at age 13. He is used to competing for awards and winning. Chess also instilled in him a deep interest in strategy, problem-solving, and human cognition.

That led to playing complex computer games, where he continued winning. By age 17 he was designing video games, including co-designing the highly regarded Theme Park (1994, Atari and PC). This was a simulation game where players built and ran different types of theme parks. It sparked a whole genre of management simulation games built around similar ideas.

Image by Ralph Losey using his Visual Muse GPT.

Demis, who obviously enjoyed roller coaster rides and theme parks, created this groundbreaking video game while working at the UK game company Bullfrog Productions during a gap year. He had passed his final school exams two years early, at age 16, and Cambridge advised him to take a year off before enrolling. He then studied computer science at Queens’ College, Cambridge. After graduating, he started his own video game company, Elixir Studios, which allowed him to combine his love for games and AI to create political simulation games. Elixir released two games: Republic: The Revolution (2003, PC and Mac) (a simulation where you rise to power in a former Soviet state using political and coercive means) and Evil Genius (2004, PC) (a comedic take on spy fiction). Elixir, which started as a winning enterprise, failed in 2005 during a video game downturn. Rumor has it they were working at the time on a video game version of The Matrix. Can you imagine the irony if Hassabis had completed it?

The still-young genius Demis Hassabis then shifted his interests to the scientific study of human intelligence and enrolled in the neuroscience Ph.D. program at University College London. Thanks to his strong hands-on AI and computational background, and his incredible genius, his academic work immediately impressed his professors, as well as those at MIT and Harvard, where he also studied. Hassabis explored the neuroscience of how humans reconstruct past experiences to imagine the future. He was looking for inspiration in the human brain for new types of AI algorithms.

The research by Hassabis on the hippocampus and its role in memory and imagination was published in leading journals like Nature, even before he earned his Ph.D. in Cognitive Neuroscience. His very first academic work, Patients with hippocampal amnesia cannot imagine new experiences (PNAS, 1/30/2007), became a landmark paper that showed systematically for the first time that patients with damage to their hippocampus, known to cause amnesia, were also unable to imagine themselves in new experiences.

Soon after completing his studies, Demis Hassabis, already an AI legend, co-founded DeepMind with Shane Legg and Mustafa Suleyman in 2010. The purpose of DeepMind was to build artificial general intelligence (AGI) capable of solving complex problems. They planned to do so by combining insights from neuroscience, mathematics, and computer science into AI research. Only four years later DeepMind was considered the best AI lab in the UK and was acquired by Google in 2014 for £400 million. Google at the time promised that DeepMind would retain its autonomy. After years of negotiations that never really panned out, but DeepMind did get the money it needed to retain its top scientists and hire more. In 2016 DeepMind’s AlphaGo AI defeated world champion Lee Sedol. DeepMind then created AlphaZero in 2018, called the “One program to rule them all.” As the abstract to the paper announcing the discovery stated:

A single AlphaZero algorithm that can achieve superhuman performance in many challenging games. Starting from random play and given no domain knowledge except the game rules, AlphaZero convincingly defeated a world champion program in the games of chess and shogi (Japanese chess), as well as Go.

David Silver, Demis Hassabis, et al, “A general reinforcement learning algorithm that masters chess, shogi, and Go through self-play” (Science, 12/07/18).

The incredible fact is that AlphaZero attained superintelligence without human input and did so in multiple games, not just chess.

In 2018 DeepMind went beyond games to create AlphaFold, AI-based software for predicting protein structures. In 2020 DeepMind released AlphaFold2, which achieved groundbreaking results at CASP14, solving the protein folding problem that had challenged scientists for decades. This breakthrough second version of AlphaFold was created by DeepMind under the management and scientific leadership of Demis Hassabis, with DeepMind’s John Jumper as the lead scientist on the project. See, Hassabis, Jumper, et al, Highly accurate protein structure prediction with AlphaFold (Nature, 7/15/21) (paper on AlphaFold2 by DeepMind scientists has been cited more than 27 thousand times, even before the Nobel Prize award to Hassabis and Jumper for this discovery).

Image by Ralph Losey using his Visual Muse GPT.

AlphaFold2 utilizes transformer neural networks, a type of AI architecture, and was trained on vast datasets of known protein structures. The algorithm’s ability to accurately predict protein structures significantly accelerates scientific discovery in various fields, including drug development, disease research, and bioengineering. This protein folding achievement led to Hassabis and Jumper sharing the Nobel Prize in Chemistry in 2024.
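The “transformer” idea mentioned above centers on attention: each position in a sequence scores its relevance to every other position and then takes a weighted average of their features. The sketch below is a generic, minimal scaled dot-product self-attention in plain Python. It is not AlphaFold2’s actual architecture, which is far more elaborate. As simplifying assumptions, the queries, keys and values are the raw inputs rather than learned projections, and the three-position input and its feature values are invented for illustration.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Score every position by how well its key matches this query.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


# Three toy positions (think "residues"), each with a 2-number feature vector.
# Queries, keys and values are the raw inputs here, with no learned projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in attention(x, x, x):
    print([round(c, 3) for c in row])
```

Because the softmax weights always sum to 1, every output is a blend of the input features, weighted toward the positions that matter most to it. Real models stack many such layers with learned weights.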

Demis Hassabis remains CEO of Google DeepMind, which is now a subsidiary of Alphabet Inc. According to the excellent, well-researched new book Supremacy: AI, ChatGPT, and the Race that Will Change the World by Parmy Olson, which profiles Demis Hassabis and Sam Altman, Hassabis was satisfied with his position at Alphabet, even though Google DeepMind never received its once-promised complete autonomy. According to Olson, many consider Hassabis a likely successor to Sundar Pichai. The book was completed a few months before the Nobel Prize announcement in late 2024.

I personally doubt Demis Hassabis would still want that position now that he has tasted Nobel Prize victory at age 48. He has always seemed more focused on winning than on money, and a second Nobel Prize would put him in rare company: only five people in history have won two. Marie Curie was the first person to win two, one in Physics in 1903 and another in Chemistry in 1911. John Bardeen won the Nobel Prize in Physics twice, in 1956 for inventing the transistor and in 1972 for the theory of superconductivity. Frederick Sanger and Karl Barry Sharpless both won the Nobel Prize in Chemistry twice. The incredible Linus Pauling, an American, won the Nobel Prize in Chemistry in 1954 and, get this, the Nobel Peace Prize in 1962 for his activism against nuclear weapons.

Linus Pauling (1901-1994) is the only person to win two unshared Nobel Prizes. Each of the other double winners shared at least one of their prizes, as Demis Hassabis did when winning his first Nobel Prize. I bet Demis goes for another Nobel on his own, or for a shared third. Any gamer would try to win, and a true genius like Demis Hassabis, unlike other alleged genius wannabes, knows the stupidity of competing for mere money and power.

Can Demis Become the First Person in History to Win Three Nobel Prizes?

Images by Ralph Losey using his Visual Muse GPT.

Conclusion: A Call to Action

The events of 2024 have shown the incredible potential of AI and how critical the next few years may be. What if ex-Google Geoffrey Hinton is right, and Google’s own Ray Kurzweil and Demis Hassabis are wrong? No one knows for sure. Maybe everything will turn out fine, and maybe we will be in crisis mode for decades. Maybe we will need more lawyers than ever before, maybe far fewer. It seems to me the odds favor more, because private enterprise and governments need lawyers to enact and follow rules and regulations on AI. Lawyers will likely have to work in teams with AI experts and policy experts to ensure that AI systems align with fairness, ethics, and human values. If so, and if we do our jobs right, maybe Demis Hassabis will win three golds.

I am pretty sure Demis will rise to the challenge, but will we? Will we be able to keep up with the technology leaders of AI? Most lawyers are very competitive and so I say yes we can. We can play that game and we can win.

Legal professionals can help humanity to navigate the difficult times ahead. Lawyers are uniquely qualified by training and temperament to provide balanced advice. We know there are always two sides to a story. We know there are always dark forces and dangers about. We know we must both think ahead and plan and stay current on the facts at hand to adjust to the unexpected. Life is far more complicated than any games of Chess or Go. We will have to work hard to keep our hand in the game for as long as we can. Perhaps with AI’s help we can all win the game together.

Image by Ralph Losey using his Visual Muse GPT.

Now listen to the EDRM Echoes of AI podcast of this article, Echoes of AI on the Key AI Leaders of 2024. Hear two Gemini model AIs talk about this article and respond to audience questions. They wrote the podcast, not Ralph.

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.