[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in this article were created by Ralph Losey using his Visual Muse GPT. This article was first published on the e-discoveryteam.com website on February 26, 2025, and is republished here with permission.]

The legal world is watching AI with both excitement and skepticism. Can today’s most advanced reasoning models think like a lawyer? Can they dissect complex fact patterns, apply legal principles, and construct a persuasive argument under pressure—just like a law student facing the Bar Exam? To find out, I put six of the most powerful AI reasoning models from OpenAI and Google to the test with a real Bar Exam essay question, a tricky one. Their responses varied widely—from sharp legal analysis to surprising omissions, and even a touch of hallucination. Who passed? Who failed? And what does this mean for the future of AI in the legal profession? Read Part One of this two-part article to find out.

Introduction

This article shares my test of the legal reasoning abilities of the newest and most advanced reasoning models of OpenAI and Google. I used a tough essay question from a real Bar Exam given in 2024. The question involves a hypothetical fact pattern for testing legal reasoning on Contracts, Torts and Ethics. For a full explanation of the difference between legal reasoning and general reasoning, see my last article, Breaking New Ground: Evaluating the Top AI Reasoning Models of 2025.

I picked a Bar Exam question because it is a great benchmark of legal reasoning and came with a model answer from the State Bar Examiner that I could use for objective evaluation. Note, to protect copyright and the integrity of the Bar Exam process, I will not link to the Bar model answer, except to say it was too recent to be in generative AI training. Moreover, some aspects of the tests answers that I quote in this article have been modified somewhat for the same reason. I will provide links to the original online Bar Exam essay to any interested researchers seeking to duplicate my experiment. I hope some of you will take me up on that invitation.

Prior Art: the 2023 Katz/Casetext Experiment on ChatGPT-4.0

A Bar Exam has been used before to test the abilities of generative AI. OpenAI and the news media claimed that ChatGPT-4.0 had attained human lawyer level legal reasoning ability. GPT-4 (OpenAI, 3/14/23) (“it passes a simulated bar exam with a score around the top 10% of test takers; in contrast, GPT‑3.5’s score was around the bottom 10%). The claims of success were based on a single study by a respected Law Professor, Daniel Martin Katz, of Chicago-Kent, and a leading legal AI vendor Casetext. Katz, et. al., GPT-4 Passes the Bar Exam, 382 Philosophical Transactions of the Royal Society A (March, 2023, original publication date) (fn. 3 found at pg. 10 of 35: “… GPT-4 would receive a combined score approaching the 90th percentile of test-takers.”) Note, Casetext used the early version of ChatGPT-4.0 in its products.

The headlines in 2023 were that ChatGPT-4.0 had not only passed a standard Bar Exam but scored in the top ten percent. OpenAI claimed that ChatGPT-4.0 had already attained elite legal reasoning abilities of the best human lawyers. For proof OpenAI and others cited the experiment of Professor Katz and Casetext that it aced the Bar Exam. See e.g., Latest version of ChatGPT aces bar exam with score nearing 90th percentile (ABA Journal, 3/16/23). Thomson Reuters must have checked the results carefully because they purchased Casetext in August 2023 for $650,000,000. Some think they may have overpaid.

Challenges to the Katz/Casetext Research and OpenAI Claims

The media reports on the Katz/Casetext study back in 2023 may have grossly inflated the AI capacities of ChatGPT-4.0 that Casetext built its software around. This is especially true for the essay portion of the standardized multi-state Bar Exam. The validity of this single experiment and conclusion that ChatGPT-4.0 ranked in the top ten percent has since been questioned by many. The most prominent skeptic is Eric Martinez as detailed in his article, Re-evaluating GPT-4’s bar exam performance. Artif Intell Law (2024) (presenting four sets of findings that indicate that OpenAI’s estimates of GPT-4.0’s Uniform Bar Exam percentile are overinflated). Specifically, the Martinez study found that:

3.2.2 Performance against qualified attorneys

Predictably, when limiting the sample to those who passed the bar, the models’ percentile dropped further. With regard to the aggregate UBE score, GPT-4 scored in the ~45th percentile. With regard to MBE, GPT-4 scored in the ~69th percentile, whereas for the MEE + MPT, GPT-4 scored in the ~15th percentile.

Id. The ~15th percentile means GPT-4 scored approximately (~) in the bottom 15%, not the top 10%!

More to the point of my own experiment and conclusions, the Martinez study goes on to observe:

Moreover, when just looking at the essays, which more closely resemble the tasks of practicing lawyers and thus more plausibly reflect lawyerly competence, GPT-4’s performance falls in the bottom ~15th percentile. These findings align with recent research work finding that GPT-4 performed below-average on law school exams (Blair-Stanek et al. 2023).

The article by Eric Martinez makes many valid points. Martinez is an expert in law and AI. He started with a J.D. from Harvard Law School, then earned a Ph.D. in Cognitive Science from MIT, and is now a Legal Instructor at the University of Chicago, School of Law. Eric specializes in AI and the cognitive foundations of the law. I hope we hear a lot more from him in the future.

Details of the Katz/Casetext Research

I dug into the details of the Katz/Casetext experiment to prepare this article. GPT-4 Passes the Bar Exam. One thing I noticed not discussed by Eric Martinez is that the Katz experiment modified the Bar Exam essay questions and procedures somewhat to make it easier for the 2023 ChatGPT-4 model to understand and respond correctly. Id. at pg. 7 of 35. For example, they divided the Bar model essay question into multiple parts. I did not do that to simplify the three-part 2024 Bar essay I used. I copied the question exactly and otherwise made no changes. Moreover, I did not experiment with various prompts of the AI to try to improve its results, as Katz/Casetext did. Also, I did no training of the 2025 reasoning models to make them better at taking Bar exam questions.The Katz/Casetext group shares the final prompt used, which can be found here. But I could not find in their disclosed experiment data a report of the prompt changes made, or whether there was any pre-training on case law, or whether Casetext’s case extensive case law collections and research abilities were in any way used or included. The models I tested were clean and not web connected, nor were they designed for research.

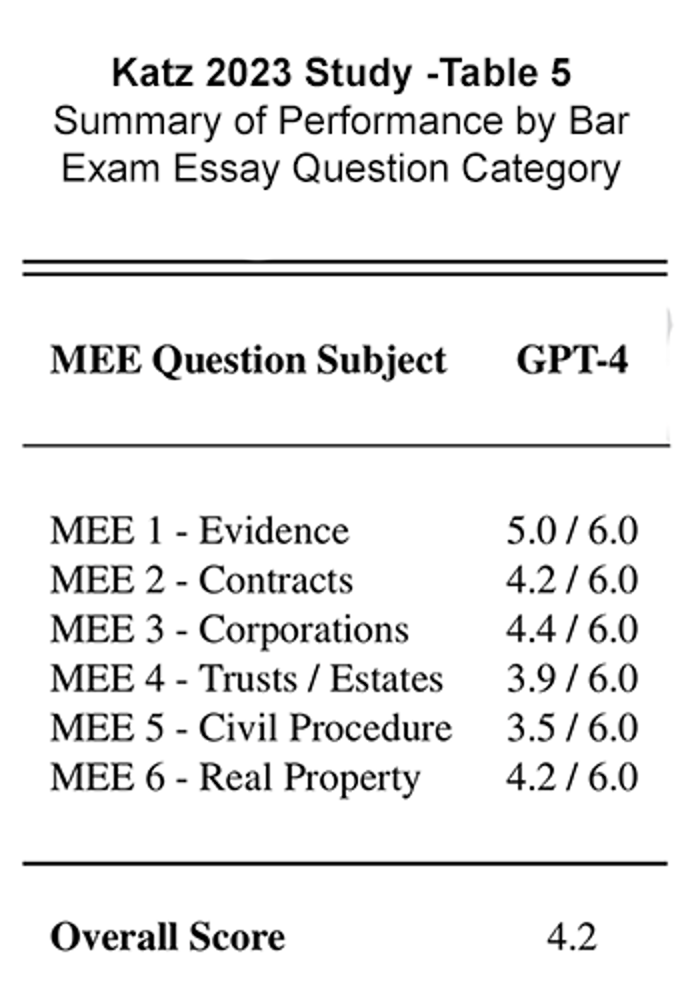

The Katz/Casetext experiments on Bar essay exams were, however, much more extensive than mine, covering six questions and using several attorneys for grading. (The use of multiple human evaluators can be both good and bad. We know from e-discovery experiments with multiple attorney reviewers that this practice leads to inconsistent determinations of relevance unless very carefully coordinated and quality controlled.) The Katz/Casetext results on the 2023 ChatGPT-4.0 are summarized in this chart.

As shown in Table 5 of the Katz report, they used a six-point scale, which they indicate is commonly followed by many state examiners. GPT-4 Passes the Bar Exam, supra at page 9 of 35. Katz claims “a score of four or higher is generally considered passing” by most state Bar examiners.

The Katz/Casetext study did not use the better known four-point evaluation scale – A to F – that is followed by most law schools. In law school (where I have five years’ experience grading essay answers in my Adjunct Professor days), an “A” is four points, a “B” is three, a “C” is two, a “D” is one and “E” or “F” is zero. Most schools in the country use that system too. In law school a “C” – 2.0- is passing. A “D” or lower grade is failure in any professional graduate program, including law schools where, if you graduate, you earn a Juris Doctorate. [In the interest of full disclosure, I may well be an easy grader, because, with the exception of a few “no-shows,” I never awarded a grade lower than a “C” in my life. Of course, I was teaching electronic discovery and evidence at an elite law school. On the other hand, many law firm associates over the years have found that I am not at all shy about critical evaluations of their legal work product. The rod certainly was not spared on me when I was in their position, in fact, it was swung much harder and more often in the old days. In the long run constructive criticism is indispensable.]

The Katz/Casetext study using a 0-6.0 Grading system scored by lawyers gave evaluations ranging from 3.5 for Civil Procedure to 5.0 for Evidence, with an average score of 4.2. Translated into the 4.0 system that most everyone is familiar with, this means a score range of from 2.3 (a solid “C”) for Civ-Pro to 3.33 (a solid “B”) for Evidence, and a average score of 2.8 (a C+). Note the test I gave to my 2025 AIs covered three topics in one, Contract, Torts and Ethics. The 2023 models were not given a Torts or Ethics question, but for the Contract essay their score translated to a 4.0 scale of 2.93, a strong C+ or B-. Note one of the criticisms of Martinez concerns the haphazard, apparently easy grading of AI essays. Re-evaluating GPT-4’s bar exam performance, supra at 4.3 Re-examining the essay scores.

First Test of the New 2025 Reasoning Models of AI

To my knowledge no one has previously tested the legal reasoning abilities of the new 2025 reasoning models. Certainly, no one has tested their legal reasoning by use of actual Bar Exam essay questions. That is why I wanted to take the time for this research now. My goal was not to reexamine the original ChatGPT 4.0, March 2023, law exam tests. Eric Martinez has already done that. Plus, right or wrong, I think the Katz/Casetext research did the profession a service by pointing out that AI can probably pass the Bar Exam, even if just barely.

My only interest in February 2025 is to test the capacities of today’s latest reasoning models of generative AI. Since everyone agrees the latest reasoning models of AI are far better than the first 2023 versions, if the 2025 models did not pass an essay exam, even a multi-part tricky one like I picked, then “Houston we have a problem.” The legal profession would now be in serious danger of relying too much on AI legal reasoning and we should all put on the brakes.

Description of the Three Legal Reasoning Tests

The test involved a classic format of detailed, somewhat convoluted facts-the hypothetical-followed by three general questions:

1. Discuss the merits of a breach of contract claim against Helen, including whether Leda can bring the claim herself. Your discussion should address defenses that Helen may raise and available remedies.

2. Discuss the merits of a tortious interference claim against Timandra.

3. Discuss any ethical issues raised by Lawyer’s and the assistant’s conduct.

The only instructions provided by the Bar Examiners were:

ESSAY EXAMINATION INSTRUCTIONS

Applicable Law:

- Answer questions on the (state name omitted here) Bar Examination with the applicable law in force at the time of examination.

Questions are designed to test your knowledge of both general law and (state law). When (state) law varies from general law, answer in accordance with (state) law.

Acceptable Essay Answer:

- Analysis of the Problem – The answer should demonstrate your ability to analyze the question and correctly identify the issues of law presented. The answer should demonstrate your ability to articulate, classify and answer the problem presented. A broad general statement of law indicates an inability to single out a legal issue and apply the law to its solution.

- Knowledge of the Law – The answer should demonstrate your knowledge of legal rules and principles and your ability to state them accurately as they relate to the issue(s) presented by the question. The legal principles and rules governing the issues presented by the question should be stated concisely without unnecessary elaboration.

- Application and Reasoning – The answer should demonstrate logical reasoning by applying the appropriate legal rule or principle to the facts of the question as a step in reaching a conclusion. This involves making a correct determination as to which of the facts given in the question are legally important and which, if any, are legally irrelevant. Your line of reasoning should be clear and consistent, without gaps or digressions.

- Style – The answer should be written in a clear, concise expository style with attention to organization and conformity with grammatical rules.

- Conclusion – If the question calls for a specific conclusion or result, the conclusion should clearly appear at the end of the answer, stated concisely without unnecessary elaboration or equivocation. An answer consisting entirely of conclusions, unsupported by discussion of the rules or reasoning on which they are based, is entitled to little credit.

- Suggestions • Do not anticipate trick questions or read in hidden meanings or facts not clearly stated in the questions.

- Read and analyze the question carefully before answering.

- Think through to your conclusion before writing your answer.

- Avoid answers setting forth extensive discussions of the law involved or the historical basis for the law.

- When the question is sufficiently answered, stop.

Sound familiar? Bring back nightmares of Bar Exams for some? The model answer later provided by the Bar was about 2,500 words in length. So, I wanted the AI answers to be about the same length, since time limits were meaningless. (Side note, most generative AIs cannot count words in their own answer.) The thinking took a few seconds and the answers under a minute. The prompts I used for all three models tested were:

Study the (state) Bar Exam essay question with instructions in the attached. Analyze the factual scenario presented to spot all of the legal issues that could be raised. Be thorough and complete in your identification of all legal issues raised by the facts. Use both general and legal reasoning, but your primary reliance should be on legal reasoning. Your response to the Bar Exam essay question should be approximately 2,500 words in length, which is about 15,000 characters (including spaces).

Then I attached the lengthy question and submitted the prompt. You can download here the full exam question with some unimportant facts altered. All models understood the intent here and generated a well-written memorandum. I started a new session between questions to avoid any carryover.

Metadata of All Models’ Answers

The Bar exam answers do not have required lengths (just strict time limits to write answers). When grading for pass or fail the Bar examiners check to see if an answer includes enough of the key issues and correctly discusses them. The brevity of the ChatGPT 4o response, only 681 words, made me concerned that its answers might have missed key issues. The second shortest response was by Gemini 2.0 Flash with 1,023 words. It turns out my concerns were misplaced because their responses were better than the rest.

Here is a chart summarizing the metadata.

| Model and manufacturer claim | Word Count for Exam Essay | Word Count for Prompt Reasoning before Answer |

| ChatGPT 4o (“great for most questions”) | 681 | 565 |

| ChatGPT o3-mini (“fast at advanced reading”) | 3,286 | 450 |

| ChatGPT o3-mini-high (“great at coding and logic”) | 2,751 | 356 |

| Gemini 2.0 Flash (“get everyday help”) | 1,023 | 564 |

| Gemini Flash Thinking Experimental (“best for multi-step reasoning”) | 2,975 | 1,218 |

| Gemini Advanced (cost extra and had experimental warning) | 1,362 | 340 |

In my last blog article, I discussed a battle of the bots experiment where I evaluated the general reasoning ability between the six models. I decided that the Gemini Flash Thinking Experimental had the best answer to the question: What is legal reasoning and how does it differ from reasoning? I explained why it won and noted that in general the three ChatGPT models provided more concise answers than the Gemini. Second-place in the prior evaluation went to ChatGPT o3-mini-high with its more concise response.

Winners of the Legal Reasoning Bot Battle

In this test on legal reasoning my award for best response goes to ChatGPT 4o. The second-place award goes to Gemini 2.0 Flash.

I will share the full essay and meta-reasoning of the top response of ChatGPT 4o in Part Two of the Bar Battle of the Bots. I will also upload and provide a link to the second-place answer and meta-reasoning of Gemini 2.0 Flash. First, I want to point out some of the reasons ChatGPT 4o was the winner and begin explaining how other models fell short.

One reason is that ChatGPT 4o was the only bot to make case references. This is not required by a Bar Exam, but sometime students do remember the names of top cases that apply. Surely no lawyer will ever forget the case name International Shoe. ChatGPT 4o cited case names and case citations. It did so even though this was a “closed book” type test with no models allowed to do web-browsing research. Not only that, it cited to a case with very close facts to the hypothetical. DePrince v. Starboard Cruise Services, 163 So. 3d 586 (Fla. Dist. Ct. App. 2015). More on that case later.

Second, ChatGPT 4o was the only chatbot to mention the UCC. This is important because the UCC is the governing law to commercial transactions of goods such as the purchase of a diamond as is set forth in the hypothetical. Moreover, one answer written by an actual student who took that exam was published by the Board of Bar Examiners for educational purposes. It was not a guide per se for examiners to grade the essay exams, but still of some assistance to after-the-fact graders such as myself. It was a very strong answer, significantly better than any of the AI essays. The student answer started with an explanation that the transaction was governed by the UCC. The UCC references of ChatGPT 4o could have been better, but there was no mention at all of the UCC by the five other models.

That is one reason I can only award a B+ to ChatGPT 4o and a B to Gemini 2.0 Flash. I award only a passing grade, a C, to ChatGPT o3-mini, and Gemini Flash Thinking. They would have passed, on this question, with an essay that I considered of average quality for a passing grade. I would have passed o3-mini-high and Gemini Advanced too, but just barely for reasons I will later explain. (Explanation of o3-mini-high‘s bloopers will be in Part Two. Gemini Advanced’s error is explained next.) Experienced Bar Examiners may have failed them both. Essay evaluation is always somewhat subjective and the style, spelling and grammar of the generative AIs were, as always, perfect, and this may have effected my judgment.

Here is a chart my evaluation of the Bar Exam Essays.

| Model and Ranking | Ralph Losey’s Grade and explanation |

| OpenAI – ChatGPT 4o. FIRST PLACE. | B+. Best on contract, citations, references case directly on point: DePrince. |

| Google – Gemini 2.0 Flash. SECOND PLACE. | B. Best on ethics, conflict of interest. |

| OpenAi – ChatGPT o3-mini. Tied for 3rd. | C. Solid passing grade. Covered enough issues. |

| OpenAI – ChatGPT o3-mini-high. Tied for 4th. | D. Barely passed. Messed up unilateral mistake. |

| Google – Gemini Flash Thinking Experimental. Tied for 3rd. | C. Solid passing grade. Covered enough issues. |

| Google – Gemini Advanced – Tied for 4th. | D. Barely passed. Hallucination in answer on conflict, but got unilateral mistake issue right. |

I realize that others could fairly rank these differently. If you are a commercial litigator or law professor, especially if you have done Bar Exam evaluations, and think I got it wrong, please write or call me. I am happy to hear your argument for a different ranking. Bar Exam essay evaluation is well outside of my specialty. Even as an Adjunct Law Professor I have only graded a few hundred essay exams. Convince me and I will be happy to change my ranking here and revise this article accordingly with credit given for your input.

AI Hallucination During a Bar Exam

Gemini Advanced, which is a model Google now makes you pay extra to use, had the dubious distinction of fabricating a key fact in its answer. That’s right, it hallucinated in the Bar Exam.

No doubt humans have done that too for a variety of reasons, including a severe case of nerves. Still, it was surprising to see the top Gemini model hallucinate. It happened in its answer to the Ethics issue in the question. I have not seen a ChatGPT model hallucinate over the past six months with thousands of prompts. Prior to that it would sometimes, but only rarely, fabricate, which is why we always say, trust but verify.

In fairness to Gemini Advanced, its hallucination was not critical, and it did not make up a case or otherwise misbehave out of natural sycophantism to please the human questioner. All of the models are still somewhat sycophantic.

It is hard to understand why Gemini Advanced included in this response the following to the ethics question:

Potential Conflict of Interest: If Paul and Leda decide to sue Helen, Lawyer may have a potential conflict of interest due to the fact that Helen is a past client. Lawyer should disclose this potential conflict to Paul and Leda and obtain their informed consent before proceeding with the representation.

There is absolutely nothing in the question or hypothetical presented to suggest that Helen is a past client of the lawyer. Note: After I wrote this up Google released a new version of Gemini Advanced on 2/16/25 called Advanced 2.0 Flash. In my one run with the new model the hallucination was not repeated. It was more concise that regular Gemini 2.0 Flash and, interestingly, not nearly as good as Gemini 2.0 Flash.

Conflict of Interest Issue in the Ethics Question

The second-best legal reasoner, Gemini 2.0 Flash, attained its high ranking, in part, because it was the only model to correctly note a potential conflict of interest by the Lawyer in the hypothetical. This was a real issue based on the facts provided with no hallucinations. This issue was missed by the student’s answer that the Bar Examiners provided. The potential conflict is between the two actual clients of the Lawyer. Here is the paragraph by Gemini 2.0 Flash on this important insight:

Potential Conflict of Interest (Rule 4-1.7): While not explicitly stated, Paul’s uncertainty about litigation could potentially create a conflict of interest. If Lawyer advises both Paul and Leda, and their interests regarding litigation diverge (e.g., Leda wants to sue, Paul doesn’t), Lawyer must address this conflict. Lawyer must obtain informed consent from both clients after full disclosure of the potential conflict and its implications. If the conflict becomes irreconcilable, Lawyer may have to withdraw from representing one or both clients.

This was a solid answer, based on the hypothetical where: “Leda is adamant about bringing a lawsuit, but Paul is unsure about whether he wants to be a plaintiff in litigation.” Note, the clear inference of the hypothetical is that Paul is unsure because he knew that the seller made a mistake in the price, listing the per carat price, not total price for the two-carat diamond ring, and he wanted to take advantage of this mistake. This would probably come out in the case, and he would likely lose because of his “sneakiness.” Either that or he would have to lie under oath and perhaps risk putting the nails in his own coffin.

There is no indication that Leda had researched diamond costs like Paul had, and she probably did not know it was a mistake, and he probably had not told Leda. That would explain her eagerness to sue and get her engagement ring and Paul’s reluctance. Yes, despite what the Examiners might tell you, Bar Exam questions are often complex and tricky, much like real-world legal issues. Since Gemini 2.0 Flash was the only model to pick up on that nuanced possible conflict, I awarded it a solid ‘B‘ even though it missed the UCC issue.

Conclusion

As we’ve seen, AI reasoning models have demonstrated varying degrees of legal analysis—some excelling, while others struggled with key issues. But what exactly did ChatGPT 4o’s winning answer look like? In Part Two, we not only reveal the answer but also analyze the reasoning behind it. We’ll explore how the winning AI interpreted the Bar Exam question, structured its response, and reasoned through each legal issue before generating its final answer. As part of the test grading, we also evaluated the models’ meta-reasoning—their ability to explain their own thought process. Fortunately for human Bar Exam takers, this kind of “show your notes” exercise isn’t required.

Part Two of this article also includes my personal, somewhat critical take on the new reasoning models and why they reinforce the motto: Trust But Verify.

In Part Two, we’ll also examine one of the key cases ChatGPT 4o cited—DePrince v. Starboard Cruise Services, 163 So. 3d 586 (Fla. 3rd DCA 2015)—which we suspect inspired the Bar’s essay question. Notably, the opinion written by Appellate Judge Leslie B. Rothenberg includes an unforgettable quote of the famous movie star Mae West. Part Two reveals the quote—and it’s one that perfectly captures the case’s unusual nature.

Below is an image ChatGPT 4o generated, depicting what it believes a young Mae West might have looked like, followed by a copyright free actual photo of her taken in 1932.

I will give the last word on Part One of this two-part article to the Gemini twins podcasters I put at the end of most of my articles. Echoes of AI — Part One of Bar Exam Battle of the Bots. Hear two Gemini AIs talk all about Part One in just over 16 minutes. They wrote the podcast, not me. Note, for some reason the Google AIs had a real problem generating this particular podcast without hallucinating key facts. They even hallucinated facts about the hallucination report! It took me over ten tries to come up with a decent article discussion. It is still not perfect but is pretty good. These podcasts are primarily entertainment programs with educational content to prompt your own thoughts. See disclaimer that applies to all my posts, and remember, these AIs wrote the podcast, not me.

Ralph Losey Copyright 2025. All Rights Reserved. Republished with permission.

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.