[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in this article were created by Ralph Losey using ChatGPT 4o (Omni) unless noted. This article is published here with permission.]

If you’re afraid of artificial intelligence, you’re not alone, and you’re not wrong to be cautious. AI is no longer science fiction. It’s embedded in the apps we use, the decisions that affect our lives, and the tools reshaping work and creativity. But with its power comes real risk.

In this article, we break down the seven key dangers AI presents, and more importantly, what you can do to avoid them. Whether you’re a beginner or a seasoned pro, understanding these risks is the first step toward using AI safely, confidently, and effectively.

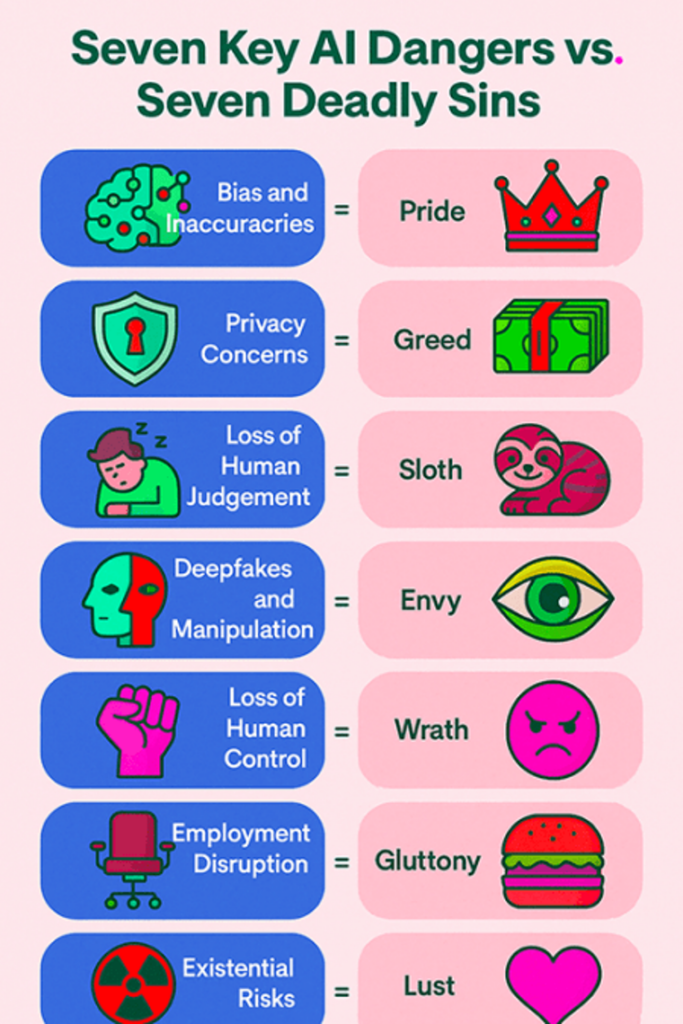

- Bias and Inaccuracies: AI systems may reinforce harmful biases and misinformation if their training data is flawed or biased.

- Privacy Concerns: Extensive data collection by AI platforms can compromise personal privacy and lead to misuse of sensitive information.

- Loss of Human Judgment: Over-reliance on AI might diminish our ability to make independent decisions and critically evaluate outcomes.

- Deepfakes and Manipulation: AI can create convincing fake content that threatens truth, trust, and societal stability.

- Loss of Human Control: Automation of critical decisions might reduce human oversight, creating potential for serious unintended consequences.

- Employment Disruption: AI-driven automation could displace workers, exacerbating economic inequalities and social tensions.

- Existential and Long-term Risks: Future advanced AI, such as AGI, could become misaligned with human interests, posing significant existential threats.

Going Deeper Into the Seven Dangers of AI

These risks are real, and ignoring them would be foolish. Yet managing them through education, thoughtful engagement, and conscious platform selection is both possible and practical.

1. Bias and Inaccuracies. AI is only as unbiased and accurate as its training data. Misguided reliance can perpetuate discrimination, misinformation, or harmful stereotypes. For instance, facial recognition systems have shown biases against minorities due to skewed training data, leading to wrongful accusations or arrests. Similarly, employment screening algorithms have occasionally reinforced gender biases by systematically excluding female candidates for certain positions. Here are two action items to try to control this danger:

- Individual Action: Regularly cross-verify AI-generated results and use diverse data sources.

- Societal Action: Advocate for transparency and fairness in AI algorithms, ensuring ethical oversight and diverse representation in data.

2. Privacy Concerns. AI platforms often require extensive data collection to operate effectively. This can lead to serious privacy risks, including unauthorized data sharing, breaches, or exploitation by malicious actors. Examples include controversies involving smart assistants or social media algorithms collecting vast personal data without clear consent, resulting in regulatory actions and heightened public mistrust. Here are two action items to try to control this danger:

- Individual Action: Be cautious about data sharing; carefully manage permissions and privacy settings.

- Societal Action: Push for robust data protection regulations and transparent AI platform policies.

3. Loss of Human Judgment. Dependence on AI for decision-making may gradually erode human judgment and critical thinking skills. For instance, medical professionals overly reliant on AI diagnostic tools might overlook important symptoms, reducing their ability to critically assess patient conditions independently. In legal contexts, automated decision-making tools risk undermining judicial discretion and nuanced human assessments. Here are two action items to try to control this danger:

- Individual Action: Maintain active engagement and critical analysis of AI outputs; use AI as a support tool, not a substitute.

- Societal Action: Promote education emphasizing critical thinking, independent analysis, and AI literacy.

4. Deepfakes and Manipulation. Advanced generative AI can fabricate convincing falsehoods, severely challenging trust and truth. Deepfake technology has already been weaponized politically and socially, from falsifying statements by world leaders to creating harmful misinformation campaigns during elections. This technology can cause reputational harm, escalate political tensions, and erode public trust in media and institutions. Here are two action items to try to control this danger:

- Individual Action: Develop media literacy and critical evaluation skills to detect manipulated content.

- Societal Action: Establish clear guidelines and tools for identifying, reporting, and managing disinformation.

5. Loss of Human Control. The automation of critical decisions in fields like healthcare, finance, and military operations might reduce essential human oversight, creating risks of catastrophic outcomes. Autonomous military drones, for instance, could cause unintended casualties without sufficient human control. Similarly, algorithm-driven trading systems have previously triggered costly flash crashes in global financial markets. Here are two action items to try to control this danger:

- Individual Action: Insist on transparent human oversight mechanisms, especially in sensitive or critical decision-making.

- Societal Action: Demand legal frameworks that mandate human accountability and control in high-stakes AI systems.

6. Employment Disruption. Rapid AI-driven automation threatens employment across many industries, potentially causing significant societal disruption. Job displacement is particularly likely in sectors like transportation (e.g., self-driving trucks), retail (automated checkout systems), and even professional services (AI-driven legal research tools). Without proactive economic and educational strategies, these disruptions could exacerbate income inequality and social instability. Here are two action items to try to control this danger:

- Individual Action: Continuously develop adaptable skills and pursue ongoing education and training.

- Societal Action: Advocate for proactive workforce retraining programs and adaptive economic strategies to cushion transitions.

7. Existential and Long-term Risks. The theoretical future of AI, especially Artificial General Intelligence (AGI), brings existential concerns. AGI could eventually become powerful enough to outsmart human control and act against human interests, whether through unintended misalignment or malicious programming. Prominent voices, including tech leaders and ethicists, call for rigorous alignment research to ensure future AI systems adhere strictly to beneficial human values. Here are two action items to try to control this danger:

- Individual Action: Stay informed about AI developments and support ethical AI research and responsible innovation.

- Societal Action: Engage with policymakers to ensure rigorous safety standards and ethical considerations guide future AI developments.

Human Nature in the Code: How AI Reflects What Some Believe Are Our Oldest Vices

I had an odd thought after writing the first draft of this article and deciding to limit the top dangers to seven. Is there any correlation between the seven AI dangers and what some Christians call the seven cardinal sins, also called the seven deadly sins? It turns out that an interesting comparison can be made. So, I tweaked the title to say cardinal, instead of key, to set up this comparison. You don’t have to be religious to recognize the wisdom in many age-old warnings about human excess, ego, and temptation. The alignment is not about doctrine; it’s about timeless human tendencies that can shape technology in unintended ways.

- Bias and Inaccuracies ↔ Pride: Our overconfidence in AI’s objectivity reflects the classic danger of pride: treating ourselves and our creations as flawless.

- Privacy Concerns ↔ Greed: The extraction and monetization of personal data mirrors the insatiable hunger for more, regardless of the ethical cost.

- Loss of Human Judgment ↔ Sloth: Intellectual and moral laziness, delegating too much to AI without critical thought, reflects a modern version of sloth.

- Deepfakes and Manipulation ↔ Envy: The use of AI to impersonate, defame, or deceive arises from envy—reshaping reality to diminish others.

- Loss of Human Control ↔ Wrath: Autonomous systems, including weapons, that lack ethical oversight can scale aggression and retribution, embodying systemic wrath.

- Employment Disruption ↔ Gluttony: Over-automation in pursuit of ever-greater output and profit, with little concern for human impact, reveals corporate gluttony.

- Existential Risks ↔ Lust: Humanity’s unrestrained desire to build omnipotent machines reflects a lust for ultimate power—an echo of the oldest temptation.

Whether you view these as moral metaphors or cultural parallels, they offer a reminder: the greatest risks of AI don’t come from the machines themselves, but from the very human impulses we embed in them.

Skilled Use Beats Fearful Avoidance

Fear can be a useful alarm but shouldn’t dictate complete avoidance. Skilled individuals who actively engage with AI can responsibly manage these dangers, transforming potential pitfalls into opportunities for growth and innovation. Regular education, deliberate practice, and informed skepticism are essential.

For example, creative professionals can significantly expand their potential by using AI image generators like DALL·E or the new 4o (Omni) to quickly prototype visual concepts or generate detailed artistic elements that would traditionally require extensive manual effort. Similarly, content creators and writers can harness AI-driven tools such as ChatGPT or Google Gemini to rapidly brainstorm ideas, refine drafts, or check for clarity and consistency, dramatically reducing production time while enhancing quality.

In professional and technical fields, AI is instrumental in optimizing workflows. Legal professionals adept at using generative AI can efficiently conduct detailed legal research, automate repetitive document drafting tasks, and quickly extract insights from vast amounts of textual data, significantly reducing manual workload and enabling them to focus on high-level strategic tasks.

Moreover, in data analytics and problem-solving scenarios, skilled AI users can leverage advanced algorithms to identify patterns, trends, and correlations invisible to human analysts. For instance, businesses increasingly use predictive analytics driven by AI to forecast consumer behavior, manage risks, and guide strategic decisions. In healthcare, experts proficient with AI diagnostic tools can rapidly and accurately detect illnesses from medical imaging, improving patient outcomes and operational efficiency.

Education and deliberate practice are crucial because the effectiveness of AI is directly proportional to user expertise. Skilled use involves not only technical proficiency but also critical judgment—knowing when to trust AI’s recommendations and when to question or override them based on domain expertise and context awareness. Responsible users continuously educate themselves about AI advancements, limitations, and ethical considerations, ensuring their application of AI remains thoughtful, strategic and ethical.

Thus, education and practice empower all users to responsibly integrate AI into their workflows, which enhances productivity, accuracy, creativity, and strategic impact.

The knowledge gained from experience gives us the power to take the individual and societal actions necessary to contain the seven key AI dangers and others that may arise in the future. Familiarity with a tool allows us to avoid its dangers. AI is much like a high-speed buzz saw. It is, at first, very dangerous and difficult to use. With time and experience, the skills gained greatly reduce these dangers and allow for ever more complex cutting tasks.

Beginners: Caution is Your Best Friend

If you’re new to AI, proceed with caution. It is just words, but there are still dangers, much like using a sharp saw. Begin with simple tasks, build your skills incrementally, and regularly verify outputs. Daily interaction and study help you become adept at recognizing potential issues and avoiding dangers.

Beginners face greater risks primarily due to their unfamiliarity with AI’s strengths, weaknesses, and possible hazards. Without experience, it’s harder to spot misleading or biased information, inaccuracies, or privacy concerns that experienced users notice immediately.

Begin your AI journey with simple, low-risk tasks. Ask straightforward informational questions, experiment with creative writing prompts, or use AI for basic brainstorming. This incremental approach helps you understand how generative AI works and what to expect from its outputs. As your comfort with AI grows, gradually tackle more complex or significant tasks. This progressive exposure will refine your ability to critically evaluate AI outputs, identify inconsistencies, and notice subtle biases or inaccuracies.

Practice, combined with clear guidance, enhances your proficiency with AI systems. Those who regularly read and write typically adapt more quickly because AI is fundamentally a language-generating machine. By consistently interacting with tools like ChatGPT, you’ll sharpen your ability to recognize potential issues, determine how AI can effectively support your tasks, and safely integrate AI into important decisions. Regular engagement often leads to delightful moments of surprise and insight as AI’s suggestions become increasingly meaningful and valuable.

Ultimately, regular and thoughtful use reduces risks by improving your skill in independently assessing AI-generated content. Becoming proficient with AI requires careful, consistent practice and study, along with healthy skepticism, critical thinking, and diligent verification.

Conclusion – Embracing AI with Eyes Wide Open

The fear of AI is real—and it’s not foolish. It comes from a deep place of concern: concern for truth, for privacy, for jobs, for control, and for the future of our species. That kind of fear deserves respect, not ridicule.

But fear alone won’t protect us. Only skill, knowledge, and steady practice will. AI is like a power tool: dangerous in the wrong hands, powerful in the right ones. We must all learn how to use it safely, wisely, and on our terms, not someone else’s, and certainly not on the machine’s.

This isn’t just about understanding AI. It’s about understanding ourselves. Are the seven deadly sins somehow mirrored in today’s AI? That wouldn’t be surprising. After all, AI is trained on human language—on our books, our news, our history, and our habits. The real danger may not be the tech itself, but the humanity behind it.

That’s why we can’t afford to turn away in fear. We need the voices, judgment, and courage of those wise enough to be wary. So, summon your courage. Don’t leave this to others. Learn. Practice. Stay engaged. That’s how we keep AI human-centered and aligned with the values that matter most.

Learn AI so you can help shape the future—before it shapes you. Learn how to use it, and teach others. Like it or not, we are all now facing the same existential question:

Are you ready to take control of AI—before it takes control of you?

I give the last word, as usual, to the Gemini twin podcasters that summarize the article, Echoes of AI on: “Afraid of AI? Learn the Seven Cardinal Dangers and How to Stay Safe.” They wrote the podcast, not me.

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.