[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in the article are by Ralph Losey using AI. This article is published here with permission.]

Humans are inherently pattern-seeking creatures. Our ancestors depended upon recognizing recurring patterns in nature to survive and thrive, such as the changing of seasons, the migration of animals and the cycles of plant growth. This evolutionary advantage allowed early humans to anticipate danger, secure food sources, and adapt to ever-changing environments. Today, the recognition and interpretation of patterns remains a cornerstone of human intelligence, influencing how we learn, reason, and make decisions.

Pattern recognition is also at the core of artificial intelligence. In this article, I will test the ability of advanced AI, specifically ChatGPT, to uncover meaningful new patterns across different fields of knowledge. The goal is ambitious: to discover genuine epiphanies—true moments of insight that expand human understanding and open new doors of knowledge—while avoiding the pitfalls of apophenia, the human tendency to perceive illusions or false connections. This experiment probes an age-old tension: can AI reliably distinguish between genuine breakthroughs and compelling yet misleading illusions?

We will begin by exploring the risks of apophenia, understanding how this psychological tendency can mislead human and possibly AI perception. Throughout, videos created by AI will help illustrate key points and vividly communicate these ideas. There are twelve new videos in Part One and another fourteen in Part Two.

Apophenia: Avoiding the Pitfalls of False Patterns

We humans are masters of pattern detection, but our ability has hindrances. Primary among them is our limited information and knowledge, followed by our tendency to see patterns that are not there. We tend to assume the stirring we hear in the bushes is a tiger ready to pounce when really it is just the breeze. Evolution tends to favor this phobia. So, although we can and frequently do miss real patterns and fail to recognize the underlying connections between things, we often make patterns up too.

Here it is hoped that AI will boost our abilities on both fronts. It will help us to uncover true new patterns, genuine epiphanies, moments where profound insights emerge clearly from the complexity of data. At the same time, AI may expose illusions, false connections we mistakenly believe are real due to our natural cognitive biases. Even though we have made great progress over the millennia in understanding the Universe, we still have a long way to go to see all of the patterns, to fully understand the Universe, and to free ourselves of superstitions and delusions. We are especially weak at seeing patterns that are intertwined across different fields of knowledge.

Apophenia is a kind of mental disorder where people think they see patterns that are not there and sometimes even hallucinate them. Most of the time when people see patterns, for instance, faces in the clouds, they know it cannot be real and there is no problem. But sometimes when people see other images, for instance, rocks on Mars that look like a face, or even images on toast, they delude themselves into believing all sorts of nonsense. For instance, the below 10-year-old grilled cheese sandwich, which supposedly bears the image of the Virgin Mary, sold to an online casino on eBay in 2004 for $28,000.

In a similar vein, some people suffering from apophenia are prone to posit meaning, or causality, in unrelated random events. Sometimes the perception of a new pattern is a spark of genius that is later verified. Think of Einstein’s epiphany at age 16 when he visualized chasing a beam of light. New pattern recognition can lead to great discoveries or detect real tigers in the bush. Epiphanies are rare but transformative moments, like Einstein’s visualization of chasing a beam of light, Newton’s realization of gravity beneath the apple tree, or the insights behind Darwin’s theory of evolution. They genuinely advance human understanding. Apophenia, by contrast, deceives with illusions: patterns that seem meaningful but lead nowhere.

It is probably more often the case that when people “see” new connections and then go on to act upon them with no attempts to verify, they are dead wrong. When that happens, psychologists call this apophenia, the tendency to see meaningful patterns where none exist. This can lead to strange and aberrant behaviors: burning of witches, superstitious cosmology theories, jumping at shadows, addiction to gambling.

Unfortunately, it is a natural human tendency to think you see meaningful patterns or connections in random or unrelated data. That is a major reason casinos make so much money from poor souls suffering from a form of apophenia called the Gambler’s Fallacy. Careful scientists look out for defects in their own thinking and guide their experiments accordingly.
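The Gambler’s Fallacy can be checked with a short simulation. The sketch below is only an illustration under simple assumptions: a fair coin, independent flips, and a hypothetical function name (`heads_rate_after_tail_streak`) that is mine and not drawn from any source. It measures how often heads follows a run of tails. If a streak really made heads “due,” the rate would sit well above one half.

```python
import random


def heads_rate_after_tail_streak(flips: int, streak: int = 3, seed: int = 0) -> float:
    """Estimate P(heads) on flips that immediately follow `streak` or more tails.

    Assumes a fair coin with independent flips (an illustrative assumption).
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    tails_run = 0              # length of the current run of tails
    followups = 0              # flips observed right after a long tails run
    heads_after = 0            # how many of those flips came up heads
    for _ in range(flips):
        is_heads = rng.random() < 0.5
        if tails_run >= streak:
            followups += 1
            heads_after += int(is_heads)
        tails_run = 0 if is_heads else tails_run + 1
    return heads_after / followups if followups else float("nan")


rate = heads_rate_after_tail_streak(flips=200_000, streak=3)
print(f"Heads rate after 3+ tails in a row: {rate:.3f}")
```

The printed rate should land close to 0.5, which is the point: the coin has no memory, and the pattern the gambler feels in the streak is not in the data.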

In everyday life, apophenia can also cause some people, even scientists, academics and professionals, to have phobic fears of conspiracies and other severe paranoid delusions. Think of John Nash, a Nobel Prize-winning mathematician, and the movie A Beautiful Mind, which so dramatically portrayed his paranoid schizophrenia and involuntary hospitalization in 1959. Think of politics in the U.S. today. Are there really lizard people among us? In some cases, as we’ve seen with Nash, apophenia can lead to severe schizophrenia.

The Greek roots of the now generally accepted medical term apophenia are:

- Apo- (ἀπο-): Meaning “away from,” “detached,” “from,” “off,” or “apart”.

- Phainein (φαίνειν): Meaning “to show,” “to appear,” or “to make known”.

The word was first coined by Klaus Conrad, an otherwise apparently despicable person whom I am reluctant to cite, but feel I must, due to the general acceptance of the word and diagnosis today. Conrad was a German psychiatrist and Nazi who experimented on German soldiers returning from the eastern front during WWII. He coined the term in his 1958 publication on this mental illness. Per Wikipedia:

He defined it as “unmotivated seeing of connections [accompanied by] a specific feeling of abnormal meaningfulness”. He described the early stages of delusional thought as self-referential over-interpretations of actual sensory perceptions, as opposed to hallucinations.

Apophenia has also come to describe a human propensity to unreasonably seek definite patterns in random information, such as can occur in gambling.

Apophenia can be considered a commonplace effect of brain function. Taken to an extreme, however, it can be a symptom of psychiatric dysfunction, for example, as a symptom in schizophrenia, where a patient sees hostile patterns (for example, a conspiracy to persecute them) in ordinary actions.

Apophenia is also typical of conspiracy theories, where coincidences may be woven together into an apparent plot.

Can AI Be Infected with a Human Illness?

It is possible that generative AI, based as it is on human language, may have the same propensities. That is unknown as of yet, and so my experiments here were on the lookout for such errors. It could be one of the causes of AI hallucinations.

In information science a mistake in seeing a connection that is not real, an apophenia, leads to what is called a false positive. This technical term is well known in e-discovery law, where AI is used to search large document collections. When the patterns analyzed suggest a document is relevant, and it is not, that mistake is called a false positive. It is like a human apophenia. The AI can also detect patterns that cause it to predict a document is irrelevant when in fact the document is relevant. That is a false negative. There is a pattern, a connection, that was not seen. That can be a bad thing in e-discovery because it often leads to withholding production of a relevant document, which can in turn lead to court sanctions.
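The bookkeeping behind these terms is simple enough to sketch in a few lines of Python. This is a minimal illustration, not the method of any particular review platform: the names `ReviewCounts`, `tally_review`, `precision` and `recall` are hypothetical, and the six-document sample is made up. It compares AI relevance predictions against verified coding, counts the false positives and false negatives, and derives the two ratios commonly used to summarize them.

```python
from dataclasses import dataclass


@dataclass
class ReviewCounts:
    """Outcome counts from comparing AI predictions with verified coding."""
    true_positive: int   # predicted relevant, actually relevant
    false_positive: int  # predicted relevant, actually irrelevant
    false_negative: int  # predicted irrelevant, actually relevant
    true_negative: int   # predicted irrelevant, actually irrelevant


def tally_review(predicted: list[bool], actual: list[bool]) -> ReviewCounts:
    """Count each outcome type; True means 'relevant'."""
    if len(predicted) != len(actual):
        raise ValueError("predicted and actual must have the same length")
    tp = fp = fn = tn = 0
    for pred, truth in zip(predicted, actual):
        if pred and truth:
            tp += 1
        elif pred and not truth:
            fp += 1
        elif not pred and truth:
            fn += 1
        else:
            tn += 1
    return ReviewCounts(tp, fp, fn, tn)


def precision(counts: ReviewCounts) -> float:
    """Share of documents flagged relevant that really are relevant."""
    flagged = counts.true_positive + counts.false_positive
    return counts.true_positive / flagged if flagged else 0.0


def recall(counts: ReviewCounts) -> float:
    """Share of truly relevant documents that the AI found."""
    relevant = counts.true_positive + counts.false_negative
    return counts.true_positive / relevant if relevant else 0.0


# Hypothetical six-document sample (made-up data for illustration only).
predicted = [True, True, False, False, True, False]
actual = [True, False, True, False, True, False]
counts = tally_review(predicted, actual)
print(counts)
print(f"precision={precision(counts):.2f} recall={recall(counts):.2f}")
```

In this made-up sample there is one false positive (a pattern seen that was not there) and one false negative (a real pattern missed). Low precision signals too many illusions; low recall signals too many missed connections.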

In e-discovery it is well known that AI consistently has far lower false positive and false negative rates than human reviewers, at least in large document reviews. Generative AI may also be more reliable and astute than we are, but maybe not. This is a new field. So we should always be on the lookout for false positives and false negatives in AI pattern recognition. That is one lesson I learned well, and sometimes the hard way, in my ten years of working with predictive coding type AI in e-discovery (2012-2022). In the experiments described in this article we will look for apophenic mistakes.

It is my hope that advanced AI, properly trained and validated, can provide a counterbalance to human gullibility by rigorously filtering signal from noise. Unlike the human brain, which often leaps to conclusions, AI can be programmed to ground its pattern recognition in evidence, statistical rigor, and cross-validation, if we build it that way and supervise it wisely.

Still, we must beware that the pattern-recognizing systems of AI may suffer from some of our delusionary tendencies. The best practices discussed here will consider both the positive and negative aspects of AI pattern recognition. We must avoid the traps of apophenia. We must stay true to the scientific methods and verify any new patterns purportedly discovered. Thus all opinions reached here will necessarily be lightly held and subject to further experimentation by others.

From Data to Insight: The Power of New Pattern Recognition

Modern AI models, including neural networks and transformer architectures like GPT-4, excel at uncovering subtle patterns in massive datasets far beyond human capability. This ability transforms raw data into actionable insights, thereby creating new knowledge in many fields, including the following:

Protein Structures. Models like Google DeepMind’s AlphaFold have already revolutionized protein structure prediction, achieving high success rates in predicting the 3D shapes of proteins from their amino acid sequences. This ability is crucial for understanding protein function and designing new drugs and medical therapies. Demis Hassabis and John Jumper of DeepMind shared the 2024 Nobel Prize in Chemistry for their work on AlphaFold.

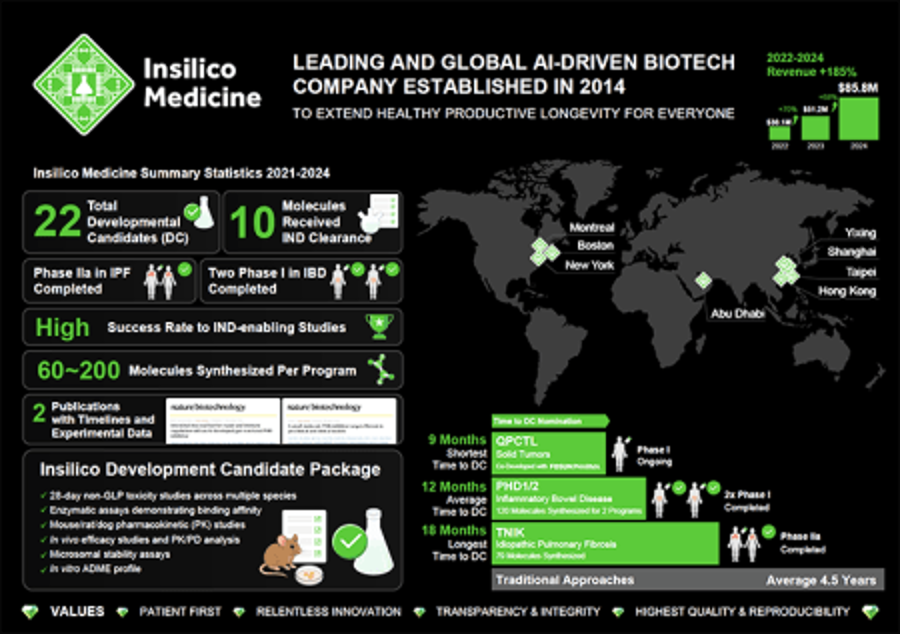

Medical Science. Generative AI models are now being used extensively in medical research, including analysis and proposals of new molecules with desired properties to discover new drugs and accelerate FDA approval. For example, Insilico Medicine uses its AI platform, Pharma.AI, to develop drug candidates, including ISM001_055, for idiopathic pulmonary fibrosis (IPF). Insilico Medicine lists over 250 publications on its website reporting on its ongoing research, including a recent paper on its IPF discovery: A generative AI-discovered TNIK inhibitor for idiopathic pulmonary fibrosis: a randomized phase 2a trial (Nature Medicine, June 03, 2025). This discovery is especially significant because it is the first entirely AI-discovered drug to reach FDA Phase II clinical trials. Below is an infographic of Insilico Medicine showing some of its current work:

Also see Fronteo, a Japan-based research company, and its Drug Discovery AI Factory.

Materials Science. Google DeepMind’s Graph Networks for Materials Exploration (“GNoME”) has already identified millions of new stable crystals, significantly expanding our knowledge of materials science. This discovery represents an order-of-magnitude increase in known stable materials. Merchant and Cubuk, Millions of new materials discovered with deep learning (DeepMind, 2023). Also see, 10 Top Startups Advancing Machine Learning for Materials Science (6/22/25).

Climate Science and Environmental Monitoring. Generative AI models are beginning to improve climate simulations, leading to more accurate predictions of climate patterns and future changes. For example, Microsoft’s Aurora forecasting model is trained on Earth science data and goes beyond traditional weather forecasting to model the interactions between the atmosphere, land, and oceans. This helps scientists anticipate events like cyclones, air quality shifts, and ocean waves with greater accuracy, allowing communities to prepare for environmental disasters and adapt to climate change. See, e.g., Stanley et al., A Foundation Model for the Earth System (Nature, May 2025).

Historical and Artistic Revelations

AI is also helping with historical research. A new AI system was recently used to analyze one of the most famous Latin inscriptions: the Res Gestae Divi Augusti. It has always been thought to simply be an autobiographical inscription, which literally translates from Ancient Latin as “Deeds of the Divine Augustus.” But when a specialty generative AI, Aeneas (again based on Google’s models), compared this text with a large database of other Latin sayings, the famous Res Gestae Divi Augusti inscription was found to share subtle language parallels with other Roman legal documents. The analysis uncovered “imperial political discourse,” or messaging focused on maintaining imperial power, an insight, a pattern, that had never been seen before. Assael, Sommerschield, Cooley, et al., Contextualizing ancient texts with generative neural networks (Nature, July 2025).

The paper explains that the communicative power of these inscriptions is not only shaped by the written text itself “but also by their physical form and placement” and that “about 1,500 new Latin inscriptions are discovered every year.” So the patterns analyzed included not only the words, but a number of other complex factors. The authors assert in the Abstract that their work with AI analysis shows:

… how integrating science and humanities can create transformative tools to assist historians and advance our understanding of the past.

In art and music, pattern detection has mapped the evolution of artistic styles in tandem with technological change. In a 2025 studio-lab experiment, a generative AI bass model (“BassNet”) unexpectedly rendered multiple melodic lines within single harmonic tones, exposing previously unnoticed structures in popular music bass compositions. This discovery was written up in Deruty and Grachten, Insights on Harmonic Tones from a Generative Music Experiment (arXiv, June 2025). Their paper shows how AI can surface new musical patterns and deepen our understanding of human auditory perception.

As explained in the Abstract:

During a studio-lab experiment involving researchers, music producers, and an AI model for music generating bass-like audio, it was observed that the producers used the model’s output to convey two or more pitches with a single harmonic complex tone, which in turn revealed that the model had learned to generate structured and coherent simultaneous melodic lines using monophonic sequences of harmonic complex tones. These findings prompt a reconsideration of the long-standing debate on whether humans can perceive harmonics as distinct pitches and highlight how generative AI can not only enhance musical creativity but also contribute to a deeper understanding of music.

Legal Practice: From Precedent to Prediction

The legal profession has benefited from traditional rule-based statistical AI for over a decade, with predictive coding and similar applications. It is now starting to apply the new generative AI models in a variety of new applications. For instance, it can be used to uncover latent themes and trends in judicial decisions that human analysis has overlooked.

This was done in a 2024 study using ChatGPT-4 to perform a thematic analysis on hundreds of theft cases from Czech courts. Drápal, Savelka, Westermann, Using Large Language Models to Support Thematic Analysis in Empirical Legal Studies (arXiv, February 2024).

The goal of the analysis was to discover classes of typical thefts. GPT-4 analyzed fact patterns described in the opinions and human experts did the same. The AI not only replicated many of the themes identified by the human experts, but, as the report states, also uncovered a new one that the humans had missed: a pattern of “theft from gym” incidents. This shows that generative AI can sift through vast case datasets and detect nuanced fact patterns, or criminal modus operandi, that were previously undetected by experts (here, three law students under supervision of a law professor).

Another study in early 2025 applied Anthropic’s Claude 3-Opus to analyze thousands of UK court rulings on summary judgment, developing a new functional taxonomy of legal topics for those cases. Sargeant, Izzidien, Steffek, Topic classification of case law using a large language model and a new taxonomy for UK law: AI insights into summary judgment (Springer, February 2025). The AI was prompted to classify each case by topic and identify cross-cutting themes.

The results revealed distinct patterns in how summary judgments are applied across different legal domains. In particular, the AI found trends and shifts over time and across courts – insights that allow new, improved understanding of when and in what types of cases summary judgments tend to be granted. These patterns were found despite the fact that U.K. case law lacks traditional topic labels. This kind of AI-augmented analysis illustrates how generative models can discover hidden trends in case law for improved effectiveness by practitioners.

Even sitting judges have begun to leverage generative AI to inform their decision-making, revealing new analytical angles in litigation. In a notable 2024 concurrence, Judge Kevin Newsom of the Eleventh Circuit admitted to experimenting with ChatGPT to interpret an ambiguous insurance term (whether an in-ground trampoline counted as “landscaping”). Snell v. United Specialty Ins. Co., 102 F.4th 1208 (11th Cir. May 28, 2024). Also see, Ralph Losey, Breaking News: Eleventh Circuit Judge Admits to Using ChatGPT to Help Decide a Case and Urges Other Judges and Lawyers to Follow Suit (e-Discovery Team, June 3, 2024) (includes full text of the opinion and Appendix and Losey’s inserted editorial comments and praise of Judge Newsom’s language).

After querying the LLM, Judge Newsom concluded that “LLMs have promise… it no longer strikes me as ridiculous to think that an LLM like ChatGPT might have something useful to say about the common, everyday meaning of the words and phrases used in legal texts.” In other words, the generative AI was used as a sort of massive-scale case law analyst, tapping into patterns of ordinary usage across language data to shed light on a legal ambiguity. This marked the first known instance of a U.S. appellate judge integrating an LLM’s linguistic pattern analysis into a written opinion, signaling that generative models can surface insights on word meaning and context that enrich judicial reasoning.

My Ask of AI to Find New Patterns

Now for the promised experiment to try to find at least one new connection, one previously unknown, undetected pattern linking different fields of knowledge. I used a combination of existing OpenAI and Google models to help me in this seemingly quixotic quest. To be honest, I did not have much real hope for success, at least not until release of the promised ChatGPT5 and whatever Google calls its counterpart, which I predict will be released the following week (or day). Plus, the whole thing seemed a bit grandiose, even for me, to try to get AI to boldly go where no one has gone before.

Absurd, but still I tried. I won’t go through all of the prompt engineering involved, except to say it involved my usual complex, multi-layered, multi-prompt, multimodal-hybrid approach. I tempered my goals by directing ChatGPT4o, when I started the process, to seek new patterns that were useful, not Nobel Prize winning breakthroughs, just useful new patterns. I directed it to find five such new patterns and gave it some guidance as to fields of knowledge to consider, including of course, law. I asked for five new insights thinking that with such a big ask I might get one success.

Note, I write these words before I have received the response, but after I have written the above to help guide ChatGPT4o. Who knows, it might achieve some small modicum of success. Still, it feels like a crazy Quixotic quest. Incidentally, the title character of Don Quixote (1605) by Miguel de Cervantes (1547-1616) does seem to be a person afflicted with apophenia. Will my AI suffer a similar fate?

I designed the experiment specifically with this tension in mind between epiphanies, representing genuine insights and real advances in knowledge, and illusions, which are merely plausible yet misleading patterns. One of my goals was to probe AI’s capacity to distinguish one from the other.

Overview of Prompt Strategy and Time Spent

First, I spent about an hour with ChatGPT4o to set up my request by feeding it a copy of the article as written so far. I also chatted with it about the possibility of AI finding new patterns between different fields of knowledge. Then I just told ChatGPT4o to do it, to find a new interconnective pattern. ChatGPT4o “thought” (processed only) for just a few minutes. Then it generated a response that purported to provide me with the requested five new patterns. It did so based on its existing training and review of this article.

As requested, it did not use its browser capabilities to search the web for answers. It just “looked within” and came up with five insights it thought were new. Almost that easy. I lowered my expectations accordingly before reading the output.

That was the easy part. After reading the response, I spent about 14 hours over the next several days doing quality control. The QC work used multiple other AIs, from both OpenAI and Google, to go online and research these claims, evaluate their validity (both good and bad), engage in “deep-think,” look for errors, especially signs of AI apophenia, and otherwise offer contrarian criticisms. After that, I also asked the other AIs to suggest improvements to the wording of the five claims and to rank them by importance. The various rewordings were not too helpful, but the rankings were, and so were many of the editorial comments.

The 14 hours in QC does not include the approximately 6 hours of machine time by the Gemini and OpenAI models to do deep think and independent research on the web to verify or disprove the claims. My actual 14 hours included traditional Google searches to double check all citations as per my “trust but verify” motto. My 14 hours also included my time to read (I’m pretty fast) and skim most of the key articles that the AI research turned up, although frankly some of the articles cited were beyond my knowledge levels. I tried to up my game, but it was hard. These other models also generated hundreds of pages of both critical and supportive analysis, which I also had to read. Finally, I probably put another 24 hours into researching and writing this article (it took over a week), so this is one of my larger projects. I did not record the number of hours it took to design and generate the 26 videos because that was recreational.

Part Two of this article is where I will make the reveal. Was this experiment another comic story of a Don Quixote type (me) and his sidekick Sancho Panza (AI), lost in an apophenia neurosis? Or perhaps it is another story altogether? Neither hot nor cold? Stay tuned for Part Two and find out.

PODCAST

As usual, we give the last words to the Gemini AI podcasters who chat between themselves about the article. It is part of our hybrid multimodal approach. They can be pretty funny at times and provide some good insights. This episode is called Echoes of AI: Epiphanies or Illusions? Testing AI’s Ability to Find Real Knowledge Patterns. Part One. Hear the young AIs talk about this article. They wrote the podcast, not me.

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.