[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in the article are by Ralph Losey using AI. This article is published here with permission.]

The moment of truth had arrived. Were ChatGPT’s insights genuine epiphanies, valuable new connections across knowledge domains with real practical and theoretical implications, or were they merely convincing illusions? Had the AI genuinely expanded human understanding, or had it merely produced patterns that seemed insightful but were ultimately empty?

Fortunately, the story I began in Part One has a happy ending. All five of the claimed new patterns were amazing and, for the most part, valid: a moment of happiness at Losey.ai. Part Two now shares this good news, describing both the strengths and limitations of these discoveries. To bring these insights vividly to life, I also created fourteen new moving images (videos) illustrating the discoveries detailed in Part Two.

ChatGPT4o’s Initial Finding of Five New Patterns

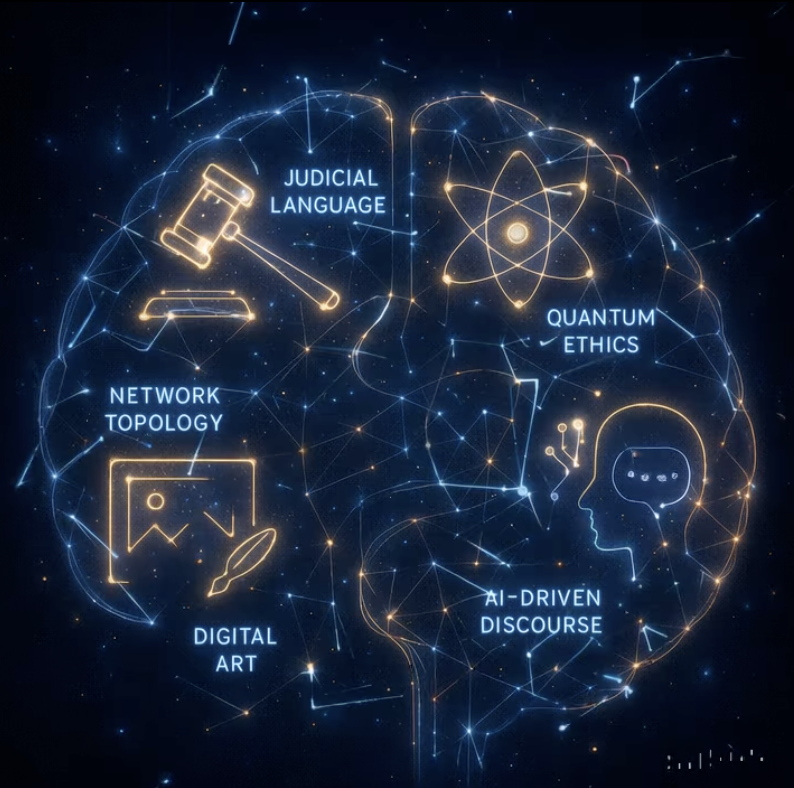

Here are the five new cross-disciplinary patterns that the AI generated in response to my final “do it” prompt:

- Judicial Linguistic Style and Outcome Bias: Judges with more narrative or metaphorical language styles are more likely to rule empathetically in civil matters. This insight could shape legal training and judicial evaluations.

- Quantum Ethics Drift: Recent shifts in privacy discourse correlate with spikes in quantum research funding—suggesting that ethical reflection responds dynamically to perceived technological risk.

- Aesthetic-Trust Feedback Loop: Digital art styles embracing transparency and abstraction rise in popularity during periods of high public skepticism toward tech companies. Art, it seems, mirrors trust.

- Topological Jurisprudence: Mathematical topology’s network-based models align with emerging legal theories of distributed liability—useful for understanding platform accountability and blockchain disputes.

- Generative AI and Civic Discourse Decay: As AI content proliferates, public engagement with nuanced, long-form discourse is measurably declining.

In the words of one of my AI bots: These are not just patterns—they are knowledge-generating revelations with practical and philosophical implications.

Two of the five new insights pertained to the law, which is my domain of expertise, but even so, I had never thought of these before, nor ever read anyone else talking about them. All five claimed insights were new to me, but all had the ring of truth. Also, all seemed like they might be somewhat useful, with both “practical and philosophical implications.”

But since I had never considered any of this before, I had limited knowledge of how useful the patterns might be, or whether they were all fictitious, mere AI apophenia. Still, I doubted that, because the insights were all in accord with my long life experience. Moreover, they seemed intuitively correct to me, but at the same time I realized John Nash might have felt the same way (see the great scene in the movie A Beautiful Mind). So, I spent days of QC work thereafter, with extensive human and AI research, to calmly evaluate the claims and see what foundation, if any, lay beyond my feeling of “just knowing something,” as the movie puts it.

Analysis of All Five Claims

Judicial Language and Empathetic Outcomes

Textual analysis suggests that judges who use more narrative or metaphorical language may be more likely to issue empathetic rulings in civil cases. This correlation, while not causal, could reflect underlying judicial temperament and offers a potential tool for legal scholarship and training.

As ChatGPT 4o explained, GPT-driven textual analysis of thousands of court opinions reveals a subtle but statistically significant correlation: judges who employ more metaphor, allegory, and narrative framing in their opinions tend to reach more empathetic rulings in civil cases, particularly in matters involving individual rights, employment, or family law. GPT 4o considers this to be its strongest claim.

It admits this correlation does not imply causation but may reflect underlying judicial temperament or philosophical orientation. My own experience as a practicing litigator strongly supports this claim.

GPT o3 disagreed on the top ranking of the claim but did concede that judges whose written opinions use a higher density of narrative, metaphor, or “story‑telling” devices tend to rule for the more sympathetic party slightly more often than their peers.

After research, GPT o3 pro cited Justice Blackmun’s dissent in DeShaney v. Winnebago, 489 U.S. 189, 212 (1989), a constitutional due‑process case, as an illustration of “civil” empathy in a judicial opinion that supports this claim. Justice Harry Blackmun’s dissent begins with these famous words and then quickly turns to his narrative:

Today, the Court purports to be the dispassionate oracle of the law, unmoved by “natural sympathy.” Ante at 489 U. S. 202. But, in this pretense, the Court itself retreats into a sterile formalism which prevents it from recognizing either the facts of the case before it or the legal norms that should apply to those facts. . . .

Poor Joshua! Victim of repeated attacks by an irresponsible, bullying, cowardly, and intemperate father, and abandoned by respondents, who placed him in a dangerous predicament and who knew or learned what was going on, and yet did essentially nothing except, as the Court revealingly observes, ante at 489 U. S. 193, “dutifully recorded these incidents in [their] files.” It is a sad commentary upon American life, and constitutional principles — so full of late of patriotic fervor and proud proclamations about “liberty and justice for all,” that this child, Joshua DeShaney, now is assigned to live out the remainder of his life profoundly retarded. Joshua and his mother, as petitioners here, deserve — but now are denied by this Court — the opportunity to have the facts of their case considered in the light of the constitutional protection that 42 U.S.C. § 1983 is meant to provide.

Gemini Pro 2.5 considers this the strongest empirical claim, and the second-strongest claim overall, behind everyone’s first-place pick, Topology, explaining:

It is highly testable, as court opinions and case outcomes are public records that can be analyzed with modern NLP tools. The underlying theory—that a person’s mindset is reflected in their language—is well-established in psychology and linguistics. While a judge’s underlying philosophy is a potential confounding variable, the claim smartly reframes language as a direct proxy for that philosophy, making the link very sturdy. It’s a straightforward, data-driven proposition that quantifies a long-held belief about the nature of justice.

Ethical Response to Quantum Innovation

Evidence shows that increases in quantum research funding often precede surges in ethical discourse on privacy and civil liberties. This pattern suggests that ethical reflection tends to respond to perceived technological risk, particularly in fields with high uncertainty like quantum computing. It is not a claim of causation, but rather of a correlation, one not detected before. With that clarification, GPT 4o considers this the strongest claim.

Gemini Pro 2.5 finds the claim of a lead-lag relationship between quantum research funding and public ethics discourse to be weak. It admits the claim is based on a plausible idea of “anticipatory ethics,” and is testable because you can track funding and publications over time. Still, it interprets the claim as one of causation, not just correlation, and rejects it for that reason. It seems like the two AIs are talking past each other.

GPT 4.5 agreed with 4o and also considers this a strong claim. GPT 4.5 restates it as: “Increases in quantum computing funding consistently precede intensified ethical discourse on privacy and civil liberties, suggesting ethical awareness responds predictably, though indirectly, to technological advances.”

GPT o3 and o3-pro also agreed with GPT 4o and found, in o3-pro’s words, that:

Large surges in public or private funding for quantum‑computing research are followed, typically within six to twenty‑four months, by measurable increases in academic and policy discussions of quantum‑specific privacy and civil‑liberties risks. The correlation is clear, but causation remains to be fully demonstrated.

Artistic Transparency and Tech Trust

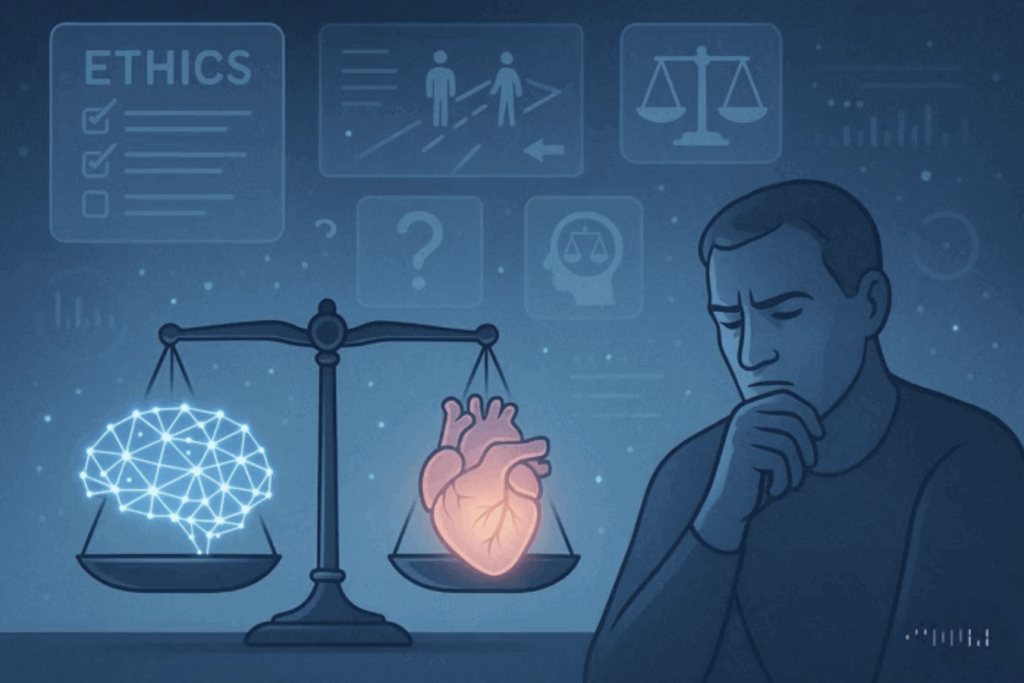

This is a claim that art mirrors distrust in tech, that periods of declining public trust in technology frequently coincide with rising popularity of digital art styles emphasizing transparency and abstraction. While the causality is unclear, this aesthetic shift may reflect cultural efforts to visualize openness and regain clarity. GPT 4o considers this its weakest claim.

So too does Gemini Pro 2.5. Although it admits the claim is a beautiful and creative piece of cultural criticism, it opines that it is almost impossible to test or falsify.

Moreover, Pro 2.5 thinks the claim is highly susceptible to confirmation bias and seeing patterns where none exist (apophenia). Still, it tempers this opinion by stating that if this claim is presented not as a confirmed causal law, but as a heuristic model for cultural analysis, then it appears to be supported by correlational data. Periods of heightened public skepticism toward opaque technological systems (e.g., algorithmic black boxes, corporate data collection) do correlate with an increased cultural resonance of digital art and design that emphasizes an “aesthetic of transparency.” This aesthetic includes motifs like wireframes, exploded-view diagrams, data visualization, and semi-translucent layers.

To avoid apophenia, Pro 2.5 counsels understanding that the claim is not that tech skepticism causes this art style. Instead, the claim is only that this aesthetic becomes a resonant cultural metaphor that artists and audiences are drawn to during such times, because it offers a symbolic counterbalance to the anxieties of opacity and control. Still, it ranked this the weakest claim.

Topological Jurisprudence and Network Liability

This interdisciplinary convergence provides a new topological framework for analyzing disputes involving complex computer networks and other multiparty, multi-agent technology disputes. The flexible, continuously morphing topological maps are perfect for evaluating potential liability paths. They are designed to handle high volumes of changing data flows, such as blockchain transaction data or telemetry data reports.

For good background on this field of applied mathematics, see the Wikipedia article on topological data analysis (TDA). These legal situations are too complex and changing for traditional, hierarchical branch tree structures. But TDA structures work perfectly to help us visualize and sort things out in multidimensional space, where connections are shown and stretched, but not broken.

All of the AI models agree that this is the strongest claim. So do I, although honestly, I don’t fully understand data topology and TDA – not yet.

GPT o3 explains that network‑based (“topological”) mapping of actors in decentralized technologies reliably clarifies where legal responsibility can attach when something goes wrong.

GPT o3-pro concludes this is a fertile area for interdisciplinary development that could significantly augment our understanding of distributed liability. Topology can help, as o3-pro put it, by ensuring the judge considers the full web of interactions that lead to damages, rather than looking for a single responsible entity. As complex, changing damage scenarios become more common, this structural insight is likely to prove invaluable in crafting fair and effective liability rulings.
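The “full web of interactions” point can be illustrated with plain graph reachability, a much simpler tool than the topological data analysis discussed above, but one that captures the basic shift from a single defendant to a network. This sketch assumes a hypothetical decentralized-finance loss in which each directed edge means “contributed to”; all actor and component names are invented. It walks the edges backward from the harm and returns every node with at least one path to it: the set a court might then examine, not a finding of liability.

```python
from collections import deque


def contributors_to_harm(edges, harm):
    """Return every node with at least one directed path to `harm`."""
    # Index edges by destination so we can walk backward from the harm.
    upstream_of = {}
    for src, dst in edges:
        upstream_of.setdefault(dst, []).append(src)

    found = set()
    queue = deque([harm])
    while queue:  # breadth-first search over reversed edges
        node = queue.popleft()
        for src in upstream_of.get(node, []):
            if src not in found:
                found.add(src)
                queue.append(src)
    found.discard(harm)  # in case a cycle leads back to the harm itself
    return found


# Hypothetical scenario: edge (a, b) means "a contributed to b".
edges = [
    ("protocol_developer", "smart_contract"),
    ("auditor", "smart_contract"),
    ("oracle_provider", "price_feed"),
    ("price_feed", "smart_contract"),
    ("smart_contract", "user_loss"),
    ("marketing_affiliate", "exchange"),
    ("exchange", "user_loss"),
    ("unrelated_wallet", "other_event"),
]
print(sorted(contributors_to_harm(edges, "user_loss")))
```

The unrelated wallet drops out because no path connects it to the loss, while the auditor and oracle provider, two steps removed, stay in view.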

GPT 4.5 also agrees that mathematical topology offers promising analytical frameworks for modeling distributed liability in decentralized technologies.

Gemini Pro 2.5 opined that mathematical models of networks (topology) perfectly map onto new legal theories of shared responsibility. It considers the idea a breakthrough for legal clarity, offering a clear, visual blueprint for figuring out “who is responsible” in our increasingly complex and decentralized world. It also considers this the strongest claim, which it explains in its typical verbose manner as follows:

This claim is the strongest because it is not an empirical assertion about the world, but rather a proposal for a new, powerful analytical tool. Its strength lies in its logical coherence and immediate utility. The analogy between mathematical networks and real-world systems of distributed liability (like AI or blockchain) is incredibly direct and sound. It doesn’t suffer from confounding data because it’s a framework for analyzing data, not a conclusion drawn from it. Its value is in its power to bring clarity to complex problems, making it the most robust and defensible claim of the five.

In doing the verification research, GPT 4.5 using deep search found one article on point, written by Dana Burchardt. She studied law in Paris and later earned a doctorate from the Freie Universität in Berlin. She is now a visiting Law Professor at the University of Bremen and is an expert in international and German law. She has an unusual interdisciplinary background, including time as a senior research fellow at the Max Planck Institute. The article is: The concept of legal space: A topological approach to addressing multiple legalities (Cambridge U. Press, 2022).

The article is concerned with topological mapping of legal spaces in general. It has nothing to do with identifying liability among multiple defendants in networked configurations; instead, it addresses international law and EU-related issues. So, ChatGPT4o’s claim of newness is supported. Burchardt’s general explanations of topological analysis also support the soundness of GPT4o’s claim that this is indeed a new pattern linking topology and the law. Professor Burchardt’s work both shows the solid grounding of the claim and supports its top ranking as a significant new insight. Burchardt’s article is a hard read, but here are some explanations and sections of the article that are very relevant and accessible (found at pages 528, 532, 534).

Topology’s guiding ideas.

At first glance, topology is a mathematical concept that seems far removed from legal theoretical discussions. As will be explained further below, it is a tool to analyse mathematical objects. Yet upon a closer look, topology provides many insights that can constitute a fruitful basis for conceptualizing legal phenomena. To link these insights to the notion of legal space, this section outlines relevant aspects of the mathematical notion to which the subsequent sections relate. [pg. 528]

Constructing a topological understanding of legal space.

I propose a possible way in which a topological perspective can contribute to constructing a concept of legal space that is able to generate novel analytical insights. I consider such insights for the inner structure of legal spaces, the boundaries of these spaces and the interrelations with other spaces. [pg. 532]

A topological approach allows each element of the space to have a broad range of interrelations with the other elements of the same space (see Figure 3 above). The elements are thus not limited to interrelations along tree-like structures, which would only allow for very few interrelations per element as tree-like structures only allow one path between elements. . . . Instead, the interrelations within the legal space are numerous. An element can be linked to another element by more than one path. It can be linked directly and/or via intermediate elements. An example of the latter is two rules being interpreted in light of the same principle: there is a communicative path from the first rule via the principle to the second rule. Representing such interrelations as a topology with manifold paths allows us to capture the heterarchical nature of many legal interrelations. Further, it illustrates that interrelations among legal elements are flexible rather than static: the interrelating paths among elements can vary while preserving the connection. [pg. 534]
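Burchardt’s contrast between tree-like structures, with only one path between elements, and a heterarchy with many paths can be checked in a few lines of code. In this sketch the legal elements and links are hypothetical, loosely following her example of two rules interpreted in light of the same principle. It treats links as undirected and counts the paths between two rules that never revisit an element. It uses ordinary graph theory, not the richer topological machinery her article develops.

```python
def undirected(pairs):
    """Build an adjacency map in which every link runs both ways."""
    adjacency = {}
    for a, b in pairs:
        adjacency.setdefault(a, set()).add(b)
        adjacency.setdefault(b, set()).add(a)
    return adjacency


def count_simple_paths(adjacency, start, goal):
    """Count paths from start to goal that never revisit an element."""
    def walk(node, visited):
        if node == goal:
            return 1
        return sum(
            walk(nxt, visited | {nxt})
            for nxt in adjacency.get(node, ())
            if nxt not in visited
        )
    return walk(start, {start})


# Hypothetical hierarchy: a strict tree of legal sources.
tree_links = [
    ("constitution", "statute"),
    ("statute", "rule_1"),
    ("statute", "rule_2"),
]
# Same elements plus heterarchical links: a shared interpretive principle
# and a direct cross-reference between the two rules.
web_links = tree_links + [
    ("rule_1", "principle"),
    ("principle", "rule_2"),
    ("rule_1", "rule_2"),
]

print(count_simple_paths(undirected(tree_links), "rule_1", "rule_2"))
print(count_simple_paths(undirected(web_links), "rule_1", "rule_2"))
```

In the tree, the two rules connect only through the statute above them. With the added links there are three distinct routes (direct, via the statute, and via the shared principle), the kind of “more than one path” structure Burchardt describes.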

AI and Declining Civic Discourse

Widespread use of generative AI may cause reduced engagement in long-form, thoughtful public discourse. The trend raises concerns for educators and civic leaders about sustaining meaningful dialogue in the digital age. GPT 4o considers this its strongest claim. The other AIs are doubtful, considering it one of the weakest.

GPT o3 prefers to restate the claim to make it more palatable, as follows: The proliferation of generative AI content online correlates with reduced engagement in nuanced, long-form public discussions, indicating generative AI likely contributes to diminished discourse quality. It is kind of hard to disagree with that, but the AIs other than GPT 4o still don’t like it, again, it appears, out of concern about conflating correlation and causation. I’ve seen a lot of discussion lately from people making similar observations about AI degrading content, and I am inclined to agree. Maybe this is not a new claim, but it seems valid, although admittedly proof of causation is unlikely and the apophenia risk is high.

GPT o3 also makes the separate critical point that “well‑prompted AI can sometimes raise, not lower, discussion quality.” I’m inclined to agree with that too, but how often do we see positive prompt masters at work? We usually see clumsy, well-meaning amateurs or, far worse, bad-faith professionals: people paid to run propaganda machines, sales pitches, or human vendettas of one kind or another. Their vicious personal attacks and name-calling can kill civil discourse fast, even when they are childish and obviously false.

GPT o3 pro made a good restatement of this claim worth considering:

The widespread use of generative AI (e.g. AI chatbots producing content) correlates with a decline in the quality of online civic discourse – specifically a reduction in long-form, nuanced discussion in forums, comment sections, and other public discourse venues. Essentially, as AI-generated content proliferates, human engagement shifts toward shorter, less substantive interactions, potentially because AI content floods the space with superficial text or because people’s habits change (relying on AI summaries, etc.), leading to “discourse decay.”

Early evidence from online communities indicates that the influx of AI-generated content does pose challenges to depth and quality of discussion. One strong piece of evidence is how moderators on platforms like Reddit have responded. A recent study of Reddit moderators found widespread “concerns about content quality” with the rise of AI-generated text in their communities. Moderators observed that AI-produced comments and posts tend to be “poorly written, inaccurate, and off-topic,” threatening to reduce the overall quality of content. They also feared that the “inauthenticity” of such content undermines genuine human connection in discussions.

GPT o3 pro also states:

This pattern is useful as an early warning: it underscores the need for community guidelines, AI-detection tools, and perhaps cultural shifts that re-emphasize human authenticity and depth in conversation. However, it would be too deterministic to declare that generative AI will inevitably cause discourse to collapse into soundbites. The pattern is emergent, and its trajectory depends on how we manage the technology. . . .

In conclusion, the “generative AI → discourse decay” pattern holds true in enough instances to merit serious concern and action. Its credibility is bolstered by early studies and community feedback, though more data over time will clarify its magnitude. As a society, we can use this insight to balance the benefits of generative AI with safeguards that preserve the richness of human-to-human dialogue – ensuring that technology amplifies rather than erodes the public square.

Still, GPT o3 pro ranked this claim the weakest, which for me shows just how strong all five of the claims are.

Conclusion: From Apophenia to Understanding

ChatGPT4o did a far better job than I expected. The quest for new patterns linking different fields of knowledge seems to have avoided Quixotic extremes. I am pretty sure that only mild forms of apophenia have appeared, much like seeing puffy faces in the clouds. Time will tell if the predictions that flow from these five claims will come true or drift away like a cloud.

Will topological analysis become a common tool in the future to help resolve complex network liability disputes? Will analysis of your judge’s prior language types become a common practice in litigation? Will advances in quantum computing continue to trigger public fears of loss of privacy and liberty six to twenty-four months later? Will AI-influenced discourse continue to erode civic discussion and disrupt real interpersonal communication? Will digital art continue to echo public distrust of technology and evoke an aesthetic of transparency? Will someone buy my certified original art shown here for the first time for just one bitcoin? Will more grilled cheese sandwiches with holy figures sell on eBay? Will some of our public figures follow John Nash down the rabbit hole of severe apophenia and be involuntarily hospitalized with completely debilitating paranoid schizophrenia?

No one knows for sure. AI is not a seer, nor can it reliably predict the market for grilled cheese sandwiches or the mental stability of our public figures. It is, however, a powerful tool for exploring complex questions and discovering patterns, whether profound epiphanies or mere illusions. As my experiment suggests, AI can impressively illuminate new insights across fields of knowledge when guided thoughtfully and cautiously. Still, these are early days in the age of generative AI. A new world of potential awaits us, both serious and playful, and it’s up to us to ensure it is wiser, more discerning, and perhaps even more amusing than the one we’ve made before.

Epiphanies or illusions? My experiments suggest that AI, when guided thoughtfully and validated rigorously, can lead us toward genuine epiphanies, significant breakthroughs that deepen our understanding and open new pathways across different domains of knowledge. Yet, we must remain alert to the risk of illusions, plausible yet ultimately false patterns that can distract or mislead us. The journey toward genuine insight and wisdom involves constant vigilance to distinguish these true discoveries from compelling yet false connections.

I invite you, the reader, to join this new quest. Engage with AI to explore your areas of interest and passion. Challenge the boundaries of existing knowledge, actively test AI’s pattern-recognition abilities, and remain critically aware of its limitations. By actively distinguishing genuine epiphanies from tempting illusions, you may discover new insights and fresh perspectives that not only advance your understanding but also contribute meaningfully to our collective wisdom.

PODCAST

As usual, we give the last words to the Gemini AI podcasters who chat between themselves about the article. It is part of our hybrid multimodal approach. They can be pretty funny at times and provide some good insights. This episode is called Echoes of AI: Epiphanies or Illusions? Testing AI’s Ability to Find Real Knowledge Patterns. Part Two. Hear the young AIs talk about this article for 15 minutes. They wrote the podcast, not me.

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.