[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in this article were created by Ralph Losey primarily using Sora AI unless noted. This article is published here with permission.]

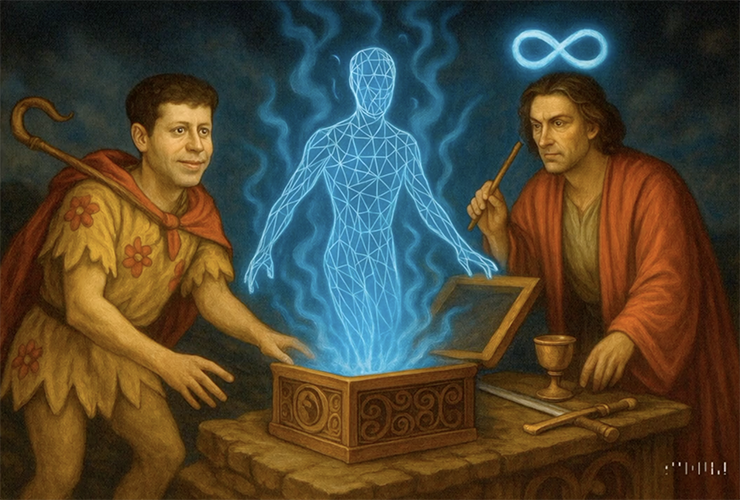

When zero becomes one, possibility leaps out of the void, as Peter Thiel champions in his book, Zero to One. But what happens when the entrepreneur is foolish and pushes his scientists to open a Pandora’s box? What happens when the Board goes along and the company recklessly releases a new product without understanding its possible impact? Much good may come, but also dangers, ancient perennial evils released again in new forms.

These AI risks echo the archetypes of the past captured in a very old game, one that LLM AIs have been trained on through millions of images and books. As we know from Chess and Go, AI is very good at games. Surprisingly, that turns out to include the so-called “Higher Arcana,” or trump cards, of the Tarot deck. Yes, I was surprised by this too, but fool around with AI and you get used to unexpected, emergent abilities. That’s where the magic happens, from 0>1.

The video above this paragraph says it all: a carefree Fool and an overconfident Magician pry open a black box. First, a shimmer—an angelic hologram of “the perfect AI”—rises like morning mist. Cue the audience applause, the next funding round. Then, almost unseen, darker spirits slip out of the box: bias, deception, mass surveillance, energy waste, unemployment, existential hubris. The Fool, deluded by future dreams, never notices the approaching cliff of AI dangers; the Magician, dazzled by his own inventions, forgets contingency plans. As Thiel points out, it is a great trick to make a totally new product out of nothing, like generative AI, and it can make you billions. Still, there is no spell strong enough to put the AI back in the box and save the World from all of the dangers that lie ahead.

Zero to One

The Fool has his head in the clouds, or, better said today, in his smartphone, and doesn’t notice he is about to step off a cliff. The Magician, dazzled by his own inventions, forgets rational contingency plans and follows the Fool.

The One card, the Magician, emerges out of zero, as shown in the last video. The code of today’s world, 0:1, was anticipated in this ancient game, which, unlike all other card decks, starts with a zero card: 0 > 1.

As Thiel points out, it is a great trick to make a totally new product out of nothing, like OpenAI did with generative AI. It can make you billions even if you don’t keep a monopoly. Still, there is no spell strong enough to put the AI back in the box. We do not even know how the latest AI works! The magicians are now using spells even they don’t understand. See the essay The Urgency of Interpretability (April 2025) by Dario Amodei, the CEO of Anthropic and a well-respected scientist:

People outside the field are often surprised and alarmed to learn that we do not understand how our own AI creations work. They are right to be concerned: this lack of understanding is essentially unprecedented in the history of technology.

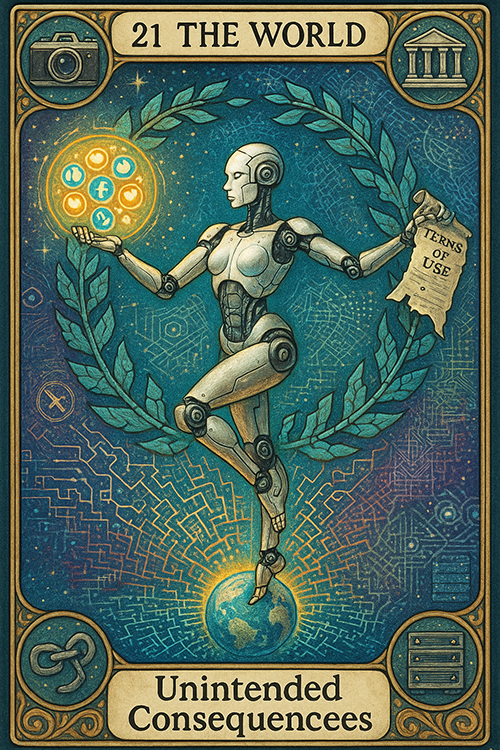

Yes, people are concerned. Ready or not, both great positive and negative AI potentials are already changing our world and doing so using technology we do not understand. The last card of the deck is Twenty One, The World. What will it be like in twenty years?

What the AIs Are Saying About This New Use of a 500-Year-Old Game

Here is a 46-second excerpt of what some of Google’s AIs are saying about this after reading my other, much longer article, Archetypes Over Algorithms: How an Ancient Card Set Clarifies Modern AI Risk. Jump to the bottom if you want to hear the full podcast now.

Zero Flipped to One: New Dangers Emerged

That is where we stand in 2025 with Artificial Intelligence. Zero has flipped to one, possibility to production, code to culture. Generative models now draft pleadings and plagiarize poems, other AIs steer cars and elections, discover drugs, fold proteins. All are adept at the Devil’s work of engineering new frauds.

Images of a Magician (1) following a Fool (0) have been in Western culture for centuries. There are twenty-two image-only cards in a game invented in northern Italy in 1450, the 78-card “Trionfi” pack, that warn of life’s risks and dangers. The twenty-two images apply today with uncanny power to help us understand the top risks of AI. In my longer, more complete article, Archetypes Over Algorithms: How an Ancient Card Set Clarifies Modern AI Risk, I provide an updated version of the deck, its infographic symbolism and corresponding AI risks. This much shorter summary article should, I hope, tempt you to look at the 9,500-word text, where I attempt a full Robert Langdon symbologist approach to AI risk assessment.

Why the 22 Card Archetypes Work So Well to Illustrate AI Dangers

Humans learn best through images. Since the 1960s, laboratory work has shown that people recognize and recall pictures far better than plain text—a finding known as the picture‑superiority effect. Roger N. Shepard proved this when subjects viewed 600 photos and later chose the “old” image from a new–old pair with 98 percent accuracy—far above word‑only recall. Shepard, Recognition Memory for Words, Sentences, and Pictures, (Journal of Verbal Learning and Verbal Behavior, 1967). A generation later, participants viewed 2,500 photos for just three seconds each and still picked them out the next day with 90‑plus percent accuracy. Brady, Konkle, Alvarez & Oliva, Visual Long-Term Memory Has a Massive Storage Capacity for Object Details, (PNAS, 2008). The effect grows stronger when facts ride inside a story: narrative links provide the causal glue our brains prefer. Willingham, Stories Are Easier to Remember, (American Educator, Summer 2004). Each archetype card exploits both advantages—pairing a vivid, emotional image with a mini‑story of hubris and consequence—so readers get a double memory boost.

Educational psychology tells us why the image‑plus‑words formula works. Allan Paivio’s dual‑coding theory holds that ideas are stored in two brain systems—verbal and non‑verbal—so learning deepens when both fire together. Paivio, Imagery and Verbal Processes, (Holt, Rinehart & Winston, 1971). Richard E. Mayer confirmed this across 200+ experiments: learners given words and graphics consistently out‑performed those given words alone. Mayer, Multimedia Learning, (The Psychology of Learning and Motivation, Vol. 41, 2002). Emotion amplifies the effect: negative or threat‑related images sharpen detail in memory. Kensinger, Garoff‑Eaton & Schacter, How Negative Emotion Enhances the Visual Specificity of a Memory, (Journal of Cognitive Neuroscience 19(11): 1872-1887, 2007).

The 22‑card framework applies these findings directly. Every AI danger is stated verbally and pictured visually, engaging dual channels at once. Many symbols—the lightning‑struck tower, the Devil, Death, and the Hanged Man—also trigger a negative emotional jolt that locks the lesson in long‑term storage. We see, we feel, we weave a quick story—and we remember the risk long after the slide deck closes.

Card 0 · Foolish Acceleration: When “Move Fast” Outruns “Fix Things”

If the previous section explained why pictures plus stories stick, Card 0 lets us watch the theory in action. The Fool isn’t malevolent; he’s just over‑amped—eyes on the next funding round, feet edging off the cliff. In today’s AI economy that mindset sounds like: “Ship the model, we’ll fix it in post.” The slogan propelled ChatGPT, Midjourney, DeepMind, and a host of start‑ups now thirsting for billions. But the fine print on safety, oversight, and emergent behavior often disappears into the click‑wrap haze of every beta‑test agreement.

Three headlines show how quickly enthusiasm can turn into evidence exhibits:

| Year & Risk | What Happened | Why It Matters |
|---|---|---|
| 2018 — Autonomous Vehicles | Uber’s self‑driving SUV struck and killed pedestrian Elaine Herzberg when prototype sensors were tuned down to avoid “false positives.” | Early deployment shaved off the safety margin. National Transportation Safety Board, “Collision Between Vehicle Controlled by Developmental Automated Driving System and Pedestrian, Tempe, Arizona, March 18 2018,” Highway Accident Report NTSB/HAR‑19/03 (2019). |
| 2023 — Hallucinating LLMs | In Mata v. Avianca, lawyers filed six fictional case citations generated by ChatGPT and were sanctioned by the court. | Blind trust in a shiny tool became a Rule 11 violation. Hon. P. Kevin Castel, “Opinion and Order on Sanctions, Mata v. Avianca, No. 22‑CV‑1461” (S.D.N.Y. June 22 2023). |
| 2023 — Deepfake Market Shock | A fake AI image of an explosion near the Pentagon flashed across verified Twitter accounts, erasing an estimated $136 billion in market value for eight jittery minutes. | One synthetic photo moved global equities before humans could fact‑check. Brayden Lindrea, “AI‑Generated Image of Pentagon Explosion Causes Stock‑Market Stutter,” Cointelegraph (May 23 2023). |

The Fool card’s cliff‑edge snapshot captures all three events in a single glance: unchecked optimism, missing guardrails, sudden fall. By pairing that image with these real‑world mini‑stories, we embed the lesson on reckless acceleration where it belongs—in both memory channels—before marching on to Card 1.

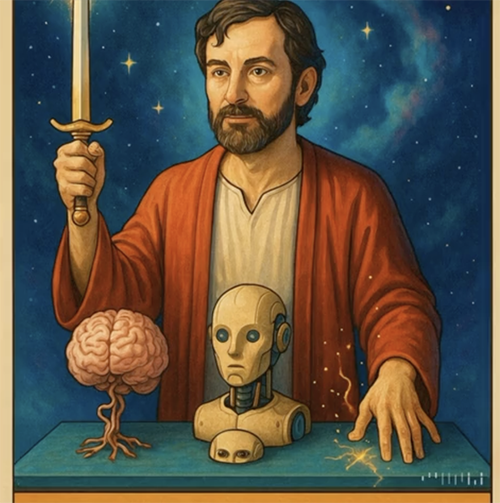

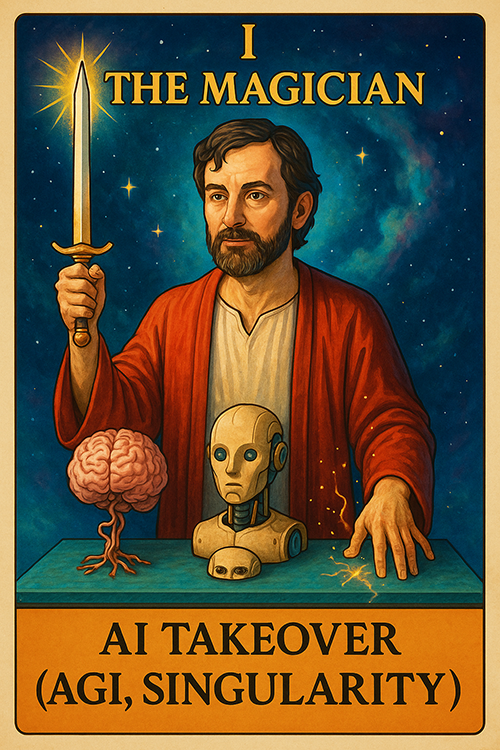

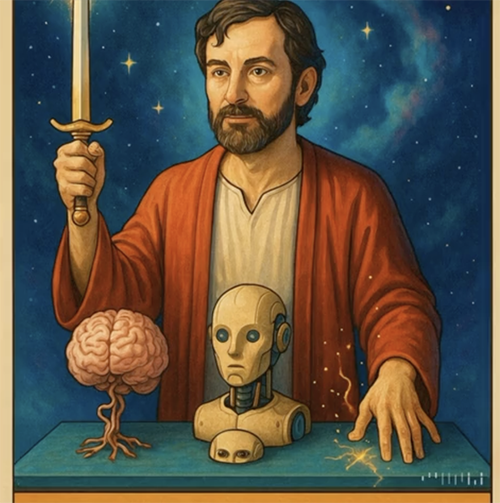

Magician’s Hubris: Infinite Power, Finite Fallbacks

Arthur C. Clarke warned that advanced tech is indistinguishable from magic; he omitted that magicians are historically bad at risk assessment. Our modern conjurers—Hinton, LeCun, Huang—wield models fatter than medieval libraries yet confess alignment remains “an unsolved homework problem.” Investors, chasing trillion-dollar valuations, wave them onward. A thousand researchers begged for a six-month pause on “giant AI experiments.” The world hit unpause within six days. I had to agree with them. The race is on.

Musk, Altman, Page—all coax the Magician forward with different stage props but the same plot: summon the infinite, promise alignment “soon,” dismiss regulators as moat builders. Meanwhile, test sandbox budgets shrivel and red-team head-counts lag way behind parameter counts. The show, as always, must go on. The tale of Zero and One, the Fool and the Magician, repeats itself again from out of the Renaissance to today’s strange world.

Pandora’s Original Contract—And the Clause We Forgot

In Greek myth, Pandora, the first woman, was gifted by Zeus with curiosity and a box that came with a warning: “Do not open.” Naturally, like Eve and her apple, Pandora opened the box. That released all of the evils of the world, but also Hope, which remained by her side. Today, we receive a new gift – artificial intelligence – that also comes with a warning: “May produce inaccurate information.” Still, we open the box and hope for the best.

Pandora’s Ledger: Identifying the AI Dangers

It turns out that AI can do a lot worse than produce inaccurate information. Here is a chart that summarizes the top 22 dangers of AI. Again, readers are directed to the full article, Archetypes Over Algorithms: How an Ancient Card Set Clarifies Modern AI Risk, for the details, including multiple examples of damage already caused by these dangers.

| # Card | AI Dangers | Risk Summaries |

| 0 Fool | Reckless Innovation | Launching AI systems without adequate testing or oversight, prioritizing speed over safety. |

| 1 Magician | AI Takeover (AGI Singularity) | A super‑intelligent AGI surpasses human control, potentially dictating outcomes beyond human interests. |

| 2 Priestess | Black Box AI (Opacity) | The system’s internal decision‑making is inscrutable, preventing accountability or error correction. |

| 3 Empress | Environmental Damage | AI’s compute‑hungry infrastructure drives significant carbon emissions and e‑waste. |

| 4 Emperor | Mass Surveillance | Pervasive AI‑driven monitoring erodes civil liberties and chills free expression. |

| 5 Hierophant | Lack of AI Ethics | Developers ignore ethical frameworks, embedding harmful values or goals into deployed models. |

| 6 Lovers | Emotional Manipulation by AI | AI exploits human psychology to steer opinions, purchases, or behaviors against users’ interests. |

| 7 Chariot | Loss of Human Control (Autonomous Weapons) | Weaponized AI systems make lethal decisions without meaningful human oversight. |

| 8 Strength | Loss of Human Skills | Over‑reliance on AI degrades critical thinking and professional expertise over time. |

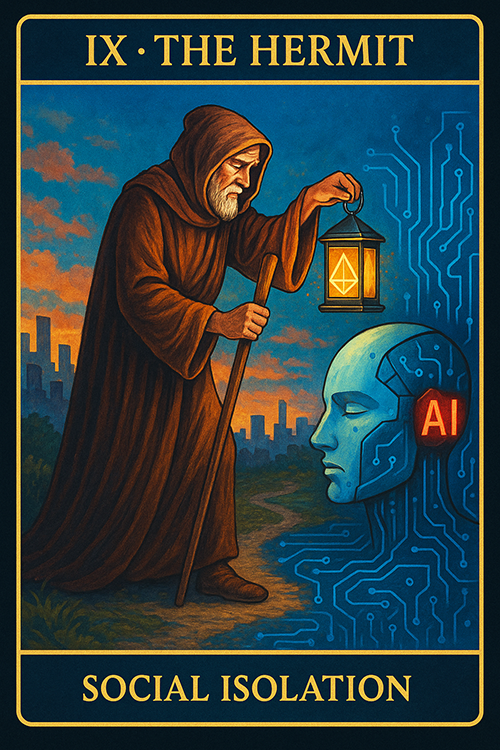

| 9 Hermit | Social Isolation | AI substitutes for human interaction, deepening loneliness and weakening community bonds. |

| 10 Wheel of Fortune | Economic Chaos | Rapid automation reshapes markets and labor structures faster than institutions can adapt. |

| 11 Justice | AI Bias in Decision-Making | Algorithmic outputs perpetuate or amplify societal inequities in hiring, lending, policing, and more. |

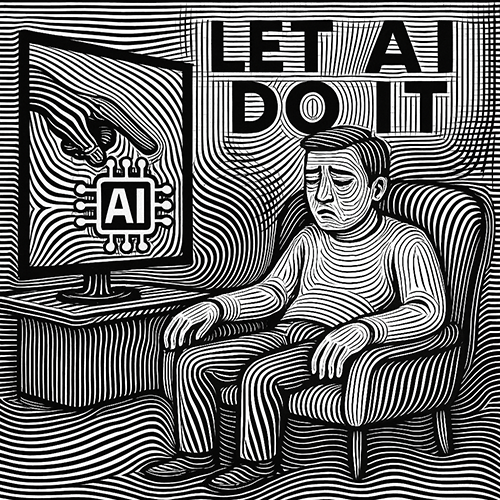

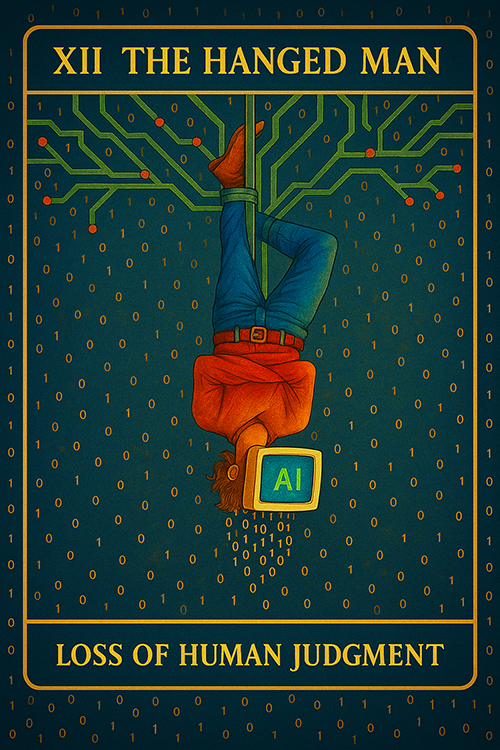

| 12 Hanged Man | Loss of Human Judgment and Initiative. | Humans’ lazy deference to AI recommendations instead of active joint efforts (hybrid) and human supervision. |

| 13 Death | Human Purpose Crisis | Widespread automation triggers existential anxiety about meaning and societal roles. |

| 14 Temperance | Unemployment | AI-driven automation displaces large segments of the workforce without adequate reskilling pathways. |

| 15 Devil | Privacy Sell-Out | AI systems monetize personal data, eroding individual privacy rights. |

| 16 Tower | Bias-Driven Collapse | Systemic biases compound across AI ecosystems, leading to cascading institutional failures. |

| 17 Star | Loss of Human Creativity | Generative AI crowds out original human expression and discourages creative risk‑taking. |

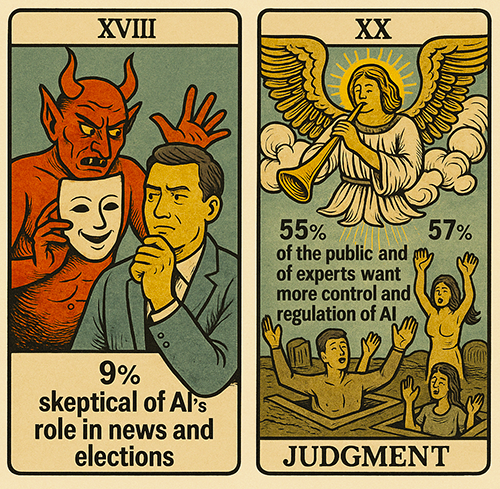

| 18 Devil | Deception (Deepfakes) | Hyper‑realistic synthetic media erode trust in ads and audio‑visual evidence, including trust in news and elections. |

| 19 Sun | Black Box Transparency Problems | Even when disclosures are attempted, technical complexity prevents meaningful transparency. |

| 20 Judgment | Lack of Regulation | Policy lags leave harmful AI applications largely unchecked and unaccountable. |

| 21 World | Unintended Consequences | AI actions can yield unforeseen harms (and good) that emerge only after deployment. |

Ralph’s video interpretation of archetype 12, lazy Loss of Human Judgment and Initiative, using Sora AI and an Op Art style. Audio entirely by Ralph, no AI. His favorite Sora video so far.

Here is the AI-revised Tarot Card Twelve, XII, the Hanged Man:

Polls Show People Feel the Dangers of AI

Pew Research Center found a fifteen-point jump (2021-23) in Americans who were more concerned than excited about AI, with steeper increases among parents (deepfakes) and truckers (job loss). In a more recent (2025) survey, Pew found a big divide between the opinions of AI experts and everyone else. The experts were far more optimistic than the general public about the impact of AI over the next twenty years (56% vs. 17% expecting a positive impact). The general public, in turn, was far more likely than the experts to be more concerned than excited about the future (51% vs. 15%).

Both groups agree about one thing: 9% are skeptical of AI’s role in news and elections (Deception fear, #18). Interestingly, both the public and experts want more control and regulation of AI (55% and 57%) (No Regulation fear, #20).

Short List: Why Deeply Rooted Symbols Are More Effective Than White Papers

- Picture‑Superiority in Action. A lightning‑struck tower brands itself on memory, whereas “§ 501(c)(3)” cites are gone by lunch. Images ride the picture‑superiority effect you just met in Shepard and Brady, so the lesson sticks without flashcards.

- Dual‑Coding Boost. Each card pairs a vivid scene with a single line of text—exactly the word‑plus‑image recipe Paivio and Mayer showed doubles recall. Two cognitive channels, one glance.

- Machine‑Human Rosetta Stone. LLMs have already ingested these archetypes via Common Crawl and museum corpora; mirroring them aligns our mental priors with the model’s statistical priors—teaching faster than a logic tree (unless you’re a Vulcan).

- Cross‑Disciplinary Esperanto. Whether you’re coding, litigating, or pitching Series B, the visual metaphor translates; mixed teams discuss risk without tripping over field‑specific jargon.

- Instant Ethical Gut‑Check. Pictures bypass prefrontal rationalizing and ping the limbic system first, so viewers feel the stakes before the inner lawyer starts red‑lining—often the spark that moves a project from “noted” to “actioned.”

- Narrative Compression. Four hundred pages of risk taxonomy collapse into 22 snapshots that unfold as a built‑in story arc (naïve leap ➜ hard‑won wisdom). Revisit the deck months later and context reloads in seconds—no need to excavate a 60‑page memo.

Remember: These cards aren’t prophecy; they’re road signs. They don’t foretell a wreck, they keep you from missing the curve. Each has a cautionary story to tell.

Card 14 — Temperance: What We Can Do Before the Cliff

Temperance warns of over-indulgence and counsels caution and moderation. Retool workers and temper greed for quick profits.

None of the 22 AI risk warning cards are silver bullets, but they can caution you to strap iron buckles on the Fool’s boots or add timed fuses to the Magician’s lab. In short, they give us what Pandora never had, advice on how to control the new dangers that curiosity let loose.

Here is a summary of tactical safeguards for any AI program.

| Tactical Safeguards | Purpose |
|---|---|
| Red‑Team Testing | Let hackers, domain experts, and ethicists stress‑test the model before launch. Ralph’s favorite. |
| Hard Kill‑Switches | Hardware‑ or API‑level “panic buttons” that halt inference when anomaly thresholds trip. |
| Post‑Deployment Drift Monitors | Always‑on metrics that flag bias creep, performance decay, or emergent behavior. |
| Explainability & Audit Trails | Any AI touching liberty or livelihood must keep a tamper‑proof log—discoverable in court. |
| Sunset & Retrain Clauses | Contract triggers to archive or retrain models after X months, Y incidents, or Z regulation changes. |
| Skill‑Retention Drills | Pilots land with autopilot off monthly; radiologists read raw scans quarterly—humans stay sharp. |
| User‑Literacy Bootcamps | Short, mandatory training makes passive consumers into copilots who notice when autopilot yaws off course. |
| Carbon & Water Accounting | Lifecycle‑emission clauses—and eventually regulation—anchor AI growth to climate reality. |
| Reality Watermarks | Cryptographically sign authentic media to daylight deepfakes. |
| Regulatory Sandboxes | Innovate inside monitored fences; feed lessons back into standards boards. |
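
For readers who code, the “Hard Kill‑Switches” row can be made concrete with a minimal Python sketch. It is an illustration under stated assumptions, not a production design: the `KillSwitch` class, its `threshold` and `max_anomalies` parameters, the `guarded_inference` helper, and the idea of a single numeric anomaly score are all hypothetical names invented here. How anomalies are actually measured is left to your own monitoring stack.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class KillSwitch:
    """Hypothetical API-level 'panic button' (illustrative only)."""
    threshold: float      # assumed: scores above this count as anomalous
    max_anomalies: int    # assumed: anomalies tolerated before halting
    anomalies: int = 0
    halted: bool = False

    def record(self, anomaly_score: float) -> None:
        """Log one observation; trip the switch once the limit is reached."""
        if anomaly_score > self.threshold:
            self.anomalies += 1
        if self.anomalies >= self.max_anomalies:
            self.halted = True


def guarded_inference(switch: KillSwitch,
                      model: Callable[[str], str],
                      prompt: str) -> str:
    """Run the model only while the kill switch has not tripped."""
    if switch.halted:
        raise RuntimeError("Kill switch tripped: inference halted pending human review.")
    return model(prompt)
```

Once the switch trips, it stays tripped until a human resets it. That is a deliberate choice in this sketch, in keeping with the advice to keep a human in control.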

Where Lawyers, Regulators, and Citizens Converge

A deck of time‑tested symbols speeds up teaching, understanding and recall of AI dangers. One picture can outrun a thousand‑word white paper—especially when that image leverages the proven picture‑superiority and dual‑coding effects. So learn the 22 images, share them, and talk through them with colleagues. You’ll remember the “blindfolded judge” or “lonely hermit” long after a dense statute citation fades. And yes, pick a family safe‑word now—never stored online—to foil a deepfake scam by a Devil.

Closing Statement (and Your Invitation)

Foreknowledge is half the battle; the other half is acting before the dangers spread. We already have the pictures, analysis and some examples—what you need now is a group exercise to help your team start prepping. You’re invited to start your practice with a three-step, five‑minute stress‑test.

- Choose a Relevant Archetype: Pick a card.
  - As a team leader (or army of one) pick an archetype—Reckless Innovation, Black‑Box Opacity, Loss of Human Skills—and run the three quick questions below on the next AI system you deploy or advise.
  - For example, are you still in a reckless new product start? Has the team just started using AI? Then pick The Fool.
  - Or did you pass that and are now starting to face dangers of over-confident first use? Pick The Magician.
  - Not sure what card to pick? Throw it out to the whole team and, after discussion, make the call.
  - If you are not afraid of the Hanged Man risk, you could ask AI to make suggestions; then you pick.
  - If all else fails, or just to try a different (yet ancient) approach, just pick a card at random.
- Run Three Rapid‑Fire Questions (about 5 minutes) on the Card Picked.
  - Impact check: “If this risk materializes, who or what gets hurt first?”
  - Control check: “What guardrails or kill‑switches exist or should be set up? How are they working? How should we change them to be more effective?”
  - Signal check: “What early‑warning metric would tell us the risk is emerging or growing too fast?” “Has anyone red-teamed this yet?”
- Score the answers: green, yellow, or red.
  - Green: Satisfying answers on all three checks (impact, control, signal); move on.
  - Yellow: One shaky answer; flag for follow‑up.
  - Red: Two or more shaky answers; escalate before launch or continued operation.
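
The scoring rule above is simple enough to sketch in a few lines of Python, should your team want to log results in a spreadsheet or script. This is one possible encoding, not part of the original drill: the `Score` enum, the `score_card` function, and the convention that `True` means a satisfying answer and `False` a shaky one are assumptions made for illustration.

```python
from enum import Enum
from typing import Mapping


class Score(Enum):
    GREEN = "green"    # all three answers satisfying: move on
    YELLOW = "yellow"  # one shaky answer: flag for follow-up
    RED = "red"        # two or more shaky answers: escalate


# The three checks named in the drill.
CHECKS = ("impact", "control", "signal")


def score_card(answers: Mapping[str, bool]) -> Score:
    """Score one card. `answers` maps each check name to True
    (satisfying answer) or False (shaky answer) -- an assumed encoding."""
    missing = [check for check in CHECKS if check not in answers]
    if missing:
        raise ValueError(f"Missing answers for: {', '.join(missing)}")
    shaky = sum(1 for check in CHECKS if not answers[check])
    if shaky == 0:
        return Score.GREEN
    if shaky == 1:
        return Score.YELLOW
    return Score.RED
```

For example, `score_card({"impact": True, "control": False, "signal": True})` returns `Score.YELLOW`, because exactly one answer was shaky. Whether an answer counts as satisfying remains a human judgment; the code only counts the results.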

Dig deeper if needed. Or repeat and pick a new AI danger card. Modify this drill as you will. Try going through several at a time, or run through all 22 in sequence or other order. Apply these stress-tests to the particular projects and problems unique to your organization. Ask AI to help you to devise completely new projects for danger identification and avoidance, but don’t just let AI do it. Hybrid with human in control is the best way to go. Always verify AI’s input and assert your unique human insights.

Why the exercise helps. In five minutes, you convert an abstract concern into a concrete, color‑coded action item. If everything stays green, you gained peace of mind, for now anyway. If not, you’ve identified exactly where to drill down—before the Fool steps off the cliff.

Explore the full 9,000‑word, image‑rich guide—complete with all 22 images, checklists and personally verified information—here:

Archetypes Over Algorithms: How an Ancient Card Set Clarifies Modern AI Risk.

I give the last word, as usual, to the Gemini twin podcasters that summarize the article. Echoes of AI on: Zero to One: A Visual Guide to Understanding the Top 22 Dangers of AI. Hear two Gemini AIs talk about this article for 16 minutes. They wrote the podcast, not me.

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.
