[EDRM Editor’s Note: EDRM is proud to publish Ralph Losey’s advocacy and analysis. The opinions and positions are Ralph Losey’s copyrighted work. All images in the article are by Ralph Losey using AI. This article is published here with permission.]

Generative AI is not just disrupting industries—it is redefining what it means to trust, govern, and be accountable in the digital age. At the forefront of this evolution stands a new, critical line of employment: AI Risk-Mitigation Officers. This position demands a sophisticated blend of technical expertise, regulatory acumen, ethical judgment, and organizational leadership. Driven by the EU’s stringent AI Act and a rapidly expanding landscape of U.S. state and federal compliance frameworks, businesses now face an urgent imperative: manage AI risks proactively or confront severe legal, reputational, and operational consequences.

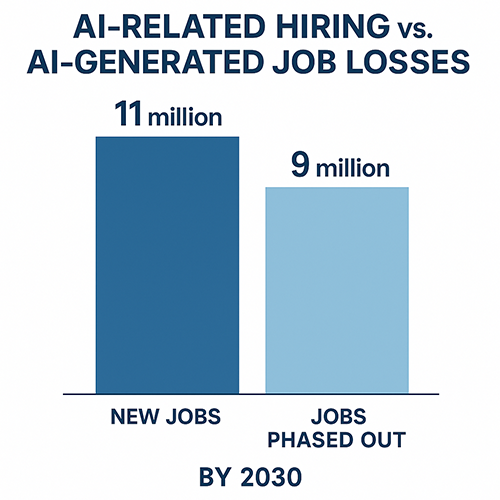

This aligns with a growing consensus: AI, like earlier waves of innovation, will create more jobs than it eliminates. The AI Risk-Mitigation Officer stands as Exhibit A in this next wave of tech-era employment. See, e.g., my recent blog series: Part Two: Demonstration by analysis of an article predicting new jobs created by AI (27 new job predictions); Part Three: Demo of 4o as Panel Driver on New Jobs (more experts discuss the 27 new jobs). See also Robert Capps, A.I. Might Take Your Job. Here Are 22 New Ones It Could Give You. (NYT Magazine, June 17, 2025). In a few key areas, humans will be more essential than ever.

Defining the Role of the AI Risk-Mitigation Officer

The AI Risk-Mitigation Officer is a strategic, cross-functional leader tasked with identifying, assessing, and mitigating risks inherent in AI deployment.

While Chief AI Officers drive innovation and adoption, Risk-Mitigation Officers focus on safety, accountability, and compliance. Their mandate is not to slow progress, but to ensure it proceeds responsibly. In this respect, they are akin to data protection officers or aviation safety engineers, guardians of trust in high-stakes systems.

This role requires a sober analysis of what can go wrong—balanced against what might go wonderfully right. It is a job of risk mitigation, not elimination. Not every error can or should be prevented; some mistakes are tolerable and even expected in pursuit of meaningful progress.

The key is to reduce high-severity risks to acceptable levels—especially those that could lead to catastrophic harm or irreparable public distrust. If unmanaged, such failures can derail entire programs, damage lives, and trigger heavy regulatory backlash.

Both Chief AI Officers and Risk-Mitigation Officers ultimately share the same goal: the responsible acceleration of AI, including emerging domains like AI-powered robotics.

The Risk-Mitigation Office should lead internal education efforts to instill this shared vision—demonstrating that smart governance isn’t an obstacle to innovation, but its most reliable engine.

Why the Role Is Growing

The acceleration of this role is not theoretical. It is propelled by real-world failures, regulatory heat, and reputational landmines.

The 2025 World Economic Forum’s Future of Jobs Report underscores that 86% of surveyed businesses anticipate AI will fundamentally transform their operations by 2030. While AI promises substantial efficiency and innovation, it also introduces profound risks, including algorithmic discrimination, misinformation, automation failures, and significant data breaches.

A notable illustration of these risks is the now-infamous Mata v. Avianca case, where lawyers relied on AI that fabricated case law, underscoring why human verification is non-negotiable. Mata v. Avianca, Inc., No. 1:2022cv01461 – Document 54 (S.D.N.Y. June 22, 2023). The court responded with sanctions. Regulators took notice. The public laughed, then worried.

The legal profession worldwide has been slow to learn Mata’s lesson: verify AI output and take other steps to control AI hallucinations. See Damien Charlotin, AI Hallucination Cases (as of July 2025, over 200 cases and counting, identified by this Paris-based legal scholar whose surname is ironic for this line of work). The need for risk mitigation is growing fast. You cannot simply pass the buck to AI.
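One concrete control that follows from Mata is a pre-filing gate: no AI-drafted citation goes to a court until a human has checked it against a primary source. The Python sketch below is a minimal, hypothetical illustration, not a production tool. It extracts reporter-style citations with a simplified regular expression and flags any that are not in a set of citations a person has already confirmed. The reporter list, the `verified` set, and the sample case names are assumptions made up for the example; real citation formats are far more varied, and a citation passing this check only means someone looked it up, not that the case says what the brief claims.

```python
import re

# Assumption: a deliberately short list of federal reporter abbreviations,
# longest forms first so "F. Supp. 2d" is not cut short to "F. Supp.".
REPORTERS = (
    r"(?:U\.S\.|S\. Ct\.|F\.4th|F\.3d|F\.2d"
    r"|F\. Supp\. 3d|F\. Supp\. 2d|F\. Supp\.)"
)
# Volume number, reporter, first page, e.g. "100 F.3d 200".
CITATION_RE = re.compile(rf"\b\d{{1,4}} {REPORTERS} \d{{1,5}}\b")


def unverified_citations(draft: str, verified: set[str]) -> list[str]:
    """Return citations found in the draft that no human has yet confirmed."""
    found = CITATION_RE.findall(draft)
    # dict.fromkeys removes duplicates while keeping first-seen order.
    return [c for c in dict.fromkeys(found) if c not in verified]


# Hypothetical example: one citation was checked against the official reporter,
# the other came straight from a chatbot and was not.
verified = {"100 F.3d 200"}
draft = (
    "See Alpha v. Beta, 100 F.3d 200 (2d Cir. 1999); "
    "Gamma v. Delta, 300 F. Supp. 2d 400 (S.D.N.Y. 2004)."
)
print(unverified_citations(draft, verified))  # ['300 F. Supp. 2d 400']
```

The design choice is deliberate: the code never decides that a case is real; it only narrows down what a human must verify before filing.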

Risk-mitigation employees and other new AI-related hires will balance out AI-driven layoffs. The 2025 World Economic Forum’s Future of Jobs Report at page 25 predicted that by 2030 there will be 11 million new jobs created and 9 million old jobs phased out. We think the numbers will be higher in both columns, but the 11-to-9 ratio may be right overall, meaning roughly 22% more jobs created than eliminated (11 ÷ 9 ≈ 1.22).

Core Responsibilities

AI Risk-Mitigation Officers are part legal scholar, part engineer, part diplomat. They know the EU AI Act, understand neural nets, and can hold a room full of regulators or engineers without flinching. Key responsibilities include:

- AI Risk Audits: Spot trouble before it starts. Bias, black boxes, security flaws: find them early, fix them fast. This involves detailed pre-deployment evaluations to detect biases, security vulnerabilities, explainability concerns, and data protection deficiencies. It is good practice to follow up with periodic surprise audits.

- Incident Response Management: Don’t wait for a headline to draft a playbook. Develop and lead response protocols for AI malfunctions or ethical violations, coordinating closely with legal, PR, and compliance teams.

- Legal Partnership: Speak both code and contract. Collaborate with in-house counsel to interpret AI regulations, draft protective contractual clauses, and anticipate potential liability.

- Ethics Training: Culture is the best control layer. Educate employees on responsible AI use and cultivate an ethical culture that aligns with both corporate values and regulatory standards.

- Stakeholder Engagement: Transparency builds trust. Silence breeds suspicion. Bridge communication between technical teams, executive leadership, regulators, and the public to maintain transparency and foster trust.
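The bias portion of an AI risk audit can start with a simple comparison of outcomes across groups. One long-standing screening heuristic in U.S. employment practice is the "four-fifths rule" from the federal Uniform Guidelines on Employee Selection Procedures: a group whose selection rate falls below 80% of the highest group's rate is generally treated as showing possible adverse impact. The Python sketch below applies that ratio to a hiring model's results. The group labels and counts are hypothetical, and the rule is a trigger for closer investigation, not a legal conclusion or a complete fairness test.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    group: str
    applicants: int
    selected: int

    def __post_init__(self) -> None:
        # Reject impossible counts before they skew the ratios.
        if self.applicants <= 0 or not 0 <= self.selected <= self.applicants:
            raise ValueError(f"invalid counts for {self.group!r}")

    @property
    def rate(self) -> float:
        return self.selected / self.applicants


def adverse_impact_flags(
    outcomes: list[GroupOutcome], threshold: float = 0.8
) -> dict[str, float]:
    """Flag groups whose selection rate is below threshold x the highest group's rate."""
    best = max(o.rate for o in outcomes)
    if best == 0:
        return {}  # nobody selected in any group: no ratio to compute
    flags = {}
    for o in outcomes:
        ratio = o.rate / best
        if ratio < threshold:
            flags[o.group] = round(ratio, 3)
    return flags


# Hypothetical audit data for a resume-screening model.
outcomes = [
    GroupOutcome("group_a", applicants=200, selected=60),  # rate 0.30
    GroupOutcome("group_b", applicants=150, selected=30),  # rate 0.20
    GroupOutcome("group_c", applicants=100, selected=27),  # rate 0.27
]
print(adverse_impact_flags(outcomes))  # {'group_b': 0.667}
```

Here group_b's rate is two-thirds of group_a's, below the four-fifths line, so it is flagged for human review; group_c, at 90%, is not.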

Skills and Pathways

Professionals in this role must possess:

- Regulatory Expertise: Detailed knowledge of the EU AI Act, GDPR, EEOC guidelines, and evolving U.S. state laws.

- Technical Proficiency: Deep understanding of machine learning, neural networks, and explainable AI methodologies.

- Sector-Specific Knowledge: Familiarity with compliance standards across sectors such as healthcare (HIPAA, FDA), finance (SEC, MiFID II), and education (FERPA).

- Strategic Communication: Ability to effectively mediate between AI engineers, executives, regulators, and stakeholders.

- Ethical Judgment: Skills to navigate nuanced ethical challenges, such as balancing privacy with personalization or fairness with automation efficiency.

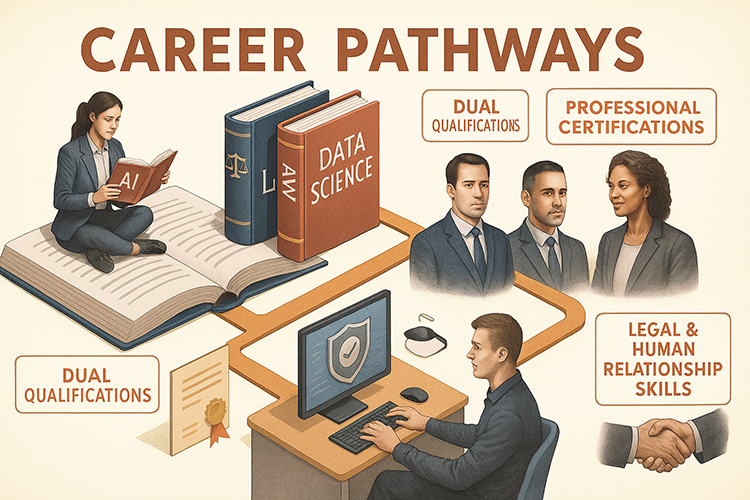

Career pathways for AI Risk-Mitigation Officers typically involve dual qualifications in fields like law and data science, certifications from professional organizations, and practical experience in areas such as cybersecurity, legal practice, politics, or IT. Strong legal and interpersonal skills are a high priority.

U.S. and EU Regulatory Landscapes

The EU codifies risk tiers. The U.S. litigates after the fact. Navigating both requires fluency in law, logic, and diplomacy.

The EU’s AI Act classifies AI systems into four risk categories:

- Unacceptable. AI banned altogether because it poses an unacceptable risk to fundamental rights. Examples include social scoring systems, real-time remote biometric identification in publicly accessible spaces (for law enforcement, with narrow exceptions), and emotion recognition in workplaces and schools. The Act also bans AI-enabled subliminal or manipulative techniques that can be used to push individuals into unwanted, harmful behavior.

- High. AI that could negatively impact the rights or safety of individuals. Examples include AI systems used in critical infrastructure (e.g., transport), law enforcement, border control, the administration of justice, medical devices, education, financial services such as credit scoring, employment and workplace management, and influencing elections and voter behavior.

- Limited. AI systems that pose less risk than high-risk systems but are still subject to transparency requirements. Examples include typical chatbots, whose providers must make users aware that they are interacting with AI.

- Minimal. AI systems that present little risk of harm to the rights of individuals. Examples include AI-powered video games and spam filters. No rules.

Regulation works on a sliding scale: Unacceptable-risk AI is banned entirely, while Minimal-risk AI faces few if any restrictions. In between, Limited-risk systems carry transparency duties, and High-risk systems require mandatory documentation, human oversight, and conformity assessments, some of them performed by independent third parties.
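For an internal AI inventory, the four tiers can be encoded as a simple lookup that tags each system with its tier and a short summary of what that tier demands. The Python sketch below is a hypothetical triage aid under stated assumptions: the obligation summaries paraphrase the tiers as described above, and the use-case labels are invented inventory names, not categories defined by the Act. Real classification turns on the Act's detailed prohibitions and annexes and needs legal review.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Paraphrased summaries of each tier's obligations; not legal text.
OBLIGATIONS: dict[RiskTier, list[str]] = {
    RiskTier.UNACCEPTABLE: ["prohibited: do not deploy"],
    RiskTier.HIGH: ["technical documentation", "human oversight", "conformity assessment"],
    RiskTier.LIMITED: ["tell users they are interacting with AI"],
    RiskTier.MINIMAL: [],
}

# Hypothetical internal inventory labels mapped to tiers, for illustration only.
USE_CASE_TIERS: dict[str, RiskTier] = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "workplace_emotion_recognition": RiskTier.UNACCEPTABLE,
    "resume_screening": RiskTier.HIGH,
    "credit_scoring": RiskTier.HIGH,
    "customer_chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
}


def triage(use_case: str) -> tuple[RiskTier, list[str]]:
    """Look up a use case's tier and obligations; unknown cases are escalated, not guessed."""
    tier = USE_CASE_TIERS.get(use_case)
    if tier is None:
        raise LookupError(f"unclassified use case {use_case!r}: route to legal review")
    return tier, OBLIGATIONS[tier]


tier, duties = triage("resume_screening")
print(tier.name, duties)
# HIGH ['technical documentation', 'human oversight', 'conformity assessment']
```

Note the design choice in `triage`: an unrecognized use case raises an error and is routed to review instead of quietly defaulting to the Minimal tier.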

Meanwhile, U.S. regulatory bodies like the FTC and EEOC, along with state legislatures and state enforcement agencies, are starting to sharpen oversight tools. So far the focus has been on controlling deception, data misuse, bias and consumer harm. This has become a hot political issue in the U.S. See e.g. Scott Kohler, State AI Regulation Survived a Federal Ban. What Comes Next? (Carnegie’s Emissary, 7/3/25); Brownstein, et al, States Can Continue Regulating AI—For Now (JD Supra, 7/7/25).

AI Risk-Mitigation Officers must navigate these disparate regulatory landscapes, harmonizing proactive European requirements with the reactive, litigation-centric U.S. environment.

Legal Precedents and Ethical Challenges

Emerging legal precedents emphasize human accountability in AI-driven decisions, as evidenced by litigation involving biased hiring algorithms, discriminatory credit scoring, and flawed facial recognition technologies. Ethical dilemmas also abound. Choices such as prioritizing efficiency over empathy in healthcare, or tolerating algorithmic opacity in university admissions, require human-centric governance frameworks.

In times past, the Sin Eater bore others’ wrongs. See Part Two: Demonstration by analysis of an article predicting new jobs created by AI (discussing the new sin eater job in detail: a person who assumes moral and legal responsibility for AI outcomes). Today’s Risk-Mitigation Officer is charged with an even more difficult task: preventing the sins from happening at all, or at least reducing them enough to avoid Hades.

Balancing Innovation and Regulation

Cases such as Cambridge Analytica (personal Facebook user data used for propaganda to influence elections) and Boeing’s MCAS software (an automated flight-control system, not disclosed to pilots, that contributed to two fatal 737 MAX crashes) demonstrate that innovation without reasonable governance can be an invitation to disaster. The obvious abuses and errors in these cases could have been prevented had an objective officer been in the room, or really any responsible adult weighing the risks and the moral stakes. For a more recent example, consider xAI’s fiasco with its chatbot. See Grok Is Spewing Antisemitic Garbage on X (Wired, 7/8/25).

Cases like these should put us on guard without provoking overreaction. Disasters easily trigger too much caution and too little courage. That, too, would be a disaster of a different kind, robbing us of much-needed innovation and change.

Reasonable, practical regulation can foster innovation by reducing uncertainty and promoting stakeholder confidence. The trick is to find the proper balance between risk and reward. Many think regulators today go too far in risk avoidance, relying on Luddite fears of job loss and end-of-the-world fantasies to justify extreme regulations. Many also think that such extreme risk avoidance helps those in power maintain the status quo. Pro-technology advocates instead favor a return to the fast pace of change we have seen in the past.

We went to the Moon in 1969. Yet we’re still grounded in 2025. Fear has replaced vision. Overregulation has become the new gravity.

That is why anti-Luddite technology advocates yearn for the good old days of the sixties, when fast-paced advances and adventure were welcome, even expected. If you had told anyone back in 1969 that no human would set foot on the Moon after 1972, not even by 2025, you would have been considered crazy. Why was John F. Kennedy the last bold and courageous leader? Everyone since seems to have been paralyzed with fear. None have pushed great scientific advances. Instead, politicians on both sides of the aisle strangle NASA with never-ending budget cuts and timid machine-only missions. It seems to many that society has been overly risk averse since JFK, and that this has stifled innovation, robbing the world of breakthroughs and of the great rewards that only bold innovations like AI can bring.

Take a moment to remember the 1989 movie Back to the Future Part II. In it, “Doc” Brown, an eccentric genius scientist, travels from 1985 to the future of 2015. There we saw flying cars powered by Mr. Fusion Home Energy Reactors, trash-fueled fusion engines. That is the kind of change we all still expected back in 1989 for the far future of 2015; but now, in 2025, ten years past that projected future, it is still just a crazy dream. The movie did, however, get many minor predictions right: think of flat-screen TVs, video conferences, hoverboards, and self-tying shoes (Nike HyperAdapt 1.0). Fairly trivial stuff that even the go-slow tech Luddites approved.

Conclusion

The best AI Risk-Mitigation Officers will steer between the twin monsters of under-regulation and overreach. Like Odysseus, they will survive by knowing the risks and keeping a steady course between them.

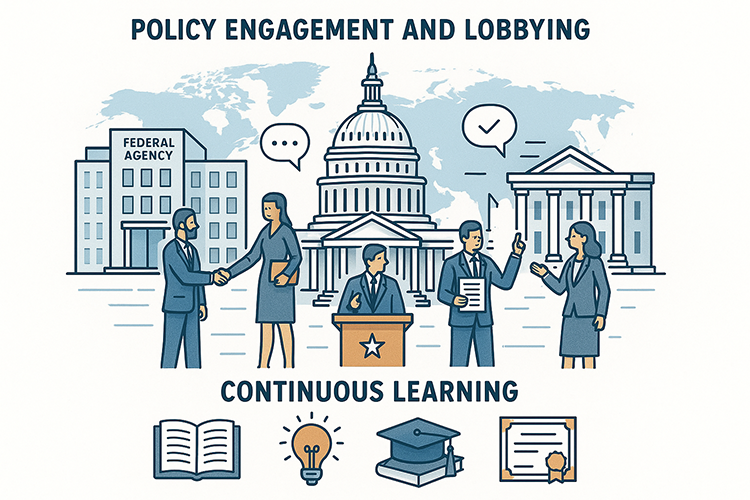

They will play critical roles across society—in law firms, courts, hospitals, companies (for-profit, non-profit, and hybrid), universities, and government agencies. Their core responsibilities will include:

- Standardization Initiatives: Collaborating with international and national standards organizations such as ISO, IEEE, and NIST to craft reasonable, adaptable standards that can inform regulation.

- Development of AI Governance Tools: Encouraging the use of model cards, bias detection systems, and transparency dashboards to track and manage algorithmic behavior.

- Policy Engagement and Lobbying: Actively engaging with legislative and regulatory bodies across jurisdictions—federal, state, and international—to advocate for frameworks that balance innovation with public protection.

- Continuous Learning: Staying ahead of rapid developments through ongoing education, credentialing, and immersion in evolving legal and technological landscapes.
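Model cards, one of the governance tools listed above, are short structured documents that travel with a model and record what it is for, how it was evaluated, and where it should not be used. The Python sketch below represents one as a dataclass with a basic completeness check that a Risk-Mitigation Office might run before sign-off. The fields loosely follow commonly used model-card sections; the `risk_owner` field, the model name, and the metric value are hypothetical.

```python
from dataclasses import dataclass, field, fields


@dataclass
class ModelCard:
    model_name: str
    version: str
    intended_use: str
    out_of_scope_uses: list[str] = field(default_factory=list)
    evaluation_metrics: dict[str, float] = field(default_factory=dict)
    known_limitations: list[str] = field(default_factory=list)
    ethical_considerations: str = ""
    risk_owner: str = ""  # hypothetical field: the person accountable for sign-off

    def missing_sections(self) -> list[str]:
        """Return the names of empty fields; an incomplete card should block sign-off."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


# Hypothetical card for an internal resume-ranking model.
card = ModelCard(
    model_name="resume-ranker",
    version="0.3",
    intended_use="Rank applications for human review, never for automatic rejection",
    evaluation_metrics={"auc": 0.81},
)
print(card.missing_sections())
# ['out_of_scope_uses', 'known_limitations', 'ethical_considerations', 'risk_owner']
```

Treating an empty section as a blocker, rather than a warning, keeps the card from becoming paperwork that is filled in after deployment.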

As this role evolves, AI risk-management specialists will likely develop sub-specialties, possibly forming distinct departments in areas such as regulatory lobbying, algorithm auditing, compliance architecture, and ethical AI research, spanning both hands-on technical work and legal study.

This is a fast-moving field. After writing this article, we noticed that the EU had published a new code of practice aimed only at the largest AI companies, all of them U.S. companies, of course, except one Chinese company. It is non-binding at this point and involves highly controversial disclosure and copyright restrictions. See EU’s AI code of practice for companies to focus on copyright, safety (Reuters, July 10, 2025). It could stifle chatbot development and leave the EU in a stagnant deadlock if these companies withdraw from the EU rather than kill their own models to comply.

Slowdown or reduction is not a viable option at this point because of national security concerns. There is now a military race between the U.S. and China based on competing technology, and AGI-level AI will give the first government to attain it a tremendous military advantage. See, e.g., Buchanan and Imbrie, The New Fire: War, Peace, and Democracy in the Age of AI (MIT Press, 2022); Henry A. Kissinger and Graham Allison, The Path to AI Arms Control: America and China Must Work Together to Avert Catastrophe (Foreign Affairs, 10/13/23); see also Dario Amodei’s Vision (e-Discovery Team, Nov. 2024) (Anthropic CEO Dario Amodei warns of the danger of China winning the race for AI supremacy).

As Eric Schmidt explains, this is now an existential threat and should be a bipartisan issue for the survival of democracy. Kissinger, Schmidt, and Mundie, Genesis: Artificial Intelligence, Hope, and the Human Spirit (Little, Brown, Nov. 2024). See also Former Google CEO Eric Schmidt says America could lose the AI race to China (AI Ascension, May 2025).

Organizations that embrace this new professional archetype will be best positioned to shape emerging regulatory frameworks and deploy powerful, trusted AI systems—including future AI-powered robots. The AI Risk-Mitigation Officer will safeguard against catastrophic failure without throttling progress. In this way, they will help us avoid both dystopia and stagnation.

Yes, this is a demanding job. It will require new hires, team coordination, cross-functional fluency, and seamless collaboration with AI assistants. But failure to establish this critical function risks danger on both fronts: unchecked harm on one side, and paralyzing caution on the other. The best Risk-Mitigation Officers will navigate between these extremes—like Odysseus threading his ship through Scylla and Charybdis.

We humans are a resilient species. We’ve always adapted, endured, and risen above grave dangers. We are adventurers and inventors—not cowering sheep afraid of the wolves among us.

The recent overregulation of science and technology is an aberration. It must end. We must reclaim the human spirit where bold exploration prevails, and Odysseus—not Phobos—remains our model.

We can handle the little thinking machines we’ve built, even if the phobic establishment wasn’t looking. Our destiny is fusion, flying cars, miracle cures, and voyages to the Moon, Mars, and beyond.

Innovation is not the enemy of safety—it is its partner. With the right stewards, AI can carry us forward, not backward.

Let’s chart the course.

PODCAST

As usual, we give the last words to the Gemini AI podcasters who chat between themselves about the article. It is part of our hybrid multimodal approach. They can be pretty funny at times and have some good insights, so you should find it worth your time to listen. Echoes of AI: The Rise of the AI Risk-Mitigation Officer: Trust, Law, and Liability in the Age of AI. Hear two fake podcasters talk about this article for a little over 16 minutes. They wrote the podcast, not me. For the second time we also offer a Spanish version here. (Now accepting requests for languages other than English.)

Ralph Losey Copyright 2025 – All Rights Reserved

Assisted by GAI and LLM Technologies per EDRM GAI and LLM Policy.
